IBM BladeCenter

The IBM BladeCenter was IBM's blade server architecture until it was replaced by Flex System. IBM's x86 server division was later sold to Lenovo in 2014.[1]

History

Introduced in 2002, based on engineering work started in 1999, the IBM BladeCenter was relatively late to the blade server market. It differed from prior offerings in that it offered a range of x86 Intel server processors and input/output (I/O) options. In February 2006, IBM introduced the BladeCenter H with switch capabilities for 10 Gigabit Ethernet and InfiniBand 4X.

A website, Blade.org, served the blade computing community until about 2009.[2]

In 2012 the replacement Flex System was introduced.

Versions

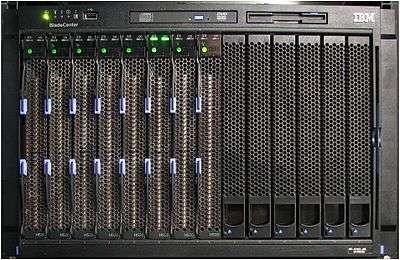

IBM BladeCenter (E)

The original IBM BladeCenter was later marketed as BladeCenter E[3] with 14 blade slots in 7U. Power supplies were upgraded over the life of the chassis, from the original 1200 W units to 1400, 1800, 2000 and 2320 W units.

The BladeCenter (E) was co-developed by IBM and Intel and included:

- 14 blade slots in 7U

- Shared media tray with optical drive, floppy drive and USB 1.1 port

- One (upgradable to two) management modules

- Two (upgradable to four) power supplies

- Two redundant high-speed blowers

- Two slots for Gigabit Ethernet switches (can also have optical or copper pass-through)

- Two slots for optional switch or pass-through modules, which can provide additional Ethernet, Fibre Channel, InfiniBand or Myrinet 2000 functions

IBM BladeCenter T

BladeCenter T is the telecommunications version[4] of the original IBM BladeCenter, available with either AC or DC (48 V) power. It has 8 blade slots in 8U and uses the same switches and blades as the regular BladeCenter E. To maintain NEBS Level 3 / ETSI compliance, special Network Equipment-Building System (NEBS) compliant blades are available.

IBM BladeCenter H

An upgraded BladeCenter design with high-speed fabric options. It fits 14 blades in 9U and is backward compatible with older BladeCenter switches and blades.

Features[5]

- 14 blade slots in 9U

- Shared media tray with optical drive and USB 2.0 port

- One (upgradable to two) Advanced Management Modules

- Two (upgradable to four) power supplies

- Two redundant high-speed blowers

- Two slots for Gigabit Ethernet switches (can also have optical or copper pass-through)

- Two slots for optional switch or pass-through modules, which can provide additional Ethernet, Fibre Channel, InfiniBand or Myrinet 2000 functions

- Four slots for optional high-speed switches or pass-through modules, which can provide 10 Gbit Ethernet or InfiniBand 4X

- Optional hard-wired serial port capability

IBM BladeCenter HT

BladeCenter HT is the telecommunications version[6] of the IBM BladeCenter H, available with either AC or DC (48 V) power. It has 12 blade slots in 12U and uses the same switches and blades as the regular BladeCenter H. To maintain NEBS Level 3 / ETSI compliance, special NEBS compliant blades are available.

IBM BladeCenter S

BladeCenter S targets mid-sized customers by offering storage inside the chassis, so no separate external storage needs to be purchased. It can also run on standard North American 110/120 V power, so it can be used outside the datacenter; at this lower voltage, total chassis capacity is reduced.

Features[7]

- 6 blade slots in 7U

- Shared media tray with optical drive and two USB 2.0 ports

- Up to 12 hot-swap 3.5" (or 24 2.5") SAS or SATA drives with RAID 0, 1 and 1E capability; RAID 5 and SAN capabilities are optional with two SAS RAID controllers

- Two optional Disk Storage Modules for HDDs, each holding six 3.5" SAS/SATA drives

- 4 hot-swap I/O switch module bays

- One Advanced Management Module as standard (no option for a secondary management module)

- Two 950/1450-watt hot-swap power modules, with the option of two more 950/1450-watt power modules for redundancy and additional capacity

- Four hot-swap redundant blowers, plus one fan in each power supply.

Intel based

Modules based on x86 processors from Intel.

HS12

(2008) Features

- Processors ranging from the single-core Celeron 445 to quad-core Intel Xeon processors up to 2.83 GHz

- Six DIMM slots (up to 24 GB)

- Hot-swap drives

HS20

(2002–6) Features

- One or two Intel Xeon DP processors (single or dual-core)

- Four DIMM slots

- Option for one or two 2.5" drives (ATA100, SCSI U320 or Serial Attached SCSI (SAS) depending on generation)

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

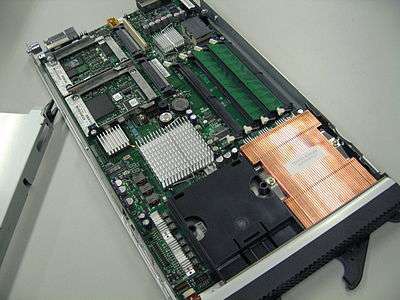

HS21

(2007–8) This blade model can use the high-speed I/O option of the BladeCenter H, but is backwards compatible with the regular BladeCenter.

Features

- One or two Intel Xeon DP processors (dual or quad-core)

- Four DIMM slots

- Option for one or two SAS 2.5" drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

HS21 XM

(2007–8) This blade model can use the high-speed I/O option of the BladeCenter H, but is backwards compatible with the regular BladeCenter.

Features

- One or two Intel Xeon DP processors (dual or quad-core)

- Eight DIMM slots

- Option for one SAS 2.5" drive or one or two SAS-based solid-state drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X as well as additional Fibre-Channel or Ethernet ports)

HS22

(2009–11) Features

- One or two Intel Xeon 5500 or 5600 series processors (up to 3.6 GHz quad-core or 3.46 GHz six-core)

- Twelve DDR3 VLP DIMM slots (up to 192 GB of total memory capacity)

- Option for two hot-swap 2.5" SAS drives or solid-state drives (RAID 0 and 1 supported)

- Two Gigabit Ethernet ports (Broadcom 5709S)

- 1 CIOv slot (standard PCI-Express daughter card) and 1 CFFh slot (high-speed PCI-Express daughter card) for a total of 8 ports of I/O to each blade, including 4 ports of high-speed I/O

- Requires Advanced Management Module

HS22v

(2010–11) Features are very similar to the HS22, except:

- 18 DDR3 VLP DIMM slots, up to 288 GB of total memory capacity

- Up to two 1.8" solid-state drives (not hot-swappable)

- Requires Advanced Management Module

HS23

(2012) Features

- Single-wide

- One or two Intel Xeon E5-2600 processors

- 16 DIMM slots, up to 1600 MHz

- Two hot-swappable HDDs (SATA/SAS) or SSDs

- Two onboard 10 Gbit/1 Gbit Ethernet ports, expandable to four 10 Gbit ports

- Onboard Virtual Fabric / vNIC support

- Requires Advanced Management Module

HS23E

(2012) Features:

- Single-wide

- One or two Intel Xeon E5-2400 processors

- 12 DIMM slots, up to 1600 MHz

- Two hot-swappable HDDs (SATA/SAS) or SSDs

- Two onboard Gigabit Ethernet ports with TCP offload engine (TOE)

- Requires Advanced Management Module

HS40

(2004) Features

- Double-wide (needs 2 slots)

- One to four Intel Xeon MP processors

- Eight DIMM slots

- Option for one or two ATA100 2.5" drives

- Four Gigabit Ethernet ports

- Two expansion slots for up to four additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

HC10

(2008) This blade model is targeted at the workstation market.


HX5

(2010–11) This blade model is targeted at the server virtualization market.

Features

- Two Intel Xeon E7, 6500 or 7500 series processors, expandable to four (4 to 10 cores per CPU, up to 2.67 GHz)

- Sixteen DIMM slots (256 GB max), expandable to 40 slots with a 24-DIMM MAX5 memory blade (640 GB total)

- Two Gigabit Ethernet ports per blade.
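The HX5 memory maximums are consistent with the same DIMM size in every slot. A minimal sketch of that arithmetic follows; the assumption that both maximums use one DIMM size (derived from the 256 GB / 16-slot figure) is not stated in the text.

```python
# Memory arithmetic for the HX5 figures quoted above.
# Assumption: both maximums use a single DIMM size, derived from 256 GB across 16 slots.
BASE_SLOTS = 16      # DIMM slots on the HX5 blade
MAX5_SLOTS = 24      # additional slots on the MAX5 memory blade
BASE_MAX_GB = 256    # stated maximum without MAX5

dimm_gb = BASE_MAX_GB // BASE_SLOTS          # implied DIMM size in GB
total_slots = BASE_SLOTS + MAX5_SLOTS        # slots with MAX5 attached
total_gb = total_slots * dimm_gb             # maximum memory with MAX5

print(f"{dimm_gb} GB DIMMs, {total_slots} slots, {total_gb} GB total")
# prints "16 GB DIMMs, 40 slots, 640 GB total"
```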

AMD based

Modules based on x86 processors from AMD.

LS20

(2005–6) Features

- One or two AMD Opteron processors (single or dual-core)

- Four DIMM slots for DDR1 VLP DIMMs

- Option for one or two SCSI U320 2.5" drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

LS21

(2006)

This blade model can use the high-speed I/O of the BladeCenter H, but is also backwards compatible with the regular BladeCenter.

Features:

- One or two AMD Opteron processors (dual-core); 65 nm quad-core Opterons are supported after a BIOS update (tested)

- Eight DIMM slots for up to 32 GB of RAM

- Option for one SAS or SATA 2.5" drive

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

LS22

(2008) Upgraded model of LS21.

Features:

- One or two 45 nm AMD Opteron processors (quad- or hex-core)

- Eight DIMM slots for up to 64 GB of RAM

- Option for two SAS or SATA 2.5" drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

LS41

(2006–7) This blade model can use the high-speed I/O option of the BladeCenter H, but is backwards compatible with the regular BladeCenter.

Features

- Double-wide (needs 2 slots)

- One to four AMD Opteron processors (dual-core)

- Sixteen DIMM slots for up to 64 GB of RAM

- Option for one or two SAS 2.5" drives

- Four Gigabit Ethernet ports

- Two expansion slots for up to four additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)


LS42

(2008–9) Upgraded model of LS41.

Features

- Double-wide (needs 2 slots)

- One to four AMD Opteron processors (quad- or hex-core)

- Sixteen DIMM slots for up to 128 GB of RAM

- Option for one or two SAS 2.5" drives

- Four Gigabit Ethernet ports

- Two expansion slots for up to four additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

Power based

Modules based on PowerPC- or Power ISA-based processors from IBM.

JS20

(2006) Features

- Can run AIX or Linux

- Two PowerPC 970 processors at 1.6 or 2.2 GHz

- Four DIMM slots for PC2700 ECC memory (max 8 GB)

- Option for one or two ATA100 2.5" drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

JS21

(2006) This blade model can use the high-speed I/O option of the BladeCenter H, but is backwards compatible with the regular BladeCenter.

Features

- Can run AIX or Linux

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- Two PowerPC 970FX single-core processors at 2.7 GHz or two PowerPC 970MP dual-core processors at 2.5 GHz

- Four DIMM slots for PC2-3200 or PC2-4200 ECC memory (max 16 GB)

- Option for one or two SAS 2.5" drives

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

JS22

(2009) Features

- Can run IBM i, AIX or Linux

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- Two POWER6 dual-core processors at 4.0 GHz

- Four DIMM slots for ECC Chipkill DDR2 SDRAM memory (max 32 GB)

- One 2.5" SAS drive up to 146 GB

- Integrated Virtualization Manager (IVM)

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

JS23

(2009) Features

- Can run IBM i, AIX or Linux

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- Two POWER6 dual-core processors at 4.2 GHz

- 64 MB L3 cache (32 MB per processor)

- Four DIMM slots for ECC Chipkill DDR2 SDRAM memory (max 64 GB)

- One 2.5" SAS drive up to 300 GB or one 69 GB SSD

- Integrated Virtualization Manager (IVM)

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

- One CIOv PCIe expansion slot

JS43 Express

Features

- Can run IBM i, AIX or Linux

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- Four POWER6 dual-core processors at 4.2 GHz

- 128 MB L3 cache (32 MB per processor)

- Eight DIMM slots for ECC Chipkill DDR2 SDRAM memory (max 128 GB)

- Zero to two 2.5" SAS drives up to 300 GB, or zero to two 69 GB SSDs

- Integrated Virtualization Manager (IVM)

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

- Two PCIe CIOv expansion slots

- Double-wide form factor

JS12 Express

Features

- Can run IBM i, AIX or Linux

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- One POWER6 dual-core processor at 3.8 GHz

- Eight DIMM slots for ECC Chipkill DDR2 SDRAM memory (max 64 GB)

- Zero to two 2.5" SAS drives up to 146 GB

- Integrated Virtualization Manager (IVM)

- Two Gigabit Ethernet ports, with an optional dual Gigabit Ethernet card

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (4 Gbit/s Fibre Channel, iSCSI, or InfiniBand 4X)

PS700

Features

- Can run IBM i, AIX or Linux on Power

- Can do virtualization, offering Dynamic Logical Partitioning (DLPAR) capabilities.

- One POWER7 quad-core processor at 3 GHz

- Maximum 64 GB of memory

- Two Gigabit Ethernet ports, with an optional dual Gigabit Ethernet card

PS701

Features are very similar to the PS700, except:

- Eight-core processor

- Maximum 128 GB of memory

PS702

Essentially two PS701 blades joined back-to-back, forming a double-wide blade.

PS703

Features are very similar to the PS701, except:

- Two eight-core processors at 2.4 GHz

PS704

Essentially two PS703 blades joined back-to-back, forming a double-wide blade.

Cell based

Modules based on Cell processors from IBM.

QS20

Features

- Double-wide (needs 2 slots)

- Can run Linux

- Two Cell processors at 3.2 GHz

- 1 GB of XDRAM memory (512 MB per processor)

- 40 GB IDE100 2.5" drive

- Two Gigabit Ethernet ports

- Optional InfiniBand 4X connectivity

QS21

Features

- Single-wide

- Can run Linux

- Two Cell processors at 3.2 GHz

- 2 GB of memory (1 GB per processor)

- Two Gigabit Ethernet ports

- One expansion slot for up to two additional ports (Fibre Channel storage, additional Ethernet, Myrinet 2000 or InfiniBand)

- One High-speed expansion slot for up to two additional ports (10 Gbit Ethernet or InfiniBand 4X)

QS22

Features

- Single-wide

- Can run Linux

- Two PowerXCell 8i processors at 3.2 GHz

- Up to 32 GB of DDR2 SDRAM memory

- Two Gigabit Ethernet ports

- Expansion slots for SAS daughter card, InfiniBand 4X DDR daughter card and 8 GB uFDM Flash Drive

UltraSPARC based: T2BC

Themis Computer announced the T2BC blade around 2008. It ran the Solaris operating system from Sun Microsystems. Each module had one UltraSPARC T2 processor with 64 threads at 1.2 GHz and up to 32 GB of DDR2 SDRAM memory.[8]

Advanced network: PN41

Developed in conjunction with CloudShield. Features:[9]

- Single-wide

- Based on Intel IXP2805 network processor

- Full payload screening with deep packet inspection (DPI)

- Full Layer 7 processing and control

- User programmability using an Eclipse-based IDE and the RAVE open development language

- Quad 1 Gbit + quad 10 Gbit Ethernet controllers

- Up to 20 Gbit/s DPI throughput per blade

- Selective traffic capture, rewrite and redirect

- LAN and WAN interfaces

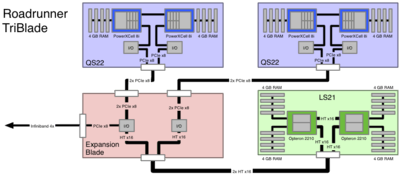

Roadrunner TriBlade

The IBM Roadrunner supercomputer used a custom module called the TriBlade from 2008 through 2013. An expansion blade connects two QS22 modules with 8 GB RAM each to an LS21 module with 16 GB RAM via four PCIe x8 links, two links for each QS22. It also provides outside connectivity via an InfiniBand 4X DDR adapter. A single TriBlade is therefore four slots wide, and three TriBlades fit into one BladeCenter H chassis.[10]
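The slot and memory totals for one TriBlade can be checked with a short sketch. It assumes, as the text implies, that each of the four modules occupies exactly one slot; the dictionary layout is purely illustrative.

```python
# Slot and memory arithmetic for one Roadrunner TriBlade, using the text's figures.
# Assumption: each module listed below occupies exactly one BladeCenter slot.
CHASSIS_SLOTS = 14  # BladeCenter H

# module name -> (count per TriBlade, memory per module in GB; 0 = none stated)
TRIBLADE = {
    "LS21": (1, 16),
    "QS22": (2, 8),
    "expansion blade": (1, 0),
}

width = sum(count for count, _ in TRIBLADE.values())
memory_gb = sum(count * gb for count, gb in TRIBLADE.values())
per_chassis, spare_slots = divmod(CHASSIS_SLOTS, width)

print(f"width={width} slots, memory={memory_gb} GB, "
      f"{per_chassis} per chassis, {spare_slots} slots spare")
# prints "width=4 slots, memory=32 GB, 3 per chassis, 2 slots spare"
```

The two leftover slots explain why only three TriBlades, not three and a half, fit in a 14-slot chassis.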

Switch modules

The BladeCenter can hold a total of four switch modules, but two of the switch module bays accept only an Ethernet switch or Ethernet pass-through. To use the other two bays, each blade that needs them must have a daughtercard installed that provides the required SAN, Ethernet, InfiniBand or Myrinet function. Different types of daughtercards cannot be mixed in the same BladeCenter chassis.
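The bay rules above can be expressed as a small configuration check. This is a hypothetical sketch, not an IBM tool or API: the `Chassis` class, the `check` function, the function names such as `"san"`, and the numbering of the Ethernet-only bays as 1 and 2 are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

ETHERNET_ONLY_BAYS = {1, 2}   # assumed numbering of the two Ethernet-only bays
DAUGHTERCARD_BAYS = {3, 4}    # assumed numbering of the two bays fed by daughtercards


@dataclass
class Chassis:
    # bay number -> function of the installed module: "ethernet", "san", "infiniband", "myrinet"
    switch_bays: Dict[int, str] = field(default_factory=dict)
    # blade slot -> daughtercard function, or None if the blade has no daughtercard
    daughtercards: Dict[int, Optional[str]] = field(default_factory=dict)


def check(chassis: Chassis) -> List[str]:
    """Return a list of rule violations (empty if the configuration follows the rules)."""
    problems = []
    for bay, function in sorted(chassis.switch_bays.items()):
        if bay in ETHERNET_ONLY_BAYS:
            if function != "ethernet":
                problems.append(f"bay {bay} accepts only an Ethernet switch or pass-through")
        elif bay not in DAUGHTERCARD_BAYS:
            problems.append(f"bay {bay} does not exist")

    # Daughtercard types may not be mixed within one chassis.
    card_types = {c for c in chassis.daughtercards.values() if c is not None}
    if len(card_types) > 1:
        problems.append(f"mixed daughtercard types: {sorted(card_types)}")

    # A module in a daughtercard bay only serves blades whose card provides the same function.
    for bay in sorted(DAUGHTERCARD_BAYS & set(chassis.switch_bays)):
        if card_types and chassis.switch_bays[bay] not in card_types:
            problems.append(f"bay {bay} module does not match any installed daughtercard")
    return problems


ok = Chassis(switch_bays={1: "ethernet", 2: "ethernet", 3: "san", 4: "san"},
             daughtercards={1: "san", 2: "san", 3: None})
bad = Chassis(switch_bays={1: "san", 3: "san"},
              daughtercards={1: "san", 2: "infiniband"})
print(check(ok))   # prints []
print(check(bad))  # a SAN module in an Ethernet-only bay, plus mixed daughtercards
```

Blades without a daughtercard (`None`) are simply not served by bays 3 and 4, which matches the text's "each blade that needs it" wording.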

Gigabit Ethernet

Gigabit Ethernet switch modules were produced by IBM, Nortel, and Cisco Systems. BLADE Network Technologies, later acquired by IBM, also produced some switches. In all cases, switching between the blades inside the BladeCenter is non-blocking. Switches have four to six external Gigabit Ethernet ports, which can be either copper or optical fiber.

Storage Area Network

A variety of SAN switch modules have been produced by QLogic, Cisco, McData (acquired by Brocade) and Brocade, at Fibre Channel speeds of 1, 2, 4 and 8 Gbit/s. The link speed between the SAN switch and a blade is the lowest common denominator of the blade's HBA daughtercard and the SAN switch. External port counts vary from two to six, depending on the switch module.
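The lowest-common-denominator rule amounts to picking the fastest speed that both ends of the link support. A minimal sketch follows; the speed sets used for the HBA and switch are illustrative assumptions, not specifications of particular modules.

```python
from typing import Set


def negotiated_speed(hba_speeds: Set[int], switch_speeds: Set[int]) -> int:
    """Fastest Fibre Channel speed (Gbit/s) supported by both ends of the link."""
    common = hba_speeds & switch_speeds
    if not common:
        raise ValueError("HBA and switch share no Fibre Channel speed")
    return max(common)


# Illustrative speed sets (assumed): a 4 Gbit HBA and an 8 Gbit switch module.
hba_4g = {1, 2, 4}
switch_8g = {2, 4, 8}
print(negotiated_speed(hba_4g, switch_8g))  # prints 4
```

Modelling each side as a set of supported speeds, rather than a single maximum, also covers the case where a newer switch no longer supports the oldest speed of an older HBA.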

InfiniBand

An InfiniBand switch module was produced by Cisco. The link from each blade's InfiniBand daughtercard to the switch is limited to IB 1X (2.5 Gbit/s). Externally, the switch has one IB 4X port and one IB 12X port. The 12X port can be split into three 4X ports, giving four IB 4X ports in total and a theoretical external bandwidth of 40 Gbit/s.
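The 40 Gbit figure follows from the per-lane rate of 2.5 Gbit. The sketch below also compares it with the aggregate of the internal 1X links; the 14-blade count assumes a fully populated 14-slot chassis, and all figures are theoretical signaling rates rather than usable throughput.

```python
LANE_GBIT = 2.5  # IB 1X signaling rate given in the text


def link_gbit(lanes: int) -> float:
    """Theoretical signaling rate of an InfiniBand link with the given lane count."""
    return lanes * LANE_GBIT


# External side: one 4X port, plus one 12X port split into three 4X ports.
external_4x_ports = 1 + 12 // 4
external_gbit = external_4x_ports * link_gbit(4)

# Internal side: one 1X link per blade; assumes a fully populated 14-slot chassis.
BLADES = 14
internal_gbit = BLADES * link_gbit(1)

print(external_4x_ports, external_gbit, internal_gbit)  # prints 4 40.0 35.0
```

Under these assumptions the external ports (40 Gbit) can carry the combined traffic of all blade links (35 Gbit), even though each individual blade is capped at 1X.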

Pass-through

Two kinds of pass-through module are available: copper pass-through and fibre pass-through. The copper pass-through can be used only with Ethernet, while the fibre pass-through can be used for Ethernet, SAN or Myrinet.

Bridge

Bridge modules are compatible only with the BladeCenter H and BladeCenter HT. They function like Ethernet or SAN switches but bridge the traffic to InfiniBand. The advantage is that the operating system on the blade sees ordinary Ethernet or SAN connectivity, while inside the BladeCenter all traffic is routed over InfiniBand.

High-speed switch modules

High-speed switch modules are compatible only with the BladeCenter H and BladeCenter HT. A blade that needs the function must have a high-speed daughtercard installed. Different high-speed daughtercards cannot be mixed in the same BladeCenter chassis.

10 Gigabit Ethernet

A 10 Gigabit Ethernet switch module was available from BLADE Network Technologies. It provided 10 Gbit/s connectivity to each blade and to networks outside the BladeCenter.

InfiniBand 4X

There are several InfiniBand options:

- A high-speed InfiniBand 4X SDR switch module from Cisco. This allows IB 4X connectivity to each blade. Externally, the switch has two IB 4X ports and two IB 12X ports. Each 12X port can be split into three 4X ports, providing a total of eight IB 4X ports and a theoretical external bandwidth of 80 Gbit/s. Internally, between the blades, the switch is non-blocking.

- A high-speed InfiniBand pass-through module to connect the blades directly to an external InfiniBand switch. This pass-through module is compatible with both SDR and DDR InfiniBand speeds.

- A high-speed InfiniBand 4X QDR switch module from Voltaire (later acquired by Mellanox Technologies). This allows full IB 4X QDR connectivity to each blade. Externally the switch has 16 QSFP ports, all 4X QDR capable.
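The external port counts of the two high-speed switches translate into theoretical bandwidth as sketched below. The per-lane rates of 2.5 Gbit/s (SDR) and 10 Gbit/s (QDR) are standard InfiniBand signaling rates; the 640 Gbit/s result for the Voltaire switch is derived here and is not a figure stated in this article.

```python
# Per-lane InfiniBand signaling rates in Gbit/s (theoretical, before encoding overhead).
LANE_GBIT = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}


def external_gbit(ports_4x: int, rate: str) -> float:
    """Theoretical external bandwidth of a switch with the given number of 4X ports."""
    return ports_4x * 4 * LANE_GBIT[rate]


cisco_sdr_ports = 2 + 2 * (12 // 4)  # two 4X ports, plus two 12X ports split three ways
voltaire_qdr_ports = 16              # 16 QSFP ports, all 4X QDR

print(cisco_sdr_ports, external_gbit(cisco_sdr_ports, "SDR"))        # prints 8 80.0
print(voltaire_qdr_ports, external_gbit(voltaire_qdr_ports, "QDR"))  # prints 16 640.0
```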

See also

- The IBM Roadrunner was implemented with IBM BladeCenter components, from 2008 through 2013[11]

- All supercomputers of the Spanish Supercomputing Network employ the IBM BladeCenter, including Magerit and MareNostrum (the two most powerful supercomputers in Spain) and six smaller supercomputers.

- The Ljubljana Supercomputing Center employs the IBM BladeCenter

- Weta Digital used the IBM BladeCenter to render The Lord of the Rings and other films

References

- Kunert, Paul (23 January 2014). "It was inevitable: Lenovo stumps up $2.3bn for IBM System x server biz". channelregister.co.uk. The Register. Retrieved 23 January 2014.

- "Blade Server Information from Blade.org". Archived from the original on August 16, 2009. Retrieved July 18, 2013.

- "IBM BladeCenter E chassis specifications". IBM. 2007-02-05. Archived from the original on 2012-09-18.

- "IBM BladeCenter T chassis specifications". IBM. 2006-01-17. Archived from the original on 2012-09-18.

- "IBM BladeCenter H chassis specifications". IBM. 2008-10-07. Archived from the original on 2012-09-18.

- "IBM BladeCenter HT chassis specifications". IBM. 2008-01-26. Archived from the original on 2012-09-18.

- "IBM BladeCenter S chassis specifications". IBM. 2008-10-07. Archived from the original on 2012-09-18.

- "T2BC Blade Servers". Themis Computer. Archived from the original on June 5, 2008. Retrieved July 18, 2013.

- "IBM PN41 network blade". IBM. 2008-08-27. Archived from the original on 2012-09-18. Retrieved 2009-06-15.

- Ken Koch (March 13, 2008). "Roadrunner Platform Overview" (PDF). Los Alamos National Laboratory. Retrieved July 18, 2013.

- Montoya, Susan (March 30, 2013). "End of the Line for Roadrunner Supercomputer". The Associated Press. Retrieved July 18, 2013.

External links


- IBM BladeCenter homepage

- Lenovo Press - replaced IBM Redbooks for BladeCenter

- IBM BladeCenter Products and Technology - IBM Redbooks

- BladeCenter HS23 (E5-2600) Product Guide

- Lenovo BladeCenter E Product Guide - Lenovo Press

- Installing VMware vSphere 5.5 on IBM BladeCenter H22

- IBM BladeCenter Product Publications Quick Reference - IBM Redbooks

- Intel Server Products

- x4live.com - Latest News and Links on Modular System x, Modular BladeCenter and Systems Management

- IBM's Online Server Configurator

- coreipm.com - Board Management Controller (BMC) firmware & other information for building BladeCenter compatible blades