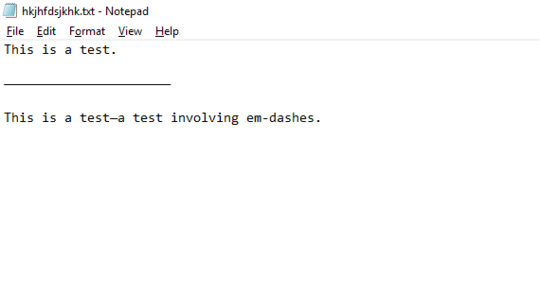

I can reproduce the problem.

Reason: Autodetection of file encoding.

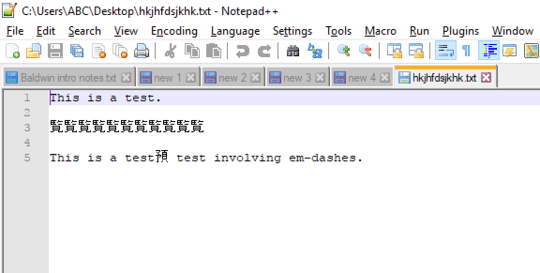

Your file is encoded in standard 8-bit code table, namely Windows-1252 (as indicated in your comment below the question), one of ANSI 8-bit encodings, which has 256 possible characters. But it looks like the Notepad++ is interpreting the file containing em-dashes as if it was in Shift-JIS encoding. (This encoding can be seen on status bar near bottom right corner of Notepad++ main window when the issue occurs.) Therefore, Notepad++ interprets characters with ASCII value greater than 127 found in the file as Japanese characters.

Solution: Change encoding of your file to UTF-8 (or another suitable encoding).

- Open your file.

- Using menu Encoding > Character set > Western European > Windows-1252 switch to the right encoding, where characters appear as expected.

- Using menu Encoding > Convert to UTF-8. Status bar indicator near bottom right corner now shows UTF-8-BOM.

- Save your file.

Maybe you can object you do not want UTF-8, but you did not indicate that limitation in the question and generally, there is no reason not to use it. It will keep all the characters stable, without appearence issues you encountered. The limitation can be processing in older applications/tools. Then you need to stick with ANSI encoding they require.

Additional information:

UTF-8 is fully supported by Notepad which comes with Windows, so you are not going to get troubles here. However, I recommend using UTF-8 files with BOM. UTF-8 without BOM works, too, but when the mark is missing, editors rely on format auto-detection and as you can see, it can sometimes go wrong. I have seen that some older programs complained about BOM marker like "Invalid characters at start of the file." and then I converted my file into UTF-8 without BOM.

Unicode standard supports more than 256 code points: the total supported number is 1,114,112. According to Wikipedia, this space is currently used by 136,755 characters covering 139 modern and historic scripts, as well as multiple symbol sets. The rest is reserve for future use. As you can see, Unicode is the encoding covering most of world's commonly used characters so you should never fall into code page issues again. You do not need to stick with UTF-8, Unicode can be also represented as UTF-16, UTF-32 or in several more exotic representations (UTF-7, UTF-1 and others) or in non-transitional forms like UCS-4. Of them, UTF-8 is most commonly supported so I recommend this one. Without using characters above code point 127 it is compatible with ASCII (except of the BOM mark, which is visible in ASCII as few garbage characters at the start of the file).

If any program needs a code page from you, select code page 65001 for UTF-8.

If you want to explore all characters of the Unicode, including searching or filtering by their name or other properties or identifying unknown characters, use for example BabelMap.

I would suggest you may want to check your Encoding settings. That said, even playing with those, I was unable to reproduce the issue with true Em dashes (Alt+0151 in Windows). My guess is it's external (source or system settings) perhaps? – Anaksunaman – 2017-07-01T04:44:11.450

@Anaksunaman My settings are the default installation settings. I have no idea what "external" factors could be at work. This only happens in Notepad++. – 76987 – 2017-07-01T07:00:32.707

@76987 I was able to emulate the problem just as you explain my creating a notepad document with the Alt+0151

—and then when I opened up on Notepad++ it looked similar to your screen shot. This is likely just how Notepad++ interprets that character but if you go up to the encoding options and change it to others (e.g. ANSI) you will see the interpreted character change to something different. I think this is simple how the text editor software interprets the characters. – Pimp Juice IT – 2017-07-03T03:56:47.050@McDonald's By changing the En̲coding > Character sets option to Windows-1252, I was able to make the em-dashes appear. However, once I close and re-open the txt file, the Chinese characters will have returned. I tried changing the Encoding settings for new documents in Preferences, but that didn't help. So the solution seems to be a merely temporary solution. – 76987 – 2017-07-03T06:00:52.263

@76987 - So you can change the default in Notepad++ to always use the encoding you need it to for showing the characters for example see the screen shot here... but go to

– Pimp Juice IT – 2017-07-03T11:13:40.677Settings|Preferences|New Document| and then select the option from the Encoding section that suites your needs. Next time you close then open the document, then the new encoding you select should be the Notepad++ default.