78

27

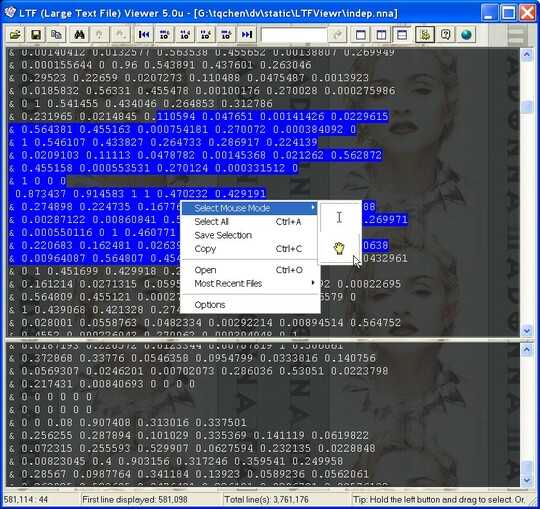

Is there a tool to split a large text file (9 GB) into smaller files so that I can open them and look through them?

Anything usable from command line that comes with Windows (XP)?

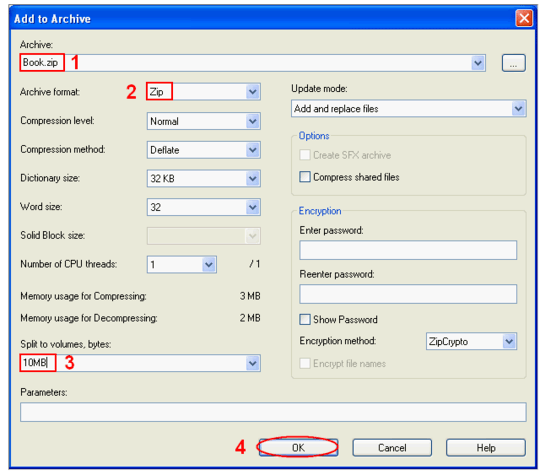

Or what's the best way to split it? Can I use 7z to create separate volumes and then unzip one of them separately? Will it be readable or does it need all the other parts to unzip into the big file again?

Update

I put together a quick 48-line Python script that splits the large file into 0.5 GB files, which are easy to open even in vim. I just needed to look through the data towards the end of the log (yes, it is a log file). Each record spans multiple lines, so grep would not do.
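The script itself was never posted. As a rough illustration only, here is a minimal sketch of what such a splitter might look like, assuming the goal is parts of about 0.5 GB that break only at line boundaries, so no single line is cut in half. A multi-line record can still straddle two parts. The function name `split_file` and the `.partNNN` naming scheme are hypothetical, not taken from the thread.

```python
import os


def split_file(path, chunk_bytes=512 * 1024 * 1024, out_dir=None):
    """Split `path` into numbered parts of roughly `chunk_bytes` each.

    Hypothetical helper. Parts break only between lines, so a part can
    exceed `chunk_bytes` by at most one line. Returns the part paths in order.
    """
    out_dir = out_dir or os.path.dirname(os.path.abspath(path))
    base = os.path.basename(path)
    parts = []
    out = None
    written = 0
    # Binary mode: no decoding and no newline translation, so the parts
    # concatenate back to exactly the original bytes.
    with open(path, "rb") as src:
        for line in src:  # streams one line at a time; memory use stays small
            # Open a new part if none is open yet or the current one is full.
            if out is None or written >= chunk_bytes:
                if out is not None:
                    out.close()
                # Hypothetical naming scheme: big.log.part000, big.log.part001, ...
                part_path = os.path.join(out_dir, "%s.part%03d" % (base, len(parts)))
                out = open(part_path, "wb")
                parts.append(part_path)
                written = 0
            out.write(line)
            written += len(line)
    if out is not None:
        out.close()
    return parts
```

Calling `split_file("big.log")` would write `big.log.part000`, `big.log.part001`, and so on next to the original. If only the end of the log matters, `parts[-1]` is the file to open first.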

I notice you solved your problem. If you're still around, could you post the solution so others might benefit? – Journeyman Geek – 2012-10-19T03:30:02.183

This has been discussed in much detail on Stack Overflow. – Rishi Dua – 2013-06-26T06:33:00.453

I see you edited to mention grep. Do you have Cygwin or UnxUtils installed? You could have used `grep -n` with `head` and `tail` to see chunks of the file. For example, `grep -n "something" file.txt` returns `95625: something`. To see that line and the 9 lines below it, for a total of 10 lines, use `head -n 95634 file.txt | tail -n 10`. – John T – 2010-01-11T04:10:04.923
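Without Cygwin or UnxUtils, the same `grep -n` then `head`/`tail` workflow can be approximated in Python, which the asker already had. This is a minimal sketch. The helper names `grep_n` and `line_window` are hypothetical, not from the thread. Both stream the file line by line, so memory stays small even for a 9 GB log. As with `head`, though, each call rescans the file from the start.

```python
import itertools
import re


def grep_n(path, pattern):
    """Yield (line_number, line) for lines matching the regex `pattern`.

    Hypothetical helper. Lines are numbered from 1, like `grep -n`.
    """
    regex = re.compile(pattern)
    # errors="replace" keeps stray bad bytes in a log from aborting the scan.
    with open(path, "r", errors="replace") as f:
        for number, line in enumerate(f, start=1):
            if regex.search(line):
                yield number, line.rstrip("\n")


def line_window(path, start, count=10):
    """Return up to `count` lines beginning at 1-based line `start`.

    Hypothetical helper. When the file is long enough, this gives the same
    lines as `head -n START+COUNT-1 file | tail -n COUNT`.
    """
    with open(path, "r", errors="replace") as f:
        # islice uses 0-based indices, so line `start` is index start - 1.
        window = itertools.islice(f, start - 1, start - 1 + count)
        return [line.rstrip("\n") for line in window]
```

For example, `for n, _ in grep_n("file.txt", "something"): print("\n".join(line_window("file.txt", n)))` prints each match followed by the 9 lines below it.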