I have read a lot about this, but what I found so far did not make much sense to me, so I am asking a new question about Java and memory here.

I am starting a Java app with the following command-line arguments:

-Xms48m

-Xmx96m

-XX:MetaspaceSize=80m

-XX:MaxMetaspaceSize=150m

Within my app, I have some code like this to display the used memory:

Runtime runtime = Runtime.getRuntime();

//Used mem: runtime.totalMemory() - runtime.freeMemory()

//Total mem: runtime.totalMemory()

//Max mem: runtime.maxMemory()

The results are as expected:

Used Memory: 43 mb

Free Memory: 16 mb

Total Memory: 60 mb

Max Memory: 96 mb

I also triggered a garbage collection, took a heap dump and analysed it, and it also reports that around 43 MB are used.

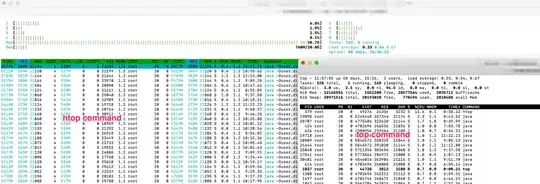
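For reference, the in-app report could be reproduced with a small standalone class like the one below. This is only a sketch: the class name `MemoryReport` and the helper `toMb` are made up for illustration and are not the actual code from my app. The second half goes beyond what I print today and lists the non-heap figures (Metaspace, code cache and so on) through the standard `java.lang.management` beans, since `Runtime` only reports on the Java heap.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

// Hypothetical helper class, not taken from the real application.
class MemoryReport {
    // Converts bytes to whole mebibytes (rounds down).
    static long toMb(long bytes) {
        return bytes / (1024L * 1024L);
    }

    public static void main(String[] args) {
        // Heap figures, as reported by Runtime.
        Runtime runtime = Runtime.getRuntime();
        long total = runtime.totalMemory();
        long free = runtime.freeMemory();
        System.out.println("Used Memory: " + toMb(total - free) + " mb");
        System.out.println("Free Memory: " + toMb(free) + " mb");
        System.out.println("Total Memory: " + toMb(total) + " mb");
        System.out.println("Max Memory: " + toMb(runtime.maxMemory()) + " mb");

        // Non-heap memory managed by the JVM (not visible through Runtime).
        MemoryUsage nonHeap = ManagementFactory.getMemoryMXBean().getNonHeapMemoryUsage();
        System.out.println("Non-heap used: " + toMb(nonHeap.getUsed())
                + " mb, committed: " + toMb(nonHeap.getCommitted()) + " mb");

        // Per-pool breakdown; pool names depend on the JVM and the collector in use.
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (usage != null) { // getUsage() returns null for an invalid pool
                System.out.println(pool.getName() + " (" + pool.getType() + "): "
                        + toMb(usage.getUsed()) + " mb used");
            }
        }
    }
}
```

Because each value is rounded down separately in this sketch, the used and free figures may not add up exactly to the total. Native allocations such as thread stacks are not covered by these beans either, so the sum printed here can still be lower than what the operating system shows.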

So far, this part is fine. But when I run the htop command on Linux, I get these numbers for my Java app:

RES: 409M

DATA: 611M
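To log the OS-side numbers from inside the app, next to the heap numbers, something like the following could be used. It is a Linux-only sketch that reads `VmRSS` and `VmData` from `/proc/self/status`. `VmRSS` should roughly correspond to the RES column in htop; `VmData` is related to the DATA column, but I am not certain the two are computed in exactly the same way. The names `ProcStatus` and `parseKb` are hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

// Hypothetical helper class; assumes a Linux /proc filesystem.
class ProcStatus {
    // Returns the value in kB for a key such as "VmRSS", or -1 if the key is absent.
    static long parseKb(List<String> lines, String key) {
        String prefix = key + ":";
        for (String line : lines) {
            if (line.startsWith(prefix)) {
                // Expected line shape: "VmRSS:<whitespace>418816 kB"
                String[] parts = line.substring(prefix.length()).trim().split("\\s+");
                return Long.parseLong(parts[0]);
            }
        }
        return -1L;
    }

    public static void main(String[] args) throws IOException {
        // /proc/self/status describes the current process (the JVM itself).
        List<String> lines =
                Files.readAllLines(Paths.get("/proc/self/status"), StandardCharsets.UTF_8);
        System.out.println("VmRSS:  " + parseKb(lines, "VmRSS") / 1024 + " MB");
        System.out.println("VmData: " + parseKb(lines, "VmData") / 1024 + " MB");
    }
}
```

Logging these values periodically together with the heap figures would show whether the growth happens inside or outside the Java heap.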

My questions:

- Why are these numbers so much higher than what the JVM itself reports?

- After a restart, the app starts at RES: 224M, DATA: 339M and then keeps growing until, after about a day, it reaches the 409M/611M mentioned above. At that point I restart the application with a cron job, because otherwise the server would run out of RAM. How can I prevent this growth?

(I have 80 instances of the same app running on a server with 32GB RAM).
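To put this into perspective, here is a quick back-of-the-envelope calculation for the whole server. It is only a sketch: `FleetMemory` and `totalGb` are made-up names, and it assumes that the M in htop means MiB and that all 80 instances behave the same.

```java
import java.util.Locale;

// Hypothetical helper class for a rough estimate only.
class FleetMemory {
    // Total resident memory in GiB for a number of identical instances.
    static double totalGb(int instances, long resMbPerInstance) {
        return instances * resMbPerInstance / 1024.0;
    }

    public static void main(String[] args) {
        // 224M right after a restart, 409M after about a day (values from htop).
        System.out.println(String.format(Locale.ROOT,
                "After restart: %.1f GB", totalGb(80, 224)));
        System.out.println(String.format(Locale.ROOT,
                "After one day: %.1f GB", totalGb(80, 409)));
    }
}
```

That works out to about 17.5 GB right after a restart and just under 32 GB after a day, which is why the daily restart is currently necessary.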

Here is a screenshot of the situation:

Platform:

- OS: Ubuntu 16.04.6 LTS

- Java: OpenJDK Runtime Environment (build 1.8.0_232-8u232-b09-0ubuntu1~16.04.1-b09) / OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode)

- Java App: Play Framework v2.7 app