I'm currently investigating the use of HAProxy as a Load Balancer. I've found that the recommended configuration for HA of the HAProxy itself (not the backends) involves using keepalived to establish a VIP that both systems respond to. This means that when the primary stops being active on the network, the passive HAProxy sends a gratuitous ARP message and instructs the network to send all traffic for that VIP to it instead.

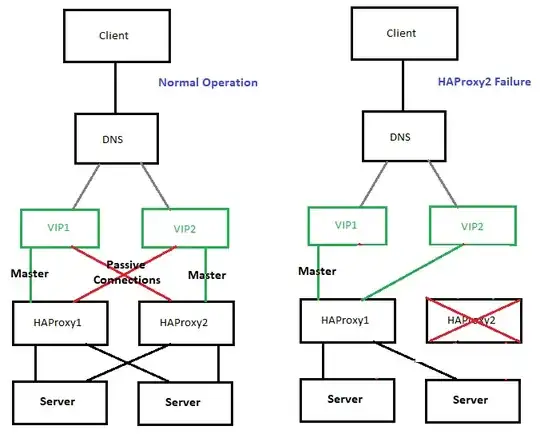

This looks to be a good solution but it prevents an Active-Active Load Balancing setup for the HAProxies. I'm curious as to any methods that allow both HAProxies to respond to traffic and maintain reasonable uptime in case of a failure on one of them. DNS Round robin between two VIPs managed by the HAProxies, with opposite masters, seems like a reasonable solution but I haven't found any guidance on whether keepalived can support two VIPs on one system simultaneously.

Terrible MSPaint diagram to illustrate:

Is this possible? Is this a reasonable solution? Have you seen any alternate solutions which are viable?

Edit: I found the following after posting which suggests it's possible but suggests using CLUSTERIP as an alternative. I'm not certain about the compatibility between CLUSTERIP and HAProxy.