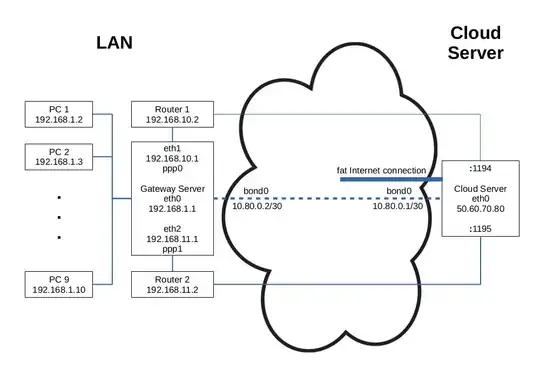

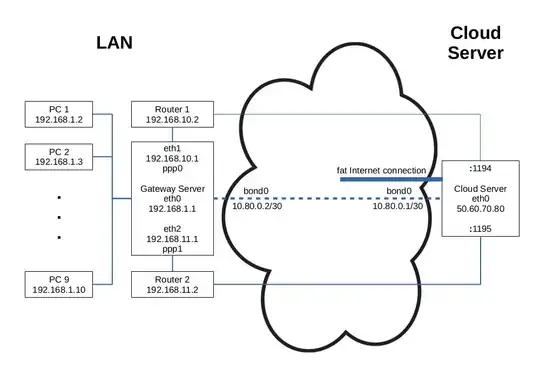

This solution applies Linux Ethernet Bonding to two independent OpenVPN connections from a local gateway server to a server in the cloud that you have root access to, such as a Linode or a DigitalOcean Droplet. The two Internet connections underlying the OpenVPN connections use two ADSL routers in transparent bridge mode, i.e. we use only their modem functionality and create the connections via the PPP over Ethernet (PPPoE) driver. The firewalls on both ends of the bonded connection are implemented with FireHOL.

The diagram gives an overview. The LAN PCs are connected to eth0, 192.168.1.1/24, on the Gateway Server. The two routers are connected via individual, physical network interfaces eth1, 192.168.10.1/24, and eth2, 192.168.11.1/24. (I couldn't get PPPoE to work with virtual interfaces on eth0.) The PPPoE driver creates the interfaces ppp0 and ppp1 for the individual connections to the ISP. OpenVPN binds to the Gateway Server's 192.168.10.1 and 192.168.11.1 addresses on the LAN side, and to ports 1194 and 1195 of the Cloud Server's eth0 interface, 50.60.70.80/24. These two OpenVPN connections are then bonded to create the virtual interfaces 10.80.0.2/30 on the LAN side and 10.80.0.1/30 on the Cloud Server side. Defining the Cloud Server's 10.80.0.1 address as the default gateway on the LAN's Gateway Server allows all LAN computers to access the Internet at almost double the speed of one individual PPPoE connection.

The following configuration is based on Ubuntu Server (works here with 16.04 on the LAN side and 18.04 on the Cloud side). All commands assume root privileges.

Cloud Server Side

OpenVPN Tunnels

Install the latest OpenVPN version (the commands below are for 18.04; for 16.04, replace bionic with xenial):

cloud-server# wget -O - https://swupdate.openvpn.net/repos/repo-public.gpg|apt-key add -

cloud-server# echo "deb http://build.openvpn.net/debian/openvpn/stable bionic main" > /etc/apt/sources.list.d/openvpn-aptrepo.list

cloud-server# apt update && apt install openvpn

In /etc/default/openvpn make sure

AUTOSTART="none"

is active (not commented out). Disable the service; the tunnels will be managed from /etc/network/interfaces:

cloud-server# systemctl disable openvpn

Create /etc/openvpn/tap0.conf

# disable encryption; the traffic continues unencrypted beyond the tunnel anyway

auth none

cipher none

dev tap0

mode p2p

port 1194

local 50.60.70.80

proto udp

log /var/log/tap0.log

verb 3

ping 2

ping-restart 10

persist-tun

compress lz4-v2

daemon

and /etc/openvpn/tap1.conf like /etc/openvpn/tap0.conf except

dev tap1

...

port 1195

...

log /var/log/tap1.log
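Since tap1.conf differs from tap0.conf only in the device name, the port and the log file, it can be derived mechanically instead of being edited by hand. The following is a minimal sketch, assuming tap0.conf looks exactly like the one shown above; the function name derive_tap1_conf is hypothetical. On the LAN side the local line differs as well and would need one more expression.

```shell
#!/bin/sh
# Hypothetical helper: reads a tap0 configuration on stdin and writes the
# tap1 variant to stdout. Only the dev, port and log lines are changed.
derive_tap1_conf() {
    sed -e 's/^dev tap0$/dev tap1/' \
        -e 's/^port 1194$/port 1195/' \
        -e 's|^log /var/log/tap0\.log$|log /var/log/tap1.log|'
}

# Usage (as root, on the Cloud Server):
#   derive_tap1_conf < /etc/openvpn/tap0.conf > /etc/openvpn/tap1.conf
```

All other lines pass through unchanged, so later edits to tap0.conf can be carried over by re-running the helper.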

Linux Ethernet Bonding

Using ifupdown for network management on the Cloud Server, modify your /etc/network/interfaces (adjust gateway for your environment):

auto eth0

iface eth0 inet static

address 50.60.70.80

netmask 255.255.255.0

gateway 50.60.70.1

post-up /usr/local/bin/vpn-start

pre-down /usr/local/bin/vpn-stop

Options for the bonding device can be maintained in /etc/modprobe.d/bonding.conf:

options bonding mode=0 miimon=100

mode=0 uses the bonded lines in a round-robin fashion, which should provide both failover and a speed enhancement.

The following two scripts create/destroy the bonding device. Create /usr/local/bin/vpn-start (and chmod +x):

#!/bin/bash

openvpn --config /etc/openvpn/tap0.conf

openvpn --config /etc/openvpn/tap1.conf

ip link add bond0 type bond

ip addr add 10.80.0.1/30 dev bond0

ip link set tap0 master bond0

ip link set tap1 master bond0

ip link set bond0 up mtu 1440

ip route add 192.168.1.0/24 via 10.80.0.2

You may need to adjust the mtu to your environment. Create /usr/local/bin/vpn-stop (and chmod +x):

#!/bin/bash

ip route del 192.168.1.0/24 via 10.80.0.2

ip link set bond0 down

ip link del bond0

pkill 'openvpn'

Firewall

For your firewalling needs you can install FireHOL:

cloud-server# apt install firehol

Leave START_FIREHOL=NO in /etc/default/firehol and instead create /etc/systemd/system/firehol.service

[Unit]

Description=FireHOL Stateful Packet Filtering Firewall

Documentation=man:firehol(1) man:firehol.conf(5)

DefaultDependencies=no

Before=network-pre.target

Wants=network-pre.target

Wants=systemd-modules-load.service local-fs.target

After=systemd-modules-load.service local-fs.target

Conflicts=shutdown.target

Before=shutdown.target

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/usr/sbin/firehol start

ExecStop=/usr/sbin/firehol stop

[Install]

WantedBy=multi-user.target

and enable it

cloud-server# systemctl enable firehol

Create /etc/firehol/firehol.conf:

version 6

server_vpn_ports="udp/1194-1195"

client_vpn_ports="default"

snat4 to 50.60.70.80 outface eth0 dst not 50.60.70.80

interface eth0 web

protection strong

server ssh accept

server vpn accept

# more servers here as per your needs

client all accept

interface bond0 vpn

policy accept

router4 web2vpn inface eth0 outface bond0 dst 192.168.1.0/24,10.80.0.2

client all accept

Activate and Check

Restart the Cloud Server. Check the bonding device:

cloud-server# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: load balancing (round-robin)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: tap0

MII Status: up

Speed: 10 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: aa:04:0b:ea:33:48

Slave queue ID: 0

Slave Interface: tap1

MII Status: up

Speed: 10 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 1e:70:4f:4b:2a:e8

Slave queue ID: 0
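For scripted checks, the status file can be condensed to one line per slave. This is a minimal sketch that assumes the file layout shown above; the function name bond_slave_status is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads /proc/net/bonding/<bond> text on stdin and
# prints one "<slave> <MII status>" line per slave interface.
# The bond's own "MII Status" line is skipped because no slave has been
# seen yet at that point.
bond_slave_status() {
    awk -F': ' '
        /^Slave Interface:/ { slave = $2; next }
        /^MII Status:/ && slave != "" { print slave, $2; slave = "" }
    '
}

# Usage:
#   bond_slave_status < /proc/net/bonding/bond0
```

With both tunnels healthy, each of tap0 and tap1 should be reported as up.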

LAN Side

PPPoE Internet Connections

Consult your modems' documentation to find out how to put them into transparent bridge mode and how to assign their LAN addresses. Using ifupdown for network management on the LAN Gateway Server, add the following to /etc/network/interfaces:

auto eth1

iface eth1 inet static

address 192.168.10.1

netmask 255.255.255.0

network 192.168.10.0

broadcast 192.168.10.255

auto eth2

iface eth2 inet static

address 192.168.11.1

netmask 255.255.255.0

network 192.168.11.0

broadcast 192.168.11.255

Install the PPPoE driver:

lan-server# apt update

lan-server# apt install pppoe pppoeconf

Create the two PPP configuration files, /etc/ppp/peers/dsl1:

plugin rp-pppoe.so eth1

unit 0

user "YourUsername1"

noauth

hide-password

persist

mtu 1492

noipdefault

defaultroute

replacedefaultroute

and /etc/ppp/peers/dsl2:

plugin rp-pppoe.so eth2

unit 1

user "YourUsername2"

noauth

hide-password

persist

mtu 1492

noipdefault

defaultroute

The replacedefaultroute option in /etc/ppp/peers/dsl1 makes this connection the default Internet connection before bonding.

Provide the passwords in /etc/ppp/chap-secrets and /etc/ppp/pap-secrets:

"YourUsername1" * "Password1"

"YourUsername2" * "Password2"

Make sure both files are owned by root and have mode 600 (chmod 600).
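The permissions can be set and verified as follows. This is only a sketch: the helper has_mode_600 is hypothetical, and it relies on GNU stat (the -c option) as shipped with Ubuntu.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if the given file has mode 600.
# Relies on GNU stat, whose -c '%a' prints the octal access mode.
has_mode_600() {
    [ "$(stat -c '%a' "$1")" = "600" ]
}

# Usage (as root):
#   chown root:root /etc/ppp/chap-secrets /etc/ppp/pap-secrets
#   chmod 600 /etc/ppp/chap-secrets /etc/ppp/pap-secrets
#   has_mode_600 /etc/ppp/chap-secrets && echo "chap-secrets ok"
```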

Add the following to the end of /etc/network/interfaces:

auto dsl1

iface dsl1 inet ppp

provider dsl1

auto dsl2

iface dsl2 inet ppp

provider dsl2

This will automatically establish the PPPoE connections defined in the configuration files whose names are given by the provider directives.

The VPN tunnels and the bonding device are managed by two scripts that run when the two PPP connections have come up/gone down. Create /etc/ppp/ip-up.d/bond0 (and chmod +x):

#!/bin/bash

nPpp=`ls -1 /etc/ppp/peers/* | wc -l`

if [[ `ip addr | grep -E 'ppp[0-9]:' | wc -l` -eq $nPpp ]] && \

[[ `ip addr | grep -E 'tap[0-9]:' | wc -l` -eq 0 ]]; then

/usr/local/bin/vpn-start

fi

and /etc/ppp/ip-down.d/bond0 (and chmod +x):

#!/bin/bash

if [[ `ip addr | grep -E 'ppp[0-9]:' | wc -l` -eq 0 ]]; then

/usr/local/bin/vpn-stop

fi

See below for the implementations of the vpn-* scripts.
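The interface counting in the two hook scripts above can be tried out separately from pppd. The sketch below is an alternative formulation, not the scripts' own code: it parses the one-line-per-interface output of ip -o link show, and the function name count_links is hypothetical. Note also that the ip-up script compares against the number of all files in /etc/ppp/peers, so any additional peer file in that directory would presumably keep the condition from becoming true; counting only the dsl* files is one possible guard.

```shell
#!/bin/sh
# Hypothetical helper: reads `ip -o link show` output on stdin and counts
# the interfaces whose name is <prefix> followed by digits (e.g. ppp0).
# With -F': ' the second field is the interface name.
count_links() {
    awk -v prefix="$1" -F': ' '
        $2 ~ ("^" prefix "[0-9]+(@.*)?$") { n++ }
        END { print n + 0 }
    '
}

# Usage:
#   ip -o link show | count_links ppp
#   ip -o link show | count_links tap
#   ls -1 /etc/ppp/peers/dsl* | wc -l    # count only the dsl* peer files
```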

OpenVPN Tunnels

Install OpenVPN as on the Cloud Server. Here, OpenVPN is managed by the scripts that are triggered when the PPPoE connections are established or torn down.

Create /etc/openvpn/tap0.conf

remote 50.60.70.80

auth none

cipher none

dev tap0

mode p2p

port 1194

local 192.168.10.1

proto udp

log /var/log/tap0.log

verb 3

ping 2

ping-restart 10

persist-tun

compress lz4-v2

daemon

and /etc/openvpn/tap1.conf like /etc/openvpn/tap0.conf except

dev tap1

...

port 1195

local 192.168.11.1

...

log /var/log/tap1.log

Linux Ethernet Bonding

/usr/local/bin/vpn-start (chmod +x) creates the VPN tunnels, sets up the bonding device and creates routing table entries that ensure each tunnel's traffic leaves through its own PPPoE connection, as the bonding driver requires:

#!/bin/bash

openvpn --config /etc/openvpn/tap0.conf

ip route add 192.168.10.0/24 dev eth1 scope link table dsl1

ip route add default dev ppp0 table dsl1

ip rule add pref 10 from 192.168.10.0/24 table dsl1

openvpn --config /etc/openvpn/tap1.conf

ip route add 192.168.11.0/24 dev eth2 scope link table dsl2

ip route add default dev ppp1 table dsl2

ip rule add pref 11 from 192.168.11.0/24 table dsl2

ip route flush cache

ip link add bond0 type bond

ip addr add 10.80.0.2/30 dev bond0

ip link set tap0 master bond0

ip link set tap1 master bond0

ip link set bond0 up mtu 1440

/usr/local/bin/gw bond0

The names for the routing tables need to be declared in /etc/iproute2/rt_tables:

...

10 dsl1

11 dsl2

Make sure the numbers are unique within this configuration file.
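The two entries can also be added idempotently from a script. This is a minimal sketch; the function name ensure_rt_table is hypothetical, and it only checks whether the table name is already declared, not whether the number is unique.

```shell
#!/bin/sh
# Hypothetical helper: appends "<id> <name>" to an rt_tables-style file
# unless a table with that name is already declared there.
# Comment lines (starting with #) are ignored during the lookup.
ensure_rt_table() {
    file=$1 id=$2 name=$3
    if ! awk -v n="$name" '$1 !~ /^#/ && $2 == n { found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$id" "$name" >> "$file"
    fi
}

# Usage (as root):
#   ensure_rt_table /etc/iproute2/rt_tables 10 dsl1
#   ensure_rt_table /etc/iproute2/rt_tables 11 dsl2
```

Running the helper repeatedly leaves the file unchanged once the names are present.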

The mtu needs to correspond to the one configured on the Cloud Server.

/usr/local/bin/gw (chmod +x) switches the default gateway:

#!/bin/bash

newGw=$1

if [[ ! $newGw =~ ^ppp[0-9]$ && $newGw != bond0 ]]; then

echo "$0 {ppp[0-9]|bond0}"

exit -1

fi

ip addr show dev $newGw >/dev/null 2>&1

ret=$?

if [[ $ret -ne 0 ]]; then

echo "$newGw is not available"

exit -1

fi

via=0.0.0.0

if [[ $newGw == bond0 ]]; then

via=10.80.0.1

fi

ip route repl default via $via dev $newGw

ip route show

Create /usr/local/bin/vpn-stop (chmod +x):

#!/bin/bash

/usr/local/bin/gw ppp0

ip link set bond0 down

ip link del bond0

pkill 'openvpn'

ip rule del pref 10 from 192.168.10.0/24 table dsl1

ip route del default dev ppp0 table dsl1

ip route del 192.168.10.0/24 dev eth1 scope link table dsl1

ip rule del pref 11 from 192.168.11.0/24 table dsl2

ip route del default dev ppp1 table dsl2

ip route del 192.168.11.0/24 dev eth2 scope link table dsl2

ip route flush cache

The two vpn-* scripts and the gw script can, of course, also be run manually if need be.
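After a manual vpn-start, one way to check that the policy routing rules are in effect is to ask the kernel which device it would use for a given source address. The following is a sketch; the function name route_dev is hypothetical, and it assumes that ip route get prints the outgoing device after the word dev.

```shell
#!/bin/sh
# Hypothetical helper: reads `ip route get ...` output on stdin and prints
# the name of the outgoing device (the word following "dev").
route_dev() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Usage on the LAN Gateway Server, with both PPPoE connections up:
#   ip route get 50.60.70.80 from 192.168.10.1 | route_dev
#   ip route get 50.60.70.80 from 192.168.11.1 | route_dev
```

If the rules with preferences 10 and 11 apply, the first command should name ppp0 and the second ppp1.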

Firewall

Install FireHOL as on the Cloud Server, with the following /etc/firehol/firehol.conf configuration:

version 6

lan="eth0"

web="ppp+"

vpn="bond+"

tcpmss auto "${web}"

masquerade "${web}"

interface "${lan}" lan

policy accept

interface "${web}" web

protection bad-packets

server ident reject with tcp-reset

client all accept

interface "${vpn}" vpn

policy accept

router web2lan inface "${web}" outface "${lan}"

protection bad-packets

server ident reject with tcp-reset

client all accept

router vpn2lan inface "${vpn}" outface "${lan}"

policy accept

Activate and Check

Restart the LAN Server and check the bonding device:

lan-server# cat /proc/net/bonding/bond0

The output should resemble that on the Cloud Server.

If you now navigate to e.g. https://www.whatsmyip.org/ in a browser on the LAN, you should see your Cloud Server's IP address.

You can test your speed improvement e.g. by running

lan-server# wget -4 -O /dev/null http://proof.ovh.net/files/1Gio.dat

Here, the bonded speed is only about 5% less than the sum of the individual lines' speeds.

Failover with Link Balancer

If one of the Internet connections goes down, the bonding device doesn't continue on the remaining connection as one might expect. To prepare for this event, one can set up FireHOL's Link Balancer.

One way to do this is to create a suitable /etc/firehol/link-balancer.conf and schedule /usr/sbin/link-balancer as a cron job that periodically (e.g. every 2 minutes) checks the connections and fails over to what is still available if need be. The following link-balancer.conf makes Internet access continue on the remaining good line if one goes bad:

check_bond0() {

for dev in ppp0 ppp1 bond0; do

/sbin/ip addr show dev $dev >/dev/null 2>&1

ret=$?

if [[ $ret -ne 0 ]]; then

break

fi

done

if [[ $ret -eq 0 ]]; then

/bin/ping -w 8 -c 4 -I 10.80.0.2 10.80.0.1 >/dev/null 2>&1

ret=$?

fi

return $ret

}

gateway bond dev bond0 gw 10.80.0.1 check bond0

gateway dsl1 dev ppp0

gateway dsl2 dev ppp1

table main

default via bond weight 100

fallback via dsl1

fallback via dsl2

The default check for the bonded connection does not seem to detect its state reliably, which is why the custom check_bond0 is used.
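The cron job mentioned above could be set up with an entry in the system crontab format (schedule, user, command). This is a sketch under assumptions: the function name and the file name /etc/cron.d/link-balancer are hypothetical, and it assumes link-balancer needs no arguments when run this way.

```shell
#!/bin/sh
# Hypothetical helper: prints a cron.d entry that runs link-balancer as
# root every 2 minutes.
link_balancer_cron_entry() {
    printf '%s\n' '*/2 * * * * root /usr/sbin/link-balancer'
}

# Usage (as root); the target file name is an assumption:
#   link_balancer_cron_entry > /etc/cron.d/link-balancer
```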

When the bad connection comes back up, Link Balancer will make both connections default gateways, balanced on a per-connection basis. I couldn't revive the bonded connection with reasonable effort, so in these rare cases one will have to manually restart the VPN, potentially on both ends.