I build a bare-metal kubernetes cluster (nothing heavy, just three server) with kubeadm on Debian 9. Like ask by Kubernetes I disable the SWAP :

- swapoff -a

- removing the SWAP line in

/etc/fstab - Adding

vm.swappiness = 0to/etc/sysctl.conf

So, there is no SWAP anymore on my servers.

$ free

total used free shared buff/cache available

Mem: 5082668 3679500 117200 59100 1285968 1050376

Swap: 0 0 0

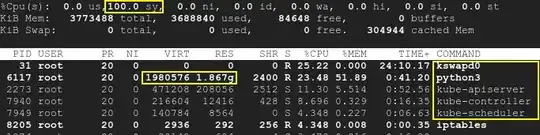

One node is used to run some microservices. When I start to play with all the microservices, they use 10% of RAM each. And the kswapd0 process start to use a lot of CPU.

If I stress a little bit the microservices they stop to respond because kswapd0 use all the CPU. I try to wait if kswapd0 stop his work, but it never happened. Even after 10h hour.

I read a lot of stuff but didn’t find any solution.

I can increase the amount of RAM, but this will not fix my issue.

How do the Kubernetes Masters deal with this kind of problem?

More details:

- Kubernetes version 1.15

- Calico version 3.8

- Debian version 9.6

In advance, thank you for your precious help.

-- Edit 1 --

As requested by @john-mahowald

$ cat /proc/meminfo

MemTotal: 4050468 kB

MemFree: 108628 kB

MemAvailable: 75156 kB

Buffers: 5824 kB

Cached: 179840 kB

SwapCached: 0 kB

Active: 3576176 kB

Inactive: 81264 kB

Active(anon): 3509020 kB

Inactive(anon): 22688 kB

Active(file): 67156 kB

Inactive(file): 58576 kB

Unevictable: 92 kB

Mlocked: 92 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 0 kB

Writeback: 0 kB

AnonPages: 3472080 kB

Mapped: 116180 kB

Shmem: 59720 kB

Slab: 171592 kB

SReclaimable: 48716 kB

SUnreclaim: 122876 kB

KernelStack: 30688 kB

PageTables: 38076 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 2025232 kB

Committed_AS: 11247656 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 0 kB

VmallocChunk: 0 kB

HardwareCorrupted: 0 kB

AnonHugePages: 0 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

DirectMap4k: 106352 kB

DirectMap2M: 4087808 kB