The ipc numActiveHandler is documented here as:

The number of RPC handlers actively servicing requests

I am looking for a more detailed explanation about the significance of that metric.

I am trying to debug a scenario, where numActiveHandler is stuck at 32. I think 32 is a pre-configured max.

During that time, the same regionserver is stuck at 100% cpu consumption. For one of the regions on that reqionserver, the rate of processed read requests look like they are reduced by some pressure, a bottleneck somewhere. The read request latencies also increase about 5X.

What could lead to this behavior ? My intuition is that there has been too many connections to that region server during that time and the bottleneck is before a read request could be processed. Any suggestions where to look next ?

Update

The numActiveHandler metric was added here. The description in that ticket says:

We found [numActiveHandler] is a good metric to measure how busy of a server. If this number is too high (compared to the total number of handlers), the server has risks in getting call queue full.

Update2

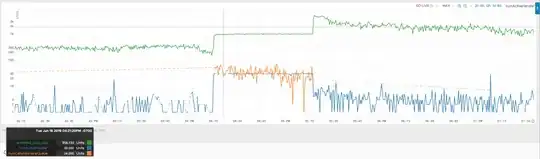

During the same period, another metric hbase.regionserver.ipc.numCallsInGeneralQueue behaves abnormally too.

Attaching a plot showing them together.

Update3

Our hbase version is cdh5-1.2.0_5.11.0 from https://github.com/cloudera/hbase/tree/cdh5-1.2.0_5.11.0

Unfortunately I do not have the numActive<OperationName>Handler metrics :( However, from other existing metrics, I know definitely the culprit is scanNext requests. More about that later.

For the other parameters @spats has suggested, I need to make some investigation and tuning.

hbase.regionserver.handler.count docs mention:

Start with twice the CPU count and tune from there.

Looking at the cpu count, I could set it to 50 instead of default 30.

In any case, the numbers of 30, 50 sounds so small, I am having difficulty grasping their impact. This region server can process 2000 scanNext requests per second. Is that achieved with 30 handlers ? Are these handlers similar to execution threads ? Are they related to the number of parallel requests a regionserver can handle? Is that not such a small number ?

hbase.ipc.server.callqueue.handler.factor is also mentioned here. Its default is 0.1.

With the default value of 30 handler count, that would make 3 queues. I am having difficulty understanding the tradeoff. If I set the hbase.ipc.server.callqueue.handler.factor to 1, each handler will have its own queue. What would be the adverse effects of that configuration ?

Update4

The culprit is scanNext requests going to that region server. However the situation is more complicated.

The following chart includes the scanNext operation count on the same region server. Please note now the y-axis is in log scale.

At the start of the anomaly (4:15PM) there was a rise in the scanNext requests. From what I know about the service that sent the requests, the scanNext request count must have been larger between 4:15PM to 5:20 PM compared to later than 5:20PM. I do not know the exact scanNext request number between 4:15PM and 5:20PM, my unproven back of the envelope calculation suggests that it should be about 5% more.

Because of that, I think this scanNext num ops metric is misleading. It may be the completed scanNext operation count. Between 4:15PM to 5:20PM, I think the regionserver was blocked on something else and could not complete the scanNext operations.

And, whatever that blockage was, I thought we could understand from the anomaly in the numActiveHandler and numCallsInGeneralQueue metrics. But I am having difficulty finding a good architectural document that gives insights about those metrics and what may cause that anomaly.