I have setup ZFS RAID0 for PostgreSQL database. The Storage and Instances are in AWS EC2 and EBS volumes.

NAME SIZE ALLOC FREE EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pgpool 479G 289G 190G - 70% 60% 1.00x ONLINE -

xvdf 59.9G 36.6G 23.3G - 71% 61%

xvdg 59.9G 34.7G 25.2G - 70% 57%

xvdh 59.9G 35.7G 24.2G - 71% 59%

xvdi 59.9G 35.7G 24.2G - 71% 59%

xvdj 59.9G 36.3G 23.6G - 71% 60%

xvdk 59.9G 36.5G 23.4G - 71% 60%

xvdl 59.9G 36.6G 23.3G - 71% 61%

xvdm 59.9G 36.6G 23.2G - 71% 61%

Previously the FRAG is at 80% on most of the devices and we have suffered a heavy write IOPS. As the pool capacity is previously at 75% utilization (400GB), provisioned additional 10GB to each device (400GB + 80GB). Now the FRAG is reduced to 70%. One important metric is that write IOPS is much lesser for the same workload now.

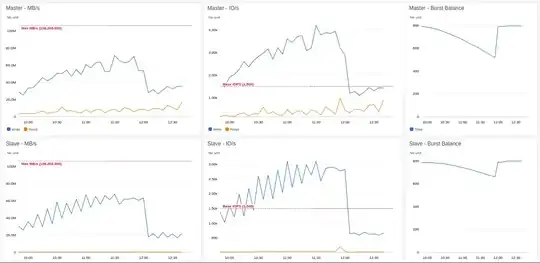

As per the Cloudwatch metrics after increase in EBS size, write IOPS drastically reduced to 1200 - 1400 IOPS from 4000 IOPS for Master PG and reduced to 600 IOPS from 3000 IOPS for Slave PG. I have suspected that this is due to how FRAG amplifies writes as explained in this answer.

We have set recordsize=128K as the compressratio is much better than recordsize=8K. I think due to higher recordsize, FRAG is increased quickly and results in write amplification and heavy write IOPS. Will decreasing the record size would prevent write amplification or any other problem which I am missing?

Database Info

PostgreSQL 9.6

Checkpoint Timeout: 10min

Total Databases: ~1100

Tables / DB: ~1300

Files / DB: ~6000 to 8000 (1300 Tables + Indexes + Toast)

Total Files for All DBs: ~7.7m

In average for every 5 mins, ~60000 to 70000 files (Tables + Indexes + Toast) would be affected (as per postgres checkpoint completion log). So any tuning need to be done regarding the huge number of files? Or atleast what I'm hoping is why the drastic difference in IOPS when additional space is added to the pool?

Also I've seen the same kind of IOPS difference a month back when additional space is added to the pool but getting back to the high IOPS rate after two days. So I guess the same would repeat after two to three days and that's why I'm suspecting Free Space Fragmentation FRAG would be the culprit..!!

ZPOOL Props

ubuntu@ip-10-0-1-59:~$ sudo zpool get all

NAME PROPERTY VALUE SOURCE

pgpool size 479G -

pgpool capacity 60% -

pgpool altroot - default

pgpool health ONLINE -

pgpool guid 1565875598252756833 -

pgpool version - default

pgpool bootfs - default

pgpool delegation on default

pgpool autoreplace off default

pgpool cachefile - default

pgpool failmode wait default

pgpool listsnapshots off default

pgpool autoexpand on local

pgpool dedupditto 0 default

pgpool dedupratio 1.00x -

pgpool free 190G -

pgpool allocated 289G -

pgpool readonly off -

pgpool ashift 0 default

pgpool comment - default

pgpool expandsize - -

pgpool freeing 0 -

pgpool fragmentation 71% -

pgpool leaked 0 -

pgpool multihost off default

pgpool feature@async_destroy enabled local

pgpool feature@empty_bpobj enabled local

pgpool feature@lz4_compress active local

pgpool feature@multi_vdev_crash_dump enabled local

pgpool feature@spacemap_histogram active local

pgpool feature@enabled_txg active local

pgpool feature@hole_birth active local

pgpool feature@extensible_dataset active local

pgpool feature@embedded_data active local

pgpool feature@bookmarks enabled local

pgpool feature@filesystem_limits enabled local

pgpool feature@large_blocks enabled local

pgpool feature@large_dnode enabled local

pgpool feature@sha512 enabled local

pgpool feature@skein enabled local

pgpool feature@edonr enabled local

pgpool feature@userobj_accounting active local

ZFS Props

ubuntu@ip-10-0-1-59:~$ sudo zfs get all

NAME PROPERTY VALUE SOURCE

pgpool type filesystem -

pgpool creation Mon Oct 8 18:45 2018 -

pgpool used 289G -

pgpool available 175G -

pgpool referenced 288G -

pgpool compressratio 5.06x -

pgpool mounted yes -

pgpool quota none default

pgpool reservation none default

pgpool recordsize 128K default

pgpool mountpoint /mnt/PGPOOL local

pgpool sharenfs off default

pgpool checksum on default

pgpool compression lz4 local

pgpool atime off local

pgpool devices on default

pgpool exec on default

pgpool setuid on default

pgpool readonly off default

pgpool zoned off default

pgpool snapdir hidden default

pgpool aclinherit restricted default

pgpool createtxg 1 -

pgpool canmount on default

pgpool xattr sa local

pgpool copies 1 default

pgpool version 5 -

pgpool utf8only off -

pgpool normalization none -

pgpool casesensitivity sensitive -

pgpool vscan off default

pgpool nbmand off default

pgpool sharesmb off default

pgpool refquota none default

pgpool refreservation none default

pgpool guid 571000568545391306 -

pgpool primarycache all default

pgpool secondarycache all default

pgpool usedbysnapshots 0B -

pgpool usedbydataset 288G -

pgpool usedbychildren 364M -

pgpool usedbyrefreservation 0B -

pgpool logbias throughput local

pgpool dedup off default

pgpool mlslabel none default

pgpool sync standard default

pgpool dnodesize legacy default

pgpool refcompressratio 5.07x -

pgpool written 288G -

pgpool logicalused 1.42T -

pgpool logicalreferenced 1.42T -

pgpool volmode default default

pgpool filesystem_limit none default

pgpool snapshot_limit none default

pgpool filesystem_count none default

pgpool snapshot_count none default

pgpool snapdev hidden default

pgpool acltype off default

pgpool context none default

pgpool fscontext none default

pgpool defcontext none default

pgpool rootcontext none default

pgpool relatime off default

pgpool redundant_metadata most local

pgpool overlay off default

ARC

ubuntu@ip-10-0-1-59:~$ cat /proc/spl/kstat/zfs/arcstats

13 1 0x01 96 4608 8003814189 8133508755293587

name type data

hits 4 48883641586

misses 4 1321425301

demand_data_hits 4 15606800719

demand_data_misses 4 786648720

demand_metadata_hits 4 32729793033

demand_metadata_misses 4 278006868

prefetch_data_hits 4 315020107

prefetch_data_misses 4 207366277

prefetch_metadata_hits 4 232027727

prefetch_metadata_misses 4 49403436

mru_hits 4 10685966527

mru_ghost_hits 4 85159850

mfu_hits 4 37807765384

mfu_ghost_hits 4 40046050

deleted 4 960331018

mutex_miss 4 15489677

access_skip 4 17626

evict_skip 4 21812713742

evict_not_enough 4 267500438

evict_l2_cached 4 0

evict_l2_eligible 4 108797848903680

evict_l2_ineligible 4 2000957528064

evict_l2_skip 4 0

hash_elements 4 313401

hash_elements_max 4 769926

hash_collisions 4 587807552

hash_chains 4 11108

hash_chain_max 4 6

p 4 3794526616

c 4 7022898344

c_min 4 1004520576

c_max 4 16072329216

size 4 6944858120

compressed_size 4 3732648960

uncompressed_size 4 20043857408

overhead_size 4 1682603008

hdr_size 4 110128312

data_size 4 3359916544

metadata_size 4 2055335424

dbuf_size 4 246193488

dnode_size 4 943126912

bonus_size 4 230157440

anon_size 4 33360384

anon_evictable_data 4 0

anon_evictable_metadata 4 0

mru_size 4 3718656000

mru_evictable_data 4 1940126208

mru_evictable_metadata 4 224441856

mru_ghost_size 4 1684268032

mru_ghost_evictable_data 4 0

mru_ghost_evictable_metadata 4 1684268032

mfu_size 4 1663235584

mfu_evictable_data 4 1288254464

mfu_evictable_metadata 4 83499008

mfu_ghost_size 4 1506922496

mfu_ghost_evictable_data 4 1391591424

mfu_ghost_evictable_metadata 4 115331072

l2_hits 4 0

l2_misses 4 0

l2_feeds 4 0

l2_rw_clash 4 0

l2_read_bytes 4 0

l2_write_bytes 4 0

l2_writes_sent 4 0

l2_writes_done 4 0

l2_writes_error 4 0

l2_writes_lock_retry 4 0

l2_evict_lock_retry 4 0

l2_evict_reading 4 0

l2_evict_l1cached 4 0

l2_free_on_write 4 0

l2_abort_lowmem 4 0

l2_cksum_bad 4 0

l2_io_error 4 0

l2_size 4 0

l2_asize 4 0

l2_hdr_size 4 0

memory_throttle_count 4 0

memory_direct_count 4 3209752

memory_indirect_count 4 15181019

memory_all_bytes 4 32144658432

memory_free_bytes 4 6916120576

memory_available_bytes 3 6413860864

arc_no_grow 4 0

arc_tempreserve 4 0

arc_loaned_bytes 4 0

arc_prune 4 252427

arc_meta_used 4 3584941576

arc_meta_limit 4 12054246912

arc_dnode_limit 4 1205424691

arc_meta_max 4 12420274128

arc_meta_min 4 16777216

sync_wait_for_async 4 4231322

demand_hit_predictive_prefetch 4 195199007

arc_need_free 4 0

arc_sys_free 4 502260288

zdb -C

ubuntu@ip-10-0-1-59:~$ sudo zdb -C pgpool

MOS Configuration:

version: 5000

name: 'pgpool'

state: 0

txg: 4739409

pool_guid: 1565875598252756833

errata: 0

hostname: 'ip-10-0-1-59'

com.delphix:has_per_vdev_zaps

vdev_children: 8

vdev_tree:

type: 'root'

id: 0

guid: 1565875598252756833

create_txg: 4

children[0]:

type: 'disk'

id: 0

guid: 1507251152420866879

path: '/dev/xvdf1'

whole_disk: 1

metaslab_array: 64

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1425

create_txg: 4

com.delphix:vdev_zap_leaf: 129

com.delphix:vdev_zap_top: 130

children[1]:

type: 'disk'

id: 1

guid: 12461793154748882472

path: '/dev/xvdg1'

whole_disk: 1

metaslab_array: 68

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1424

create_txg: 9

com.delphix:vdev_zap_leaf: 131

com.delphix:vdev_zap_top: 132

children[2]:

type: 'disk'

id: 2

guid: 15169312858460766498

path: '/dev/xvdh1'

whole_disk: 1

metaslab_array: 69

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1427

create_txg: 12

com.delphix:vdev_zap_leaf: 133

com.delphix:vdev_zap_top: 134

children[3]:

type: 'disk'

id: 3

guid: 8533762672700299025

path: '/dev/xvdi1'

whole_disk: 1

metaslab_array: 74

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1422

create_txg: 15

com.delphix:vdev_zap_leaf: 71

com.delphix:vdev_zap_top: 72

children[4]:

type: 'disk'

id: 4

guid: 6612366135198079494

path: '/dev/xvdj1'

whole_disk: 1

metaslab_array: 139

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1428

create_txg: 18

com.delphix:vdev_zap_leaf: 136

com.delphix:vdev_zap_top: 137

children[5]:

type: 'disk'

id: 5

guid: 15947515837120747219

path: '/dev/xvdk1'

whole_disk: 1

metaslab_array: 142

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1421

create_txg: 21

com.delphix:vdev_zap_leaf: 140

com.delphix:vdev_zap_top: 141

children[6]:

type: 'disk'

id: 6

guid: 15626684534009192025

path: '/dev/xvdl1'

whole_disk: 1

metaslab_array: 146

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1426

create_txg: 24

com.delphix:vdev_zap_leaf: 143

com.delphix:vdev_zap_top: 144

children[7]:

type: 'disk'

id: 7

guid: 19815078749163274

path: '/dev/xvdm1'

whole_disk: 1

metaslab_array: 149

metaslab_shift: 27

ashift: 9

asize: 64418480128

is_log: 0

DTL: 1423

create_txg: 27

com.delphix:vdev_zap_leaf: 147

com.delphix:vdev_zap_top: 148

features_for_read:

com.delphix:hole_birth

com.delphix:embedded_data

zfs.conf

ubuntu@ip-10-0-1-59:~$ sudo cat /etc/modprobe.d/zfs.conf

options zfs zfs_arc_max=77147180237

zdb metaslabs & spacemap

From the metaslabs and spacemap info, it look like the metaslabs and spacemaps at end of each vdev have lots of free space (due to latest size expansion) compared with the upper regions. So this correlates with the problem explained here as how the degrade in performance when the metaslabs and spacemap is fragmented.

I think it's better to add mirrors and remove the old vdevs so to promote the new devices as primary stripe. This would help the metaslabs and spacemap as if it's a fresh pool without much fragmentation (??? not sure !!!). Anyway, this would be kept as a last resort, until then I'll look into verifying the problem nature and any tunings needed regarding metaslabs / spacemap from zfs side.

Also to reduce fragmentation, planned to add zfs log device to a seperate device and moving postgres pg_xlog directory to seperate device with ext4 partition.