I'm looking into RAID solutions for a very large file server (70+ TB, ONLY serving NFS and CIFS). I know that running ZFS RAID on top of hardware RAID is generally contraindicated; however, I find myself in an unusual situation.

My personal preference would be to set up large RAID-51 virtual disks, i.e. two mirrored RAID5 sets, with each RAID5 having 9 data + 1 hot spare (so we don't lose TOO much storage space). This eases my administrative paranoia by having the data mirrored on two different drive chassis, while allowing for 1 disk failure in each mirror set before a crisis hits.
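To put a rough number on the space cost of that layout, here is a back-of-envelope sketch. It assumes "9 data" means 9 RAID5 member disks including one disk's worth of parity, and it uses a hypothetical 10 TB disk size; the function name `raid51_capacity` is just for illustration.

```python
def raid51_capacity(members_per_set: int, spares_per_set: int, disk_tb: float) -> dict:
    """Rough capacity estimate for two mirrored RAID5 sets (RAID-51)."""
    if members_per_set < 3:
        raise ValueError("RAID5 needs at least 3 member disks")
    # RAID5 spends one disk's worth of space per set on parity.
    usable_per_set = (members_per_set - 1) * disk_tb
    # Mirroring doubles the raw disk count but not the usable space.
    raw_total = 2 * (members_per_set + spares_per_set) * disk_tb
    return {
        "usable_tb": usable_per_set,
        "raw_tb": raw_total,
        "efficiency": usable_per_set / raw_total,
    }


# Assumed example: 9 RAID5 members + 1 hot spare per chassis, 10 TB disks (hypothetical size).
result = raid51_capacity(members_per_set=9, spares_per_set=1, disk_tb=10.0)
print(result)
```

If "9 data" is instead read as 9 data disks plus a separate parity disk, pass `members_per_set=10` and the usable figure grows by one disk.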

HOWEVER, this question stems from the fact that we have existing hardware RAID controllers (LSI MegaRAID integrated disk chassis + server), licensed ONLY for RAID5 and 6. We also have an existing ZFS file system, which is intended (but not yet configured) to provide HA using RFS-1.

From what I see in the documentation, ZFS does not provide a RAID-51 solution.

The suggestion is to use the hardware RAID to create two equally sized RAID5 virtual disks, one on each chassis. These two RAID5 virtual disks are then presented to their respective servers as /dev/sdx.

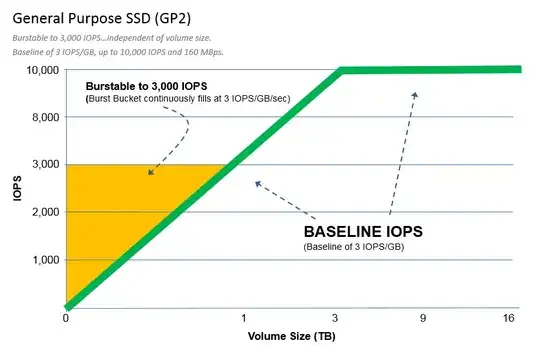

Then use ZFS + RFS-1 to mirror those two virtual disks as an HA mirror set (see image).

Is this a good idea, a bad idea, or just an ugly (but usable) configuration?

Are there better solutions?