We have a MongoDB replication set consisting of three members:

"members" : [

{

"_id" : 6,

"host" : "10.0.0.17:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 7,

"host" : "10.0.0.18:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 8,

"host" : "10.0.0.19:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

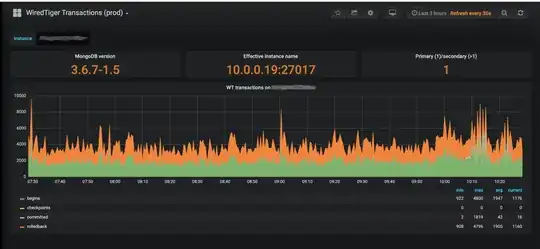

The cluster is under moderate load, not more than few tens of requests per second.

db.serverStatus() on a primary reports that almost all transactions are rolled back:

"transaction begins" : 2625009877,

"transaction checkpoint currently running" : 0,

"transaction checkpoint generation" : 22618,

"transaction checkpoint max time (msecs)" : 5849,

"transaction checkpoint min time (msecs)" : 153,

"transaction checkpoint most recent time (msecs)" : 1869,

"transaction checkpoint scrub dirty target" : 0,

"transaction checkpoint scrub time (msecs)" : 0,

"transaction checkpoint total time (msecs)" : 11017082,

"transaction checkpoints" : 22617,

"transaction checkpoints skipped because database was clean" : 0,

"transaction failures due to cache overflow" : 0,

"transaction fsync calls for checkpoint after allocating the transaction ID" : 22617,

"transaction fsync duration for checkpoint after allocating the transaction ID (usecs)" : 354402,

"transaction range of IDs currently pinned" : 0,

"transaction range of IDs currently pinned by a checkpoint" : 0,

"transaction range of IDs currently pinned by named snapshots" : 0,

"transaction range of timestamps currently pinned" : 8589934583,

"transaction range of timestamps pinned by the oldest timestamp" : 8589934583,

"transaction sync calls" : 0,

"transactions committed" : 30213144,

"transactions rolled back" : 2594972913,

"update conflicts" : 578

Basically, my questions are: What is going on here? Is it normal to have so many transactions and so many rollbacks? If not, what is a root cause and hot to fix it?

Upd.: We upgraded to 3.6.8-2.0 (this was the latest Percona package in 3.6 series) and the problem persisted.