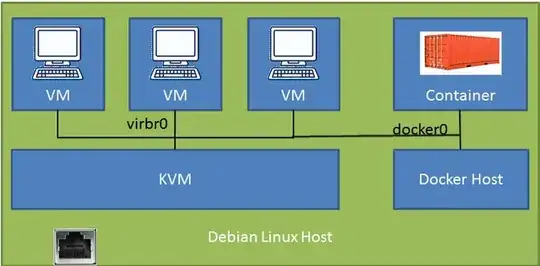

I'm used to implement that using the following setup :

<interface type='bridge'>

<mac address='52:54:00:a9:28:0a'/>

<source bridge='br0'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

opensvc service configuration

[DEFAULT]

docker_daemon_args = --log-opt max-size=1m --storage-driver=zfs --iptables=false

docker_data_dir = /{env.base_dir}/docker

env = PRD

nodes = srv1.acme.com srv2.acme.com

orchestrate = start

id = 4958b24d-4d0f-4c30-71d2-bb820e043a5d

[fs#1]

dev = {env.pool}/{namespace}-{svcname}

mnt = {env.base_dir}

mnt_opt = rw,xattr,acl

type = zfs

[fs#2]

dev = {env.pool}/{namespace}-{svcname}/docker

mnt = {env.base_dir}/docker

mnt_opt = rw,xattr,acl

type = zfs

[fs#3]

dev = {env.pool}/{namespace}-{svcname}/data

mnt = {env.base_dir}/data

mnt_opt = rw,xattr,acl

type = zfs

[ip#0]

netns = container#0

ipdev = br0

ipname = 1.2.3.4

netmask = 255.255.255.224

gateway = 1.2.3.1

type = netns

[container#0]

hostname = {svcname}

image = google/pause

rm = true

run_command = /bin/sh

type = docker

[container#mysvc]

image = mysvc/mysvc:4.1.3

netns = container#0

run_args = -v /etc/localtime:/etc/localtime:ro

-v {env.base_dir}/data/mysvc:/home/mysvc/server/data

type = docker

[env]

base_dir = /srv/{namespace}-{svcname}

pool = data

startup log

2019-01-04 11:27:14,617 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - checking 1.2.3.4 availability

2019-01-04 11:27:18,565 - srv1.acme.com.appprd.mysvc.fs#1 - INFO - mount -t zfs -o rw,xattr,acl data/appprd-mysvc /srv/appprd-mysvc

2019-01-04 11:27:18,877 - srv1.acme.com.appprd.mysvc.fs#2 - INFO - mount -t zfs -o rw,xattr,acl data/appprd-mysvc/docker /srv/appprd-mysvc/docker

2019-01-04 11:27:19,106 - srv1.acme.com.appprd.mysvc.fs#3 - INFO - mount -t zfs -o rw,xattr,acl data/appprd-mysvc/data /srv/appprd-mysvc/data

2019-01-04 11:27:19,643 - srv1.acme.com.appprd.mysvc - INFO - starting docker daemon

2019-01-04 11:27:19,644 - srv1.acme.com.appprd.mysvc - INFO - dockerd -H unix:///var/lib/opensvc/namespaces/appprd/services/mysvc/docker.sock --data-root //srv/appprd-mysvc/docker -p /var/lib/opensvc/namespaces/appprd/services/mysvc/docker.pid --exec-root /var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec --log-opt max-size=1m --storage-driver=zfs --iptables=false --exec-opt native.cgroupdriver=cgroupfs

2019-01-04 11:27:24,669 - srv1.acme.com.appprd.mysvc.container#0 - INFO - docker -H unix:///var/lib/opensvc/namespaces/appprd/services/mysvc/docker.sock run --name=appprd..mysvc.container.0 --detach --hostname mysvc --net=none --cgroup-parent /opensvc.slice/appprd.slice/mysvc.slice/container.slice/container.0.slice google/pause /bin/sh

2019-01-04 11:27:30,965 - srv1.acme.com.appprd.mysvc.container#0 - INFO - output:

2019-01-04 11:27:30,965 - srv1.acme.com.appprd.mysvc.container#0 - INFO - f790e192b5313d7c3450cb257d075620f40c2bad3d69d52c8794eccfe954f250

2019-01-04 11:27:30,987 - srv1.acme.com.appprd.mysvc.container#0 - INFO - wait for up status

2019-01-04 11:27:31,031 - srv1.acme.com.appprd.mysvc.container#0 - INFO - wait for container operational

2019-01-04 11:27:31,186 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - bridge mode

2019-01-04 11:27:31,268 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /sbin/ip link add name veth0pl20321 mtu 1500 type veth peer name veth0pg20321 mtu 1500

2019-01-04 11:27:31,273 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /sbin/ip link set veth0pl20321 master br0

2019-01-04 11:27:31,277 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /sbin/ip link set veth0pl20321 up

2019-01-04 11:27:31,281 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /sbin/ip link set veth0pg20321 netns 20321

2019-01-04 11:27:31,320 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /usr/bin/nsenter --net=/var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec/netns/fc2fa9b2eaa4 ip link set veth0pg20321 name eth0

2019-01-04 11:27:31,356 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /usr/bin/nsenter --net=/var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec/netns/fc2fa9b2eaa4 ip addr add 1.2.3.4/27 dev eth0

2019-01-04 11:27:31,362 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /usr/bin/nsenter --net=/var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec/netns/fc2fa9b2eaa4 ip link set eth0 up

2019-01-04 11:27:31,372 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /usr/bin/nsenter --net=/var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec/netns/fc2fa9b2eaa4 ip route replace default via 1.2.3.1

2019-01-04 11:27:31,375 - srv1.acme.com.appprd.mysvc.ip#0 - INFO - /usr/bin/nsenter --net=/var/lib/opensvc/namespaces/appprd/services/mysvc/docker_exec/netns/fc2fa9b2eaa4 /usr/bin/python3 /usr/share/opensvc/lib/arp.py eth0 1.2.3.4

2019-01-04 11:27:32,534 - srv1.acme.com.appprd.mysvc.container#mysvc - INFO - docker -H unix:///var/lib/opensvc/namespaces/appprd/services/mysvc/docker.sock run --name=appprd..mysvc.container.mysvc -v /etc/localtime:/etc/localtime:ro -v /srv/appprd-mysvc/data/mysvc:/home/mysvc/server/data --detach --net=container:appprd..mysvc.container.0 --cgroup-parent /opensvc.slice/appprd.slice/mysvc.slice/container.slice/container.mysvc.slice mysvc/mysvc:4.1.3

2019-01-04 11:27:37,776 - srv1.acme.com.appprd.mysvc.container#mysvc - INFO - output:

2019-01-04 11:27:37,777 - srv1.acme.com.appprd.mysvc.container#mysvc - INFO - 1616cade9257d0616346841c3e9f0d639a9306e1af6fd750fe70e17903a11011

2019-01-04 11:27:37,797 - srv1.acme.com.appprd.mysvc.container#mysvc - INFO - wait for up status

2019-01-04 11:27:37,833 - srv1.acme.com.appprd.mysvc.container#mysvc - INFO - wait for container operational