I don't have answers for 1-3, only some additional information about InfiniBand (IB) applications that may help you find the answer. I can answer 4, though.

1) What are my connectivity options? The primary purpose is sharing data -- is NFS my only choice, or does FreeBSD have something Infiniband-specific?

2) If it is NFS, does it have to be over IP, or is there some "more intimate" Infiniband-specific protocol?

The key phrase to search for is probably "NFS over RDMA", but a quick search suggests it may not be complete yet for FreeBSD. You could also look into SRP (SCSI RDMA Protocol) or iSER (iSCSI Extensions for RDMA), but I found no references to any applications using these IB protocols.

3) If it is NFS over IP, should I pick UDP or TCP? Any other tuning parameters to squeeze the most from the direct connection?

TCP. According to the FreeBSD IB Wiki, as part of your IB configuration, you're setting connected mode, which is akin to using TCP. At least in the Linux world, when using connected mode, you should only use NFS over TCP and not UDP. NFS over UDP should only be done when using datagram mode, and neither is recommended.

4) What type of cable do I need to connect these directly? To my surprise, searching for "infiniband cable" returned a variety of products with different connectors.

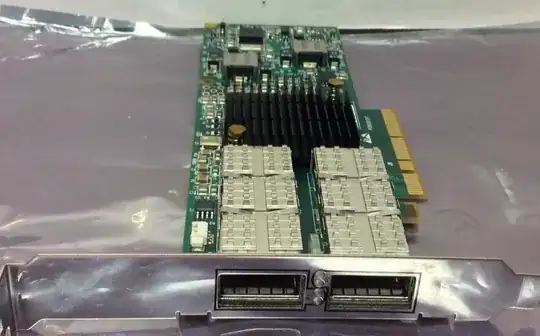

You want a "QDR" cable, which is short for "Quad Data Rate," or 40Gbps. The ConnectX-3 cards do FDR, which is short for "Fourteen Data Rate," or 56Gbps (14Gbps × 4 lanes). Either a QDR or an FDR cable should work fine for your card.
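If it helps to see where the 40 and 56 figures come from, here is a rough Python sketch of the arithmetic. The `LinkRate` class and its field names are made up for illustration. The per-lane signaling rates and line encodings (8b/10b for QDR, 64b/66b for FDR) are my understanding of the IB spec rather than anything specific to your cards, so treat the results as nominal numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkRate:
    """Nominal InfiniBand link rate (illustrative helper, not a real API)."""
    name: str
    lane_gbps: float    # signaling rate per lane, in Gbps
    payload_bits: int   # payload bits per encoded block
    coded_bits: int     # total bits per encoded block
    lanes: int = 4      # QSFP cables carry a 4x link

    @property
    def signaling_gbps(self) -> float:
        # Raw rate on the wire: per-lane rate times number of lanes.
        return self.lane_gbps * self.lanes

    @property
    def data_gbps(self) -> float:
        # Usable rate after subtracting line-encoding overhead.
        return self.signaling_gbps * self.payload_bits / self.coded_bits


# Assumed figures: QDR uses 8b/10b encoding, FDR uses 64b/66b.
QDR = LinkRate("QDR", lane_gbps=10.0, payload_bits=8, coded_bits=10)
FDR = LinkRate("FDR", lane_gbps=14.0625, payload_bits=64, coded_bits=66)

if __name__ == "__main__":
    for rate in (QDR, FDR):
        print(f"{rate.name}: {rate.signaling_gbps:.2f} Gbps signaling, "
              f"{rate.data_gbps:.2f} Gbps after encoding")
```

The point is that the marketing number is the signaling rate, and the usable rate is lower once encoding overhead is taken out, before any protocol overhead on top.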

You can buy Mellanox-branded cables, in which case you know they will work, or you can get an off-brand. I like the 3M brand cables. They're flat cables that support a very sharp bend radius and let you stack a bunch of cables in a very small space without any crosstalk. Used Mellanox QDR cables ought to be really cheap on eBay. I've never bought used, but if you do, you MUST get a cable that has been tested. A bad cable is hard to troubleshoot if you don't have a working system to compare against.

There are two types of cables: passive (copper) and active (fiber). The fiber cables are permanently attached to the QSFP connectors, so you'll have to get them in specific lengths. Copper has a max length of 3 meters, or maybe up to 5 meters; any longer, and you have to use fiber. Don't get a fiber cable until you have everything 100% working on copper. I've seen instances where the copper works fine but the fiber does not, due to an odd failure mode in hardware. Eliminate that possibility until you have experience and an inventory of parts to troubleshoot with.

And now, a few other hints that you didn't ask for, but might help. First, make sure you're running opensm on one of the systems. Think of it like a DHCP server: without it, the two systems will physically link but not pass any data.

Second, some Mellanox cards will operate in either IB mode or Ethernet mode. These are usually the VPI series of cards. I'm not sure if that was an option on ConnectX-2 or if your cards support it. But if they do, it may be easier to run those cards as 40GbE instead of QDR IB and still get good NFS performance. In my experience on Linux, IPoIB delivers only about 20-30% of wire speed. You'll get wire speed with IB protocols like RDMA, or by switching to Ethernet mode.
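To put that 20-30% figure in concrete terms, here is a back-of-the-envelope sketch in Python. The function name is hypothetical, the 40 Gbps input is the nominal QDR rate, and the percentages are just my Linux observations from above, so don't read the result as a FreeBSD benchmark.

```python
def ipoib_estimate_gbps(wire_gbps, low_pct=20, high_pct=30):
    """Return a (low, high) throughput estimate in Gbps for IPoIB.

    The default percentages are the rough 20-30% of wire speed
    observed on Linux; they are an assumption, not a measurement
    of any particular system.
    """
    return wire_gbps * low_pct / 100, wire_gbps * high_pct / 100


if __name__ == "__main__":
    low, high = ipoib_estimate_gbps(40)  # nominal QDR link
    print(f"IPoIB on a 40 Gbps link: roughly {low:g}-{high:g} Gbps")
```

That is still faster than 1GbE by a wide margin, but it shows why RDMA-based protocols or Ethernet mode are worth a look if you want the full link.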