I have had a t2.nano instance running in the N. Virginia region (us-east-1) for almost a year.

Hoping to reduce latency, I have just deployed an AMI created from that instance to a t3.micro instance in the Singapore (ap-southeast-1) region. The Apache server on the instance connects to an RDS database located in us-east-1.

But the t3.micro (in Singapore) responds much more slowly than the old t2.nano (in N. Virginia, USA), even though the old one is more than 4 times farther from my location (Dhaka, Bangladesh).

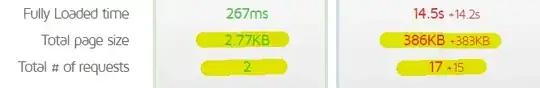

As proof of the slowness, Google's Pagespeed site ranks the old & new servers as 100 & 71 respectively, while Pingdom ranks the 2 servers as 100 & 81 respectively & GTmetrix ranks these as 100 & 79 respectively. Screenshot from GTmetrix comparison of the 2 sites:

EDIT:

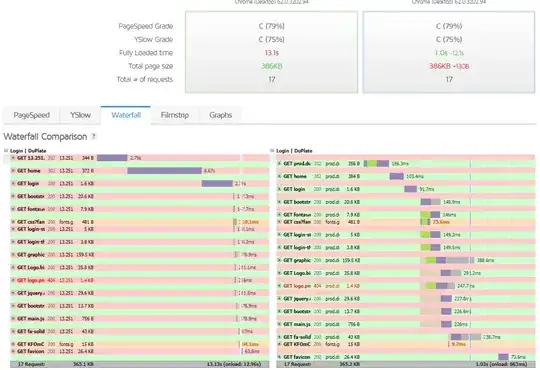

The ranks were mistakenly generated using imbalanced requests, but the following screenshot now depicts that there is really a long waiting time for the t3.micro instance:

This server hosts a lot of other REST APIs (developed using the Laravel framework, for both the web front-end and mobile apps), all of which show the same long delays.
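To separate the network part of a request from the server-side waiting time, something like the following could be run against both servers. This is only a minimal sketch using Python's standard `http.client`; the function name `time_request` and its parameters are my own choices for illustration, not part of any existing setup.

```python
import http.client
import time


def time_request(host, path="/", port=80, use_tls=False, timeout=30):
    """Return (connect_seconds, wait_seconds) for a single GET request.

    connect_seconds: time to open the connection (TCP, plus TLS if enabled).
    wait_seconds: time from sending the request until the response
                  status line and headers have arrived.
    """
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        conn.connect()                  # open the connection explicitly so it can be timed
        connected = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()   # returns once status line and headers are read
        first_byte = time.perf_counter()
        response.read()                 # drain the body before closing
        return connected - start, first_byte - connected
    finally:
        conn.close()
```

Calling it as, say, `time_request("api.example.com", "/", port=443, use_tls=True)` (a placeholder hostname) from the same client for each server should show whether the extra time is spent connecting or waiting on the application.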

I have not added any other configuration to this system, and all other settings (security group, AMI, IAM, RDS, S3, etc.) are exactly the same for both instances.

I understand that the RDS connection might incur a few more milliseconds of delay (and probably some delay due to caching?), but an average delay of more than 10 s feels intolerable.
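As a rough sanity check of that assumption, here is a back-of-the-envelope sketch of how cross-region database round trips would add up if they happen one after another within a single request. The 230 ms round-trip time and the round-trip counts are placeholder values I have not measured, and `added_delay_seconds` is just an illustrative helper.

```python
def added_delay_seconds(round_trips, rtt_ms):
    """Network wait added by sequential database round trips, in seconds."""
    return round_trips * rtt_ms / 1000.0


# Assumed, illustrative values only (NOT measured on these servers).
ASSUMED_RTT_MS = 230  # guessed Singapore <-> N. Virginia round-trip time

for round_trips in (1, 10, 40):
    delay = added_delay_seconds(round_trips, ASSUMED_RTT_MS)
    print(f"{round_trips} round trips -> {delay} s")
```

If these guesses are anywhere near reality, a single query would indeed cost only a fraction of a second, while a request that makes dozens of sequential queries could reach several seconds on its own.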

What can cause such a difference, and what more should be done to avoid it?