When you're talking about performance on a server, there are two main ways of looking at it: the apparent response time (similar to network latency) and the throughput (similar to network bandwidth).

Some versions of Windows Server ship with the Balanced power plan enabled by default. As Jeff pointed out, Windows Server 2008 R2 is one of them. Very few CPUs these days are single-core, so this explanation applies to almost every Windows server you will run into, with the exception of single-core VMs (more on those later).

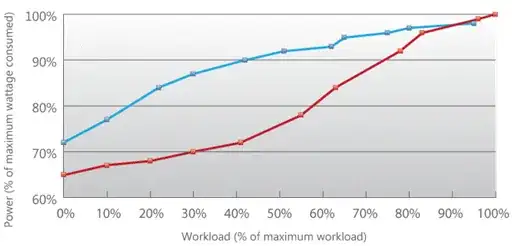

When the Balanced power plan is active, the CPU attempts to throttle back how much power it's using. It does this by disabling half of the CPU cores in a process known as "parking". Only half of the cores are available at a time, so the system uses less power during periods of low traffic. This isn't a problem in and of itself.

What IS a problem is that when the cores are unparked, you've doubled the CPU cycles available to the system and suddenly unbalanced the load, taking it from (for example) 70% utilization to 35% utilization. The system looks at that and, after the burst of traffic is processed, thinks "Hey, I should dial this back a bit to conserve power". And so it does.
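To make the 70% to 35% drop concrete, here's a minimal sketch of the arithmetic. It assumes a hypothetical 8-core box with 4 cores parked and the same amount of work before and after unparking; the `utilization` helper is just an illustration, not anything Windows exposes.

```python
def utilization(busy_core_equivalents: float, active_cores: int) -> float:
    """Average utilization (0.0-1.0) across the cores that are currently active."""
    return busy_core_equivalents / active_cores


# Assumption: 4 of 8 cores are active and running at 70%.
demand = 0.70 * 4                 # 2.8 cores' worth of work

before = utilization(demand, 4)   # only the unparked half is available
after = utilization(demand, 8)    # same work, all cores unparked

print(f"{before:.0%} -> {after:.0%}")  # prints "70% -> 35%"
```

The work didn't change; only the denominator did. That sudden drop is what makes the system think it can park cores again.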

Here's the bad part. To prevent an uneven distribution of heat and power across the CPU cores, the system tends to park the cores that haven't been parked recently. For that to work properly, each core being parked needs to flush everything from its registers and caches (L1, L2 & L3) to some other location (most likely main memory).

As a hypothetical example, let's say you have an 8-core CPU with cores C1-C8, and half of them are currently parked:

- Active: C1, C3, C5, C7

- Parked: C2, C4, C6, C8

When a burst of traffic arrives, all of them become active for some period of time, and then the system parks them as follows:

- Active: C2, C4, C6, C8

- Parked: C1, C3, C5, C7

But doing so carries a good amount of overhead: all of the data in the L1-L3 caches has to be flushed so that programs whose work was dumped out of the CPU pipeline don't run into weird errors.
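The back-and-forth in the C1-C8 example can be sketched as a toy model. This is only an illustration of the rotation described above, not the actual Windows parking algorithm; `next_parked_set` is a made-up helper.

```python
def next_parked_set(cores, previously_parked):
    """Toy rule: park exactly the cores that were NOT parked last time."""
    return [c for c in cores if c not in previously_parked]


cores = [f"C{i}" for i in range(1, 9)]   # C1..C8
parked = ["C2", "C4", "C6", "C8"]        # starting state from the example

history = []
for burst in range(3):
    # A burst arrives and every core is unparked; once the load drops,
    # the system parks the half that was active before the burst.
    parked = next_parked_set(cores, parked)
    history.append(parked)

for parked_set in history:
    print("Parked:", ", ".join(parked_set))
```

Every pass through the loop is a point where the newly parked cores have to give up whatever they had cached.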

There's likely an official name for it, but I like to explain it as CPU thrashing. Basically, the processors spend more time doing busy work moving data around internally than they do fielding work requests.

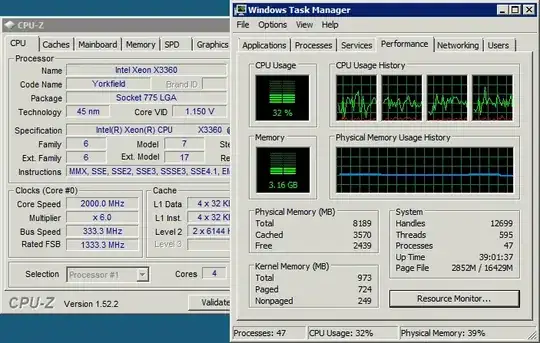

If you have any kind of application that needs low latency for its requests, you need to disable the Balanced power plan. If you're not sure whether this is a problem, do the following:

- Open "Task Manager".

- Click the "Performance" tab.

- Click "Open Resource Monitor".

- Select the "CPU" tab.

- Look at the per-CPU graphs on the right side of the window.

If any of them are being parked, you'll notice that half of them are parked at any given time, then they all fire up, and then the other half get parked. It alternates back and forth. That means the system's CPUs are thrashing.
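If you'd rather check the active power plan from a script than click through the GUI, something along these lines should work. It's a sketch that assumes `powercfg` is on the PATH and prints its usual `Power Scheme GUID: <guid>  (<name>)` line; the helper names are mine, and the two GUIDs are the ones Windows uses for its built-in Balanced and High performance plans.

```python
import re
import subprocess

# GUIDs of the built-in Windows power plans.
BALANCED = "381b4222-f694-41f0-9685-ff5bb260df2e"
HIGH_PERFORMANCE = "8c5e7fda-e8bf-4a96-9a85-a6e23a8c635c"

GUID_RE = re.compile(r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def parse_active_scheme(output):
    """Pull the plan GUID out of `powercfg /getactivescheme` output, or None."""
    match = GUID_RE.search(output)
    return match.group(0).lower() if match else None


def active_scheme_guid():
    """Ask Windows for the active power plan (only works on a Windows host)."""
    result = subprocess.run(
        ["powercfg", "/getactivescheme"],
        capture_output=True, text=True, check=True,
    )
    return parse_active_scheme(result.stdout)


def is_balanced(guid):
    """True if the given plan GUID is the built-in Balanced plan."""
    return guid == BALANCED
```

On a Windows server, `is_balanced(active_scheme_guid())` returning `True` means the Balanced plan is active. Switching to High performance from an elevated prompt is `powercfg /setactive 8c5e7fda-e8bf-4a96-9a85-a6e23a8c635c`.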

Virtual Machines:

This problem is even worse when you're running a virtual machine because there's the additional overhead of the hypervisor. Generally speaking, for a VM to run, the hardware needs to have a physical core free for each of the VM's virtual cores within the same timeslice.

If you have a 16-core piece of hardware, you can run VMs using more than 16 total cores, but only up to 16 virtual CPUs will be eligible for any given timeslice, and the hypervisor must fit all of a VM's cores into that timeslice. They can't be spread out over multiple timeslices. (A timeslice is essentially a set of X CPU cycles. It might be 1,000 or it might be 100k cycles.)

Ex: 16-core hardware with 8 VMs. 6 have 4 virtual CPUs (4C) and 2 have 8 virtual CPUs (8C).

- Timeslice 1: 4x4C

- Timeslice 2: 2x8C

- Timeslice 3: 2x4C + 1x8C

- Timeslice 4: 1x8C + 2x4C

What the hypervisor cannot do is give half of a timeslice's allotment to the first 4 vCPUs of an 8 vCPU VM and then, on the next timeslice, give the rest to the other 4 vCPUs of that VM. It's all or nothing within a timeslice.
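The all-or-nothing rule from the example above can be sketched like this. It's a simplified model of the scheduling as I've described it, not how any particular hypervisor is implemented; `fits` and `schedule` are illustrative names. Each VM is a single number (its vCPU count), so it can't be split across timeslices.

```python
HOST_CORES = 16  # physical cores in the example host


def fits(timeslice, host_cores=HOST_CORES):
    """True if every VM in the timeslice gets all of its vCPUs at once."""
    return sum(timeslice) <= host_cores


# The four timeslices from the example, as lists of per-VM vCPU counts.
schedule = {
    1: [4, 4, 4, 4],   # 4x4C
    2: [8, 8],         # 2x8C
    3: [4, 4, 8],      # 2x4C + 1x8C
    4: [8, 4, 4],      # 1x8C + 2x4C
}

for number, vms in schedule.items():
    print(f"Timeslice {number}: {sum(vms)} vCPUs, fits={fits(vms)}")

# Three 4C VMs plus an 8C VM would need 20 cores, so the 8C VM has to
# wait for a later timeslice rather than run on the 4 cores left over.
overbooked = [4, 4, 4, 8]
print("Overbooked fits:", fits(overbooked))
```

The wider a VM is, the harder it is to find a timeslice with enough free cores, which is why parked cores on the host hurt big VMs the most.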

If you're using Microsoft's Hyper-V, the Balanced power plan could be enabled in the host OS, in which case it propagates down to the guest systems and impacts them as well.

Once you see how this works, it's easy to see how the Balanced power plan causes performance problems and sluggish servers. One of the underlying issues is that an incoming request has to wait for the CPU parking/unparking process to complete before the server can respond, whether that's a database query, a web server request or anything else.

Sometimes, the system will park or unpark CPUs in the middle of a request. In these cases, the request will start into the CPU pipeline, get dumped out of it, and then a different CPU core will pick up the process from there. If it's a hefty enough request, this might happen several times over the course of the request, turning what should have been a 5-second database query into a 15-second one.

The biggest thing you're going to see with the Balanced power plan is that systems feel slower to respond to just about every request you make.