So you're setting up a cluster on AWS and need SSH access between the nodes, correct? You have two options:

The naive one is to add each instance's IP to the Security Group's inbound rules, but that means you'll have to update the SG every time you add a new instance to the cluster (if you ever do). Don't do this; I only mention it for completeness.

Far better is to use the Security Group ID itself as the source of the traffic.

It's important to understand that a SG is not only an inbound filter. Traffic leaving an instance is also effectively tagged with that instance's SG, so you can refer to the originating SG ID as the source in rules of the same or other security groups.
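To make the difference between the two options concrete, here's a rough sketch of what the rules look like in the `IpPermissions` shape that boto3's `authorize_security_group_ingress` accepts. The helper names, the example IPs and the `sg-0123456789abcdef0` ID are all made up for illustration.

```python
def ip_based_rules(instance_ips, port=22):
    """Naive option: one ingress entry per instance IP.

    The list grows (and must be updated) with every new instance.
    """
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [{"CidrIp": f"{ip}/32"}],
        }
        for ip in instance_ips
    ]


def group_based_rule(group_id, port=22):
    """Better option: a single entry whose source is a security group ID.

    Any instance that is a member of that group matches, so nothing
    changes when the cluster grows.
    """
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "UserIdGroupPairs": [{"GroupId": group_id}],
        }
    ]


# Hypothetical values, for illustration only.
per_ip = ip_based_rules(["10.0.0.5", "10.0.0.6", "10.0.0.7"])
per_group = group_based_rule("sg-0123456789abcdef0")
print(len(per_ip), "rules vs", len(per_group), "rule")
```

With three nodes the per-IP list already has three entries, while the group-based version stays at one no matter how many instances join.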

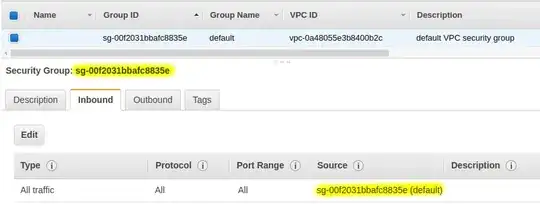

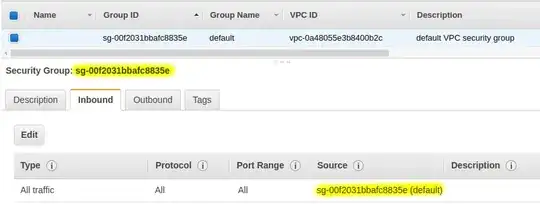

Have a look at the default security group in your VPC. You'll most likely see something like this:

Note that the rule refers to the Security Group ID itself.

With this rule, everything that originates from any host that's a member of your security group will be accepted by all other instances in the group.

In your case you may want to restrict it to SSH, ICMP (if you need ping to work), and any other ports you need.
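If you prefer to script the restricted version instead of clicking through the console, something along these lines should work. It's only a sketch: it assumes boto3 is installed and your AWS credentials and region are already configured, and the function names are my own. The ICMP entry uses `-1` for both type and code, which allows all ICMP rather than just echo requests.

```python
def cluster_ingress_permissions(group_id, allow_ping=True):
    """Build ingress rules that accept traffic only from members of group_id."""
    source = [{"GroupId": group_id}]
    permissions = [
        # SSH between cluster members
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "UserIdGroupPairs": source},
    ]
    if allow_ping:
        # For ICMP, FromPort/ToPort carry the ICMP type/code; -1 means "all".
        permissions.append(
            {"IpProtocol": "icmp", "FromPort": -1, "ToPort": -1,
             "UserIdGroupPairs": source}
        )
    return permissions


def apply_cluster_rules(group_id, allow_ping=True):
    """Add the rules to the group itself, making them self-referencing."""
    import boto3  # imported here so the rule builder works without boto3

    ec2 = boto3.client("ec2")
    ec2.authorize_security_group_ingress(
        GroupId=group_id,
        IpPermissions=cluster_ingress_permissions(group_id, allow_ping),
    )
```

You would call `apply_cluster_rules` with your own security group ID. Add more entries to the list for any other ports the cluster needs.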

Also check the Outbound tab and make sure that it has an entry for All traffic to 0.0.0.0/0 (unless you've got specific security needs), otherwise the instances won't be able to initiate any outbound connections. By default it should be there.
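The same outbound check can be done from a script. The sketch below assumes you pass in one entry from the `SecurityGroups` list that boto3's `describe_security_groups` returns; the function name is made up, and it only looks at the IPv4 "all traffic" rule.

```python
def allows_all_outbound(security_group):
    """Return True if the group has an egress rule for all traffic to 0.0.0.0/0."""
    for rule in security_group.get("IpPermissionsEgress", []):
        # "-1" is how the API represents "all protocols".
        if rule.get("IpProtocol") != "-1":
            continue
        if any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", [])):
            return True
    return False


# Hand-written sample shaped like a describe_security_groups entry.
sample_group = {
    "GroupId": "sg-0123456789abcdef0",  # hypothetical ID
    "IpPermissionsEgress": [
        {"IpProtocol": "-1", "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    ],
}
print(allows_all_outbound(sample_group))
```

If this returns `False` for your group, that's the likely reason the instances can't open outbound connections.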

Hope that helps :)