Note: This is a follow-up question to Is there a way to protect SSD from corruption due to power loss?. I got good info there but it basically centered in three area, "get a UPS", "get better drives", or how to deal with Postgres reliability.

But what I really want to know is whether there is anything I can do to protect the SSD against meta-data corruption especially in old writes. To recap the problem. It's an ext4 filesystem on Kingston consumer-grade SSDs with write-cache enabled and we're seeing these kinds of problems:

- files with the wrong permissions

- files that have become directories (for example, toggle.wav is now a directory with files in it)

- directories that have become files (not sure of content..)

- files with scrambled data

The problem is less with these things happening on data that's being written while the drive goes down, or shortly before. It's a problem but it's expected and I can handle that in other ways.

The bigger surprise and problem is that there is meta-data corruption happening on the disk in areas that were not recently written to (ie, a week or more before).

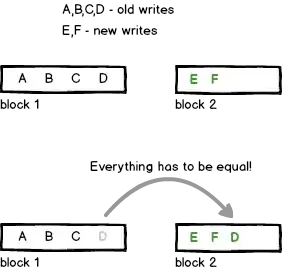

I'm trying to understand how such a thing can happen at the disk/controller level. What's going on? Does the SSD periodically "rebalance" and move blocks around so even though I'm writing somewhere else? Like this:

And then there is a power loss when D is being rewritten. There may be pieces left on block 1 and some on block 2. But I don't know if it works this way. Or maybe there is something else happening..?

In summary - I'd like to understand how this can happen and if there anything I can do to mitigate the problem at the OS level.

Note: "get better SSDs" or "use a UPS" are not valid answers here - we are trying to move in that direction but I have to live with the reality on the ground and find the best outcome with what we have now. If there is no solution with these disks and without a UPS, then I guess that's the answer.

References:

Is post-sudden-power-loss filesystem corruption on an SSD drive's ext3 partition "expected behavior"? This is similar but it's not clear if he was experiencing the kinds of problems we are.

EDIT: I've also been reading issues with ext4 that might have problems with power-loss. Ours are journaled, but I don't know about anything else.

Prevent data corruption on ext4/Linux drive on power loss

http://www.pointsoftware.ch/en/4-ext4-vs-ext3-filesystem-and-why-delayed-allocation-is-bad/