I have been working on Android OS build automation through Jenkins. The repository is massive and the build itself takes a fair amount of time. Therefore, I need it to run on my slave, which has much more power and more threads available for the build, and not fill up the master's disk space to the point where the other devs can't use it.

My first build ran on the master, so I checked the configuration and reviewed the Jenkins best practices:

https://wiki.jenkins.io/display/JENKINS/Jenkins+Best+Practices

> If you have a more complex security setup that allows some users to only configure jobs, but not administer Jenkins, you need to prevent them from running builds on the master node, otherwise they have unrestricted access into the JENKINS_HOME directory. You can do this by setting the executor count to zero. Instead, make sure all jobs run on slaves. This ensures that the jenkins master can scale to support many more jobs, and it also protects builds from modifying potentially sensitive data on $JENKINS_HOME accidentally/maliciously. If you need some jobs to run on the master (e.g. backups of Jenkins itself), use the Job Restrictions Plugin to limit which jobs can be executed there.

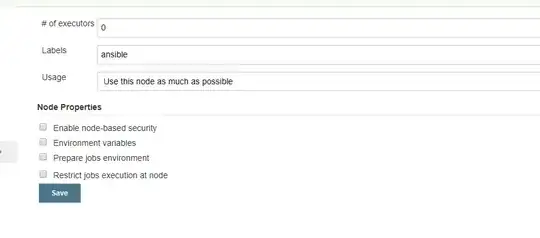

I noticed that we had two executors available on the master and as per the best practices this should be set to zero if you never want to build on the master. I set this down to zero and retried my build.

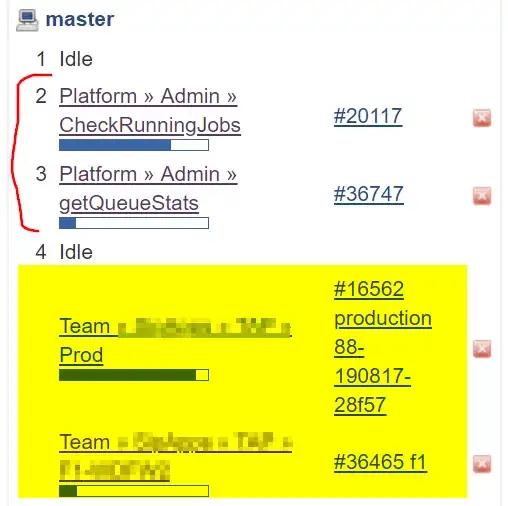

To my surprise, it's still building on the master. Below is my Jenkinsfile, which explicitly says to build on my slave.

    pipeline {
        agent { label 'van-droid' }
        stages {
            stage('Build') {
                steps {
                    echo 'Building..'
                    sh 'make clean'
                    sh 'export USE_CCACHE=1'
                    sh 'export CCACHE_DIR=/nvme/.ccache'
                    sh './FFTools/make.sh'
                }
            }
        }
    }
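
For reference, here is a variant I could use to check which node the steps actually execute on. This is only a sketch: it assumes the standard `NODE_NAME` environment variable that Jenkins sets for each build, and the `echo` text is my own wording. It also moves the ccache settings into an `environment` block, since each `sh` step runs in its own shell and the `export` lines above would not carry over to `make.sh`.

```groovy
pipeline {
    agent { label 'van-droid' }

    environment {
        // Declared once here so every sh step sees them; an `export`
        // inside one sh step is lost when that step's shell exits.
        USE_CCACHE = '1'
        CCACHE_DIR = '/nvme/.ccache'
    }

    stages {
        stage('Build') {
            steps {
                // NODE_NAME is set by Jenkins to the node running this step.
                echo "Building on node: ${env.NODE_NAME}"
                sh 'make clean'
                sh './FFTools/make.sh'
            }
        }
    }
}
```

If the console log prints the slave's name here, the build steps themselves are running on `van-droid`, whatever the executor view on the master shows.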

Anyone have any idea what's going on?