I'm looking for recommendations on the network design for my vSphere cluster, which has HA, FT and all-flash vSAN enabled.

I've played around with vSphere a bit, but I'm new to vSAN and HA/FT. I've Googled and searched here on StackExchange, but I just can't work out where to start with my network design.

To all the vSphere experts out there: if you could offer some guidance or a starting point, that would be much appreciated.

My all-flash vSAN will be configured for RAID-5 (erasure coding).

Hosts: 4x identical ESXi hosts, each with:

- 1x Intel E5-2620v4 CPU

- 64GB RAM (2x 32GB DDR4-2133MHz ECC RAM)

- 1x 200GB Intel DC S3700 Enterprise SATA SSD for cache

- 2x 480GB Micron 5100 Pro Enterprise SATA SSDs for capacity

- Onboard NIC with 4x 1GbE ports

- Add-on NIC with 4x 10GbE ports (Intel X710 on PCIe 3.0 x8)
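For reference, here is a rough tally of what each host brings to the table. This is only a back-of-the-envelope sketch: it assumes vSAN RAID-5 is 3 data + 1 parity erasure coding (which needs at least 4 hosts), counts only the capacity-tier SSDs (the cache SSD doesn't add usable space), and ignores slack space and metadata overhead. The helper names are hypothetical.

```python
# Back-of-the-envelope tally for the hosts listed above (hypothetical helpers).
# Assumption: vSAN RAID-5 = 3 data + 1 parity erasure coding (min. 4 hosts).
# Ignores slack space, metadata, dedupe/compression.

HOSTS = 4
NIC_PORTS_GBPS = {"onboard 1GbE": [1] * 4, "X710 10GbE": [10] * 4}
CAPACITY_SSDS_GB = [480, 480]  # 200 GB cache SSD is not counted as capacity


def per_host_uplink_gbps(ports):
    """Sum the nominal link speed of every physical port on one host."""
    return sum(sum(speeds) for speeds in ports.values())


def raid5_usable_gb(raw_gb, data=3, parity=1):
    """Usable space after 3+1 erasure coding overhead."""
    return raw_gb * data / (data + parity)


uplink = per_host_uplink_gbps(NIC_PORTS_GBPS)
raw_cluster = HOSTS * sum(CAPACITY_SSDS_GB)
usable = raid5_usable_gb(raw_cluster)

print(f"Per-host aggregate uplink bandwidth: {uplink} Gbps")
print(f"Raw capacity tier (cluster): {raw_cluster} GB")
print(f"RAID-5 usable (before slack): {usable:.0f} GB")
```

Note that the bandwidth figure is aggregate port capacity, not what any single traffic type (vSAN, vMotion, FT logging) can use at once.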

Switches:

- 2x 16-port 10GbE L2 switches dedicated to vSphere traffic (Ubiquiti ES-16-XG)

- 1x 24-port 1GbE L3 switch for general traffic (Aruba 2540 24G PoE+)

- 1x 24-port 1GbE L2 switch for general traffic (HPE 1820-24G)
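A quick port-budget check may also help frame the question. This sketch assumes one cable per host NIC port, split evenly across the two switches of each speed for redundancy; the even split is my assumption, not a settled design. It ignores media type and any ports needed for inter-switch links or uplinks to the rest of the network. `port_budget` is a hypothetical helper.

```python
# Hypothetical port-budget check: every host NIC port is cabled, and the
# ports of each speed are split evenly across the two switches of that speed.
# Ignores media type, inter-switch links, and uplinks to the wider network.

HOSTS = 4
PORTS_PER_HOST = {"10GbE": 4, "1GbE": 4}
SWITCH_PORTS = {
    "10GbE": [16, 16],  # 2x Ubiquiti ES-16-XG
    "1GbE": [24, 24],   # Aruba 2540 24G PoE+ and HPE 1820-24G
}


def port_budget(speed):
    """Return (host ports needed, ports used per switch, free ports per switch)."""
    needed = HOSTS * PORTS_PER_HOST[speed]
    per_switch = needed // len(SWITCH_PORTS[speed])
    free = [total - per_switch for total in SWITCH_PORTS[speed]]
    return needed, per_switch, free


for speed in PORTS_PER_HOST:
    needed, per_switch, free = port_budget(speed)
    print(f"{speed}: {needed} host ports, {per_switch} per switch, free: {free}")
```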

What's troubling me is how to design the network for my specific environment, in particular:

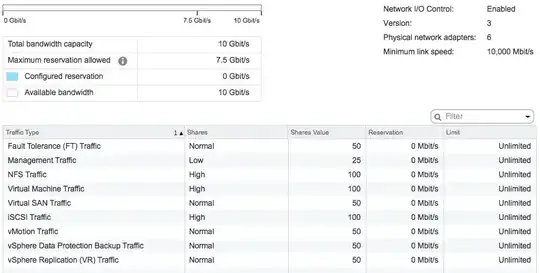

- allocation of NICs (virtual and physical) between vSAN, FT logging, vMotion, etc. traffic

- dedicated or shared NICs?

- multi-NIC vMotion? (I read somewhere that this isn't required since I have 10GbE NICs.)

- should I use NIC teaming, and if so, which "route based on ..." load-balancing policy?

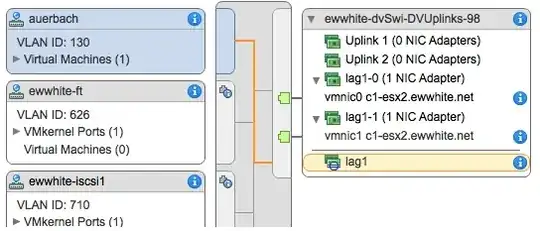

- vSS / vDS / dvPortGroup allocation

I know there are official VMware guides and many blogs with recommended configurations. However, I'm hoping to tap into everyone's knowledge of how best to configure the network, given that each host has 4x 1GbE + 4x 10GbE ports.

Any help would be much appreciated, thanks!