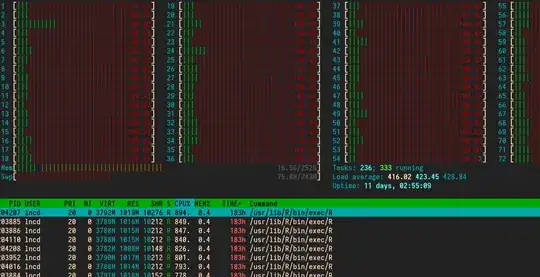

My understanding is high kernel/sy CPU usage is a symptom of network and disk IO or issues of RAM throughput. #516139. However, I suspect in the case below, over allocating threads is giving the kernel (via scheduling) way too much to do and the actual user level process computations are suffering.

We've parallelized building many models in R without realizing that each model building function is openMP capable and will default to distributing itself (to all available cores!?).

- Without already having suspicious/poorly written code to reason about, is there a way to tell sy usage is high because of thread allocation?

- Once this is running is there anyway to to set e.g.

ulimiton an individual process or any other recourse short of killing the top level process?

mpstat

Linux 4.9.0-4-amd64 (rhea.wpic.upmc.edu) 01/19/2018 _x86_64_ (72 CPU)

11:27:42 AM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle

11:27:42 AM all 13.28 0.00 30.09 0.17 0.00 0.03 0.00 0.00 0.00 56.42

iostat

Linux 4.9.0-4-amd64 (rhea.wpic.upmc.edu) 01/19/2018 _x86_64_ (72 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

13.28 0.00 30.16 0.17 0.00 56.40

Device: tps kB_read/s kB_wrtn/s kB_read kB_wrtn

sda 5.90 182.64 532.33 175621086 511871712

sdb 4.26 10.68 1014.29 10268992 975304572

sdc 0.82 14.68 20.13 14111683 19354860

sdd 0.00 0.02 0.00 18100 0

cat /proc/self/mountstats

device skynet:/Volumes/Phillips/ mounted on /Volumes/Phillips with fstype nfs statvers=1.1

opts: rw,vers=3,rsize=65536,wsize=65536,namlen=255,acregmin=3,acregmax=60,acdirmin=30,acdirmax=60,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=xxxxxxx,mountvers=3,mountport=748,mountproto=udp,local_lock=none

age: 960031

caps: caps=0x3fc7,wtmult=512,dtsize=32768,bsize=0,namlen=255

sec: flavor=1,pseudoflavor=1

events: 22290816 111452957 1493156 351729 8727855 16583649 130526167 54024016 266 1063322 0 8965212 14276120 4723 2406702 480455 238 1439836 0 615 53950807 7 0 0 0 0 0

bytes: 1584954051456 218684872379 0 0 742185394287 219176285117 181264042 53636171

RPC iostats version: 1.0 p/v: 100003/3 (nfs)

xprt: tcp 1017 1 75 0 0 66894351 66887373 6904 256266328938 0 802 1887233163 595159288

per-op statistics

NULL: 0 0 0 0 0 0 0 0

GETATTR: 22290802 22290914 0 3154213512 2496568872 18446744073371231314 88830744 118185897

SETATTR: 5616 5618 0 942564 808704 122600 893047 1025591

LOOKUP: 16586987 16586993 0 3230313244 3836903464 18446744073421412542 29327650 31652035

ACCESS: 5630423 5630439 0 810455208 675650520 2233531 21149691 23526686

READLINK: 60834 60834 0 9245324 9267896 269 957051 958788

READ: 11461667 11461844 0 1688228580 743652637248 160174235 1277881121 1438304121

WRITE: 4246754 4259238 220 220002658844 679480640 30785630990 5061286597 35853150454

CREATE: 7464 7467 0 1485604 1970496 801177 746707 1551420

MKDIR: 83 83 0 16296 21912 1749 1164 2986

SYMLINK: 30 30 0 8504 7920 0 16 34

MKNOD: 0 0 0 0 0 0 0 0

REMOVE: 9276 9278 0 1742408 1335744 143237 439704 583661

RMDIR: 78 78 0 13080 11232 0 68 78

RENAME: 908 908 0 214236 236080 2906 27182 30095

LINK: 0 0 0 0 0 0 0 0

READDIR: 204340 204340 0 32694564 6032970656 42323 1722666 1771971

READDIRPLUS: 6343408 6343410 0 1040350176 31022488528 1465418 136921691 138608729

FSSTAT: 2834 2834 0 388096 476112 67600 532404 600234

FSINFO: 2 2 0 224 328 0 0 0

PATHCONF: 1 1 0 112 140 0 0 0

COMMIT: 35880 35964 1 5029968 5453760 41064204 31974116 73123499

edit:

This situation exists because openblas defaults to parallel computations. see