This issue has been driving me nutty for days now! I recently bonded the eth0/eth1 interfaces on a few Linux servers into bond0 with the following configs (same on all systems):

DEVICE=bond0

ONBOOT=yes

BONDING_OPTS="miimon=100 mode=4 xmit_hash_policy=layer3+4 lacp_rate=1"

TYPE=Bond0

BOOTPROTO=none

DEVICE=eth0

ONBOOT=yes

SLAVE=yes

MASTER=bond0

HOTPLUG=no

TYPE=Ethernet

BOOTPROTO=none

DEVICE=eth1

ONBOOT=yes

SLAVE=yes

MASTER=bond0

HOTPLUG=no

TYPE=Ethernet

BOOTPROTO=none

Here you can see the bonding status:

Ethernet Channel Bonding Driver: v3.6.0 (September 26, 2009)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer3+4 (1)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

802.3ad info

LACP rate: fast

Aggregator selection policy (ad_select): stable

Active Aggregator Info:

Aggregator ID: 3

Number of ports: 2

Actor Key: 17

Partner Key: 686

Partner Mac Address: d0:67:e5:df:9c:dc

Slave Interface: eth0

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:25:90:c9:95:74

Aggregator ID: 3

Slave queue ID: 0

Slave Interface: eth1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 00:25:90:c9:95:75

Aggregator ID: 3

Slave queue ID: 0
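One thing worth confirming in the status above is that both slaves joined the active aggregator (ID 3 here). Below is a minimal Python sketch of that check. It assumes the text has the layout shown above (on these systems it would normally be read from /proc/net/bonding/bond0, which is my assumption, not something shown in the output), and the function name `slaves_in_active_aggregator` is made up for illustration.

```python
import re

def slaves_in_active_aggregator(status_text):
    """Return (active_id, {slave: aggregator_id}) parsed from bonding status text."""
    active_id = None
    slaves = {}
    current = None  # name of the slave section being read, if any
    for line in status_text.splitlines():
        line = line.strip()
        m = re.match(r"Slave Interface:\s*(\S+)", line)
        if m:
            current = m.group(1)
            continue
        m = re.match(r"Aggregator ID:\s*(\d+)", line)
        if m:
            if current is None:
                # Seen before any slave section: the "Active Aggregator Info" entry
                active_id = int(m.group(1))
            else:
                slaves[current] = int(m.group(1))
    return active_id, slaves

# Trimmed copy of the status output above, used as sample input
sample = """Active Aggregator Info:
Aggregator ID: 3
Slave Interface: eth0
Aggregator ID: 3
Slave Interface: eth1
Aggregator ID: 3
"""
active, per_slave = slaves_in_active_aggregator(sample)
for name, agg in per_slave.items():
    print(name, "in active aggregator" if agg == active else "NOT in active aggregator")
```

If a slave reported a different aggregator ID than the active one, it would not be carrying traffic for the bond, which is not the case here.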

And the Ethtool outputs:

Settings for bond0:

Supported ports: [ ]

Supported link modes: Not reported

Supported pause frame use: No

Supports auto-negotiation: No

Advertised link modes: Not reported

Advertised pause frame use: No

Advertised auto-negotiation: No

Speed: 2000Mb/s

Duplex: Full

Port: Other

PHYAD: 0

Transceiver: internal

Auto-negotiation: off

Link detected: yes

Settings for eth0:

Supported ports: [ TP ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supported pause frame use: Symmetric

Supports auto-negotiation: Yes

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: Symmetric

Advertised auto-negotiation: Yes

Speed: 1000Mb/s

Duplex: Full

Port: Twisted Pair

PHYAD: 1

Transceiver: internal

Auto-negotiation: on

MDI-X: Unknown

Supports Wake-on: pumbg

Wake-on: g

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

Settings for eth1:

Supported ports: [ TP ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supported pause frame use: Symmetric

Supports auto-negotiation: Yes

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: Symmetric

Advertised auto-negotiation: Yes

Speed: 1000Mb/s

Duplex: Full

Port: Twisted Pair

PHYAD: 1

Transceiver: internal

Auto-negotiation: on

MDI-X: Unknown

Supports Wake-on: pumbg

Wake-on: d

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

The servers are both connected to the same Dell PCT 7048 switch, with both ports for each server added to its own dynamic LAG and set to access mode. Everything looks OK, right? And yet, here are the results of an iperf test from one server to the other, with 2 threads:

------------------------------------------------------------

Client connecting to 172.16.8.183, TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 172.16.8.180 port 14773 connected with 172.16.8.183 port 5001

[ 3] local 172.16.8.180 port 14772 connected with 172.16.8.183 port 5001

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 561 MBytes 471 Mbits/sec

[ 3] 0.0-10.0 sec 519 MBytes 434 Mbits/sec

[SUM] 0.0-10.0 sec 1.05 GBytes 904 Mbits/sec

Clearly both ports are being used, but neither gets anywhere close to 1 Gbps, which is what each of them managed individually before bonding. I've tried all sorts of different bonding modes, xmit hash types, MTU sizes, etc., but I just cannot get the individual ports to exceed 500 Mbits/sec. It's almost as if the bond itself is being limited to 1G somewhere! Does anyone have any ideas?
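To double-check that the two iperf streams above really should land on different slaves, here is a small Python sketch of the layer3+4 transmit hash. It assumes the formula given in the kernel bonding documentation for drivers of this generation, ((source port XOR dest port) XOR ((source IP XOR dest IP) AND 0xffff)) modulo slave count; newer kernels hash differently, and the function name `layer34_slave` is mine, not the driver's.

```python
import ipaddress

def layer34_slave(src_ip, dst_ip, src_port, dst_port, slave_count=2):
    """Slave index under the (assumed) older layer3+4 formula from the bonding docs."""
    ip_xor = int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    return ((src_port ^ dst_port) ^ (ip_xor & 0xFFFF)) % slave_count

# The two client source ports from the iperf run above, both going to port 5001
for sport in (14773, 14772):
    idx = layer34_slave("172.16.8.180", "172.16.8.183", sport, 5001)
    print(f"source port {sport} -> slave index {idx}")
```

Under that assumption the two streams hash to different slave indexes, which matches both ports showing traffic. The index is only a position in the slave list; which physical interface it maps to is not something this sketch can tell.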

Addition 1/19: Thanks for the comments and questions. I'll try to answer them here, as I am still very interested in maximizing the performance of these servers. First, I cleared the interface counters on the Dell switch and then let it serve production traffic for a bit. Here are the counters for the 2 interfaces making up the bond of the sending server:

| Port | InTotalPkts | InUcastPkts | InMcastPkts | InBcastPkts |
|---|---|---|---|---|
| Gi1/0/9 | 63113512 | 63113440 | 72 | 0 |
| Gi1/0/44 | 61904622 | 61904552 | 48 | 22 |

| Port | OutTotalPkts | OutUcastPkts | OutMcastPkts | OutBcastPkts |
|---|---|---|---|---|
| Gi1/0/9 | 55453195 | 55437966 | 6075 | 9154 |
| Gi1/0/44 | 53780693 | 53747972 | 48 | 32673 |
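As a quick sanity check on how evenly these counters split across the two ports, here is a short Python sketch that computes each port's share of the total packets in each direction. The numbers are copied from the switch counters above; note that these are packet counts, not bytes, so they only approximate the bandwidth split.

```python
# Total packet counters per switch port, copied from the output above
counters = {
    "Gi1/0/9": {"in": 63113512, "out": 55453195},
    "Gi1/0/44": {"in": 61904622, "out": 53780693},
}

def share(direction):
    """Fraction of all packets in the given direction seen on each port."""
    total = sum(c[direction] for c in counters.values())
    return {port: c[direction] / total for port, c in counters.items()}

for direction in ("in", "out"):
    for port, frac in share(direction).items():
        print(f"{direction:>3} {port:<9} {frac:.1%}")
```

Both directions come out within about one percentage point of an even 50/50 split.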

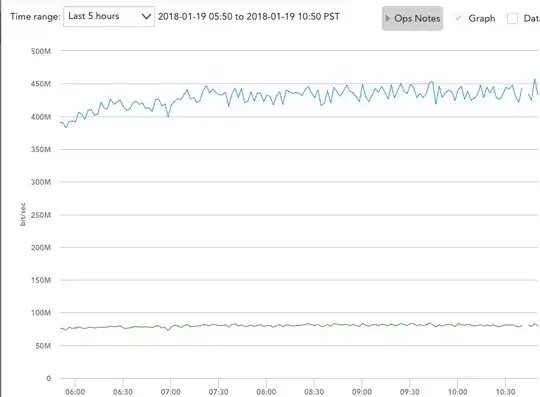

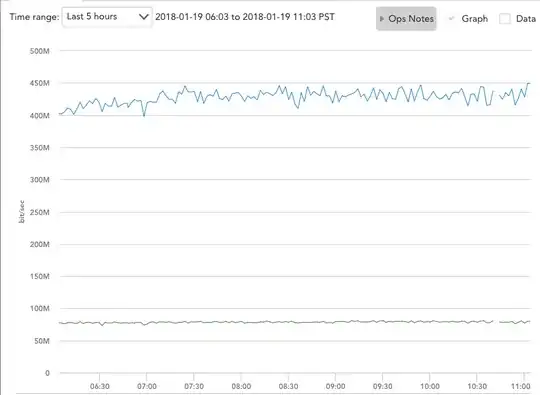

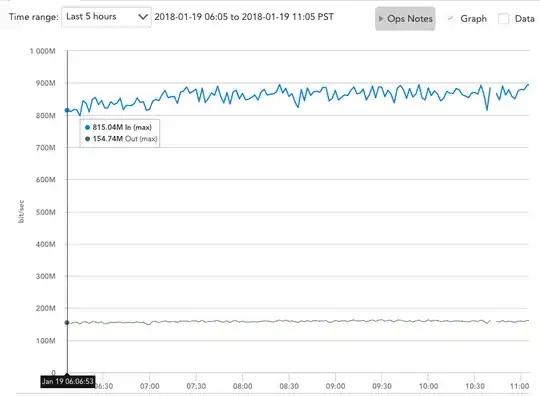

It seems like the traffic is being perfectly load balanced - but the bandwidth graphs still show almost exactly 500mbps per interface, when rx and tx are combined:

I can also say with certainty that, when it is serving production traffic, the server is constantly pushing for more bandwidth and is communicating with multiple other servers at the same time.

Edit #2 1/19: Zordache, you made me think that maybe the iperf tests were being limited by the receiving side using only 1 port and therefore only 1 interface, so I ran 2 iperf instances on server1 concurrently and ran "iperf -s" on server2 and server3. I then ran iperf tests from server1 to servers 2 and 3 at the same time:

iperf -c 172.16.8.182 -P 2

------------------------------------------------------------

Client connecting to 172.16.8.182, TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 172.16.8.225 port 2239 connected with 172.16.8.182 port 5001

[ 3] local 172.16.8.225 port 2238 connected with 172.16.8.182 port 5001

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 234 MBytes 196 Mbits/sec

[ 3] 0.0-10.0 sec 232 MBytes 195 Mbits/sec

[SUM] 0.0-10.0 sec 466 MBytes 391 Mbits/sec

iperf -c 172.16.8.183 -P 2

------------------------------------------------------------

Client connecting to 172.16.8.183, TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 3] local 172.16.8.225 port 5565 connected with 172.16.8.183 port 5001

[ 4] local 172.16.8.225 port 5566 connected with 172.16.8.183 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 287 MBytes 241 Mbits/sec

[ 4] 0.0-10.0 sec 292 MBytes 244 Mbits/sec

[SUM] 0.0-10.0 sec 579 MBytes 484 Mbits/sec
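To total the two concurrent runs without doing it by hand, here is a minimal Python sketch that pulls the bandwidth figure out of each [SUM] line and adds them up. It assumes the iperf output format shown above and only handles values reported in Mbits/sec; the helper name `total_mbits` is made up for illustration.

```python
import re

# Matches the bandwidth figure on an iperf "[SUM]" line reported in Mbits/sec
SUM_LINE = re.compile(r"\[SUM\].*?([\d.]+)\s+Mbits/sec")

def total_mbits(outputs):
    """Add up the [SUM] bandwidth (Mbits/sec) across several iperf outputs."""
    total = 0.0
    for text in outputs:
        for match in SUM_LINE.finditer(text):
            total += float(match.group(1))
    return total

# The two [SUM] lines from the concurrent runs above
run_182 = "[SUM] 0.0-10.0 sec 466 MBytes 391 Mbits/sec"
run_183 = "[SUM] 0.0-10.0 sec 579 MBytes 484 Mbits/sec"
print(total_mbits([run_182, run_183]), "Mbits/sec combined")
```

For these two runs the combined figure is 875 Mbits/sec.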

Both SUMs added together still won't go over 1 Gbps! As for your other question, my port-channels are set up with just the following 2 lines:

hashing-mode 7

switchport access vlan 60

Hashing-mode 7 is Dell's "Enhanced Hashing". Dell doesn't say specifically what it does, but I have tried various combos of the other 6 modes. The full list is:

Hash Algorithm Type

1 - Source MAC, VLAN, EtherType, source module and port Id

2 - Destination MAC, VLAN, EtherType, source module and port Id

3 - Source IP and source TCP/UDP port

4 - Destination IP and destination TCP/UDP port

5 - Source/Destination MAC, VLAN, EtherType, source MODID/port

6 - Source/Destination IP and source/destination TCP/UDP port

7 - Enhanced hashing mode

If you have any suggestions, I am happy to try the other modes again, or change the configurations on my port-channel.