Our Oracle server (version 12c) runs on a Red Hat 6.7 machine with 128 GB of RAM.

The only database on it is currently about 60 GB in size.

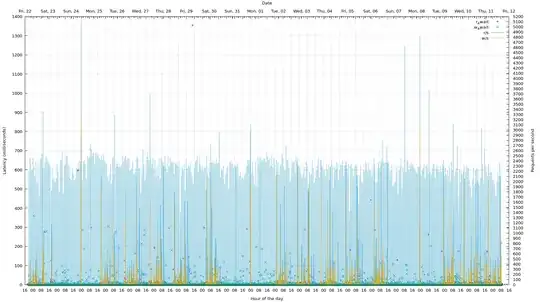

Yet, we see plenty of disk reads -- on the device where the database is stored -- in the iostat-output (shown in blue). There are some writes (shown in yellow) too, of course:

The writes to permanent storage make sense, because we do modify the data from time to time. The reads do not: the entire database would fit in RAM twice over. If it were all cached, we could keep the data files on a USB stick and still get good read times.

Which parts of the Oracle configuration should we examine and tune so that the server makes use of all the available RAM?

Update: we will look at the INMEMORY settings (thanks, @lenniey). However, it would seem that the INMEMORY parameters only come into play when the server, because of a RAM shortage, has to decide what to drop from memory and re-read later. In our case nothing should ever need to be dropped from memory, because there is room for everything. And yet some data is evidently being read from disk again and again...