I would like to propose two different innovative approaches, either of which is suitable when both carrier-grade performance and future scalability are requirements. Both methods should alleviate your performance and VLAN limitation issues.

- SR-IOV (Hardware dependent) Approach

- OvS+DPDK Approach

SR-IOV Approach:

You could use SR-IOV, provided that you have an SR-IOV-capable network card. You can enable SR-IOV in Hyper-V Manager, from the Virtual Switch Manager.

This should theoretically give you near-native NIC performance thanks to the VMBus bypass. Be aware, however, that this method relies on a hardware dependency, which can go against some of the key benefits of NFV and virtualization. For this reason I would also suggest the next approach :).

I have also listed supported NICs at the bottom of this answer.

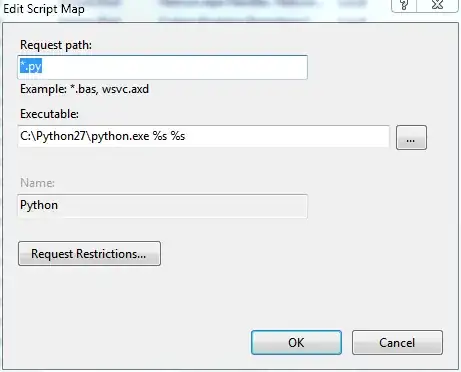

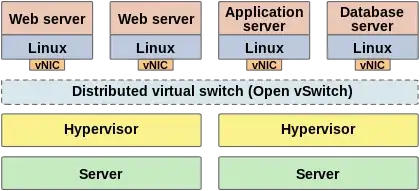

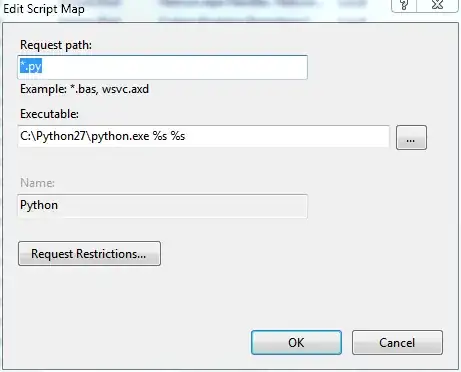

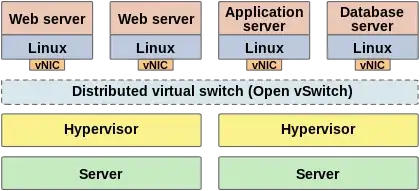
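
The Hyper-V Manager steps above can also be scripted. Below is a minimal sketch in Python that only builds the PowerShell commands as strings, so they can be reviewed before being run in an elevated PowerShell session on the host. The cmdlets (`Get-NetAdapterSriov`, `New-VMSwitch -EnableIov`, `Set-VMNetworkAdapter -IovWeight`) are the standard Windows ones, but the adapter, switch and VM names are placeholders I made up, and the helper function itself is hypothetical. Note that Hyper-V only lets you turn SR-IOV on when the virtual switch is created, which is why the sketch creates a new switch rather than modifying an existing one.

```python
from typing import List


def sriov_powershell_commands(adapter: str, switch: str, vm: str) -> List[str]:
    """Return, in order, the PowerShell commands for an SR-IOV-backed switch.

    All three arguments are placeholder names chosen by the caller.
    """
    def q(value: str) -> str:
        # Single-quote for PowerShell; a literal ' is escaped by doubling it.
        return "'" + value.replace("'", "''") + "'"

    return [
        # 1. Report whether the physical adapter (and platform) supports SR-IOV.
        f"Get-NetAdapterSriov -Name {q(adapter)}",
        # 2. Create an external virtual switch with SR-IOV enabled.
        f"New-VMSwitch -Name {q(switch)} -NetAdapterName {q(adapter)} -EnableIov $true",
        # 3. Ask Hyper-V to assign a virtual function to the VM's network adapter(s).
        f"Set-VMNetworkAdapter -VMName {q(vm)} -IovWeight 100",
    ]


if __name__ == "__main__":
    for command in sriov_powershell_commands("Ethernet 2", "SriovSwitch", "vm01"):
        print(command)
```

An `IovWeight` of 0 disables SR-IOV for that adapter again, so the same pattern can be used to fall back to the regular synthetic path.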

OvS + DPDK Approach:

The next method I would suggest is to take over the functionality of the Hyper-V switch while also providing a considerable boost to data-plane performance, by enabling Open vSwitch (OvS) on the VMM/host layer. This virtualizes the switch layer and provides extra functionality such as distributed switching for scaling beyond a single system. This can be extremely useful to implement at an early stage rather than later, as it greatly reduces scaling complexity and gives you a modern infrastructure setup (your friends and colleagues will be in awe)!

Next is the DPDK element. DPDK provides userspace Poll Mode Drivers (PMDs) that bypass the slow, interrupt-driven Linux networking stack (which was not designed with virtualization in mind). There's plenty of documentation out there on the web about DPDK and OvS + DPDK.
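
To give a feel for what an OvS + DPDK setup involves, here is a minimal sketch that builds the usual `ovs-vsctl` commands from the Open vSwitch DPDK documentation. It assumes a Linux host running an OvS build with DPDK support, hugepages already configured, and the NIC already bound to a DPDK-compatible driver; none of that is covered here. The bridge name, port name and PCI address are placeholders, and the helper function is hypothetical.

```python
import shlex
from typing import List


def ovs_dpdk_commands(bridge: str, port: str, pci_address: str) -> List[List[str]]:
    """Return the ovs-vsctl invocations for a userspace (DPDK) bridge and port."""
    return [
        # 1. Tell ovs-vswitchd to initialise DPDK.
        ["ovs-vsctl", "--no-wait", "set", "Open_vSwitch", ".",
         "other_config:dpdk-init=true"],
        # 2. Create a bridge on the userspace datapath instead of the kernel one.
        ["ovs-vsctl", "add-br", bridge, "--",
         "set", "bridge", bridge, "datapath_type=netdev"],
        # 3. Attach the physical NIC, identified by PCI address, as a DPDK port.
        ["ovs-vsctl", "add-port", bridge, port, "--",
         "set", "Interface", port, "type=dpdk",
         f"options:dpdk-devargs={pci_address}"],
    ]


if __name__ == "__main__":
    # Placeholder values; substitute your own bridge, port and PCI address.
    for cmd in ovs_dpdk_commands("br0", "dpdk-p0", "0000:01:00.0"):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Keeping each command as an argument list means it can be passed straight to `subprocess.run` later without worrying about shell quoting.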

By limiting IRQs with the PMD and bypassing the Linux kernel network stack, you should gain a considerable jump in VM NIC performance while also gaining functionality that gives you better control of the virtual infrastructure. This is the way modern networks are being deployed right now.
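
As a rough illustration of why polling helps, here is a toy cost model. It is not a benchmark: the cost units are invented, the interrupt path is modelled as a worst case of one IRQ per packet (real kernels coalesce interrupts, so the real gap is smaller), and the poll path is modelled as a loop that drains the receive queue in bursts of up to 32 packets. All function and parameter names are my own.

```python
import math
from typing import List


def interrupt_driven_cost(arrivals: List[int], irq_cost: int,
                          per_packet_cost: int) -> int:
    """Worst case: every packet pays for its own interrupt plus processing."""
    packets = sum(arrivals)
    return packets * (irq_cost + per_packet_cost)


def poll_mode_cost(arrivals: List[int], poll_cost: int,
                   per_packet_cost: int, burst_size: int = 32) -> int:
    """Poll loop: the fixed cost is paid once per burst, not once per packet."""
    total = 0
    for pending in arrivals:
        # The loop polls at least once per tick, even when the queue is empty.
        polls = max(1, math.ceil(pending / burst_size))
        total += polls * poll_cost + pending * per_packet_cost
    return total


if __name__ == "__main__":
    # Packets arriving in three consecutive ticks (made-up numbers).
    arrivals = [64, 0, 10]
    print("interrupt-driven:", interrupt_driven_cost(arrivals, irq_cost=10, per_packet_cost=1))
    print("poll mode:       ", poll_mode_cost(arrivals, poll_cost=2, per_packet_cost=1))
```

The trade-off the model hides is that a poll loop keeps a CPU core busy even when no traffic arrives, so you are spending cores to buy throughput and latency.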

SR-IOV supported NICs:

- Intel® Ethernet Converged Network Adapter X710 Series
  - Intel® Ethernet Converged Network Adapter X710-DA2
  - Intel® Ethernet Converged Network Adapter X710-DA4
- Intel® Ethernet Converged Network Adapter XL710 Series
  - Intel® Ethernet Converged Network Adapter XL710-QDA2
  - Intel® Ethernet Converged Network Adapter XL710-QDA1
- Intel® Ethernet Controller X540 Family
  - Intel® Ethernet Controller X540-AT1
  - Intel® Ethernet Controller X540-AT2
- Intel® Ethernet Converged Network Adapter X540 Family
  - Intel® Ethernet Converged Network Adapter X540-T1
  - Intel® Ethernet Converged Network Adapter X540-T2
- Intel® 82599 10 Gigabit Ethernet Controller Family
  - Intel® Ethernet 82599EB 10 Gigabit Ethernet Controller
  - Intel® Ethernet 82599ES 10 Gigabit Ethernet Controller
  - Intel® Ethernet 82599EN 10 Gigabit Ethernet Controller
- Intel® Ethernet Converged Network Adapter X520 Family
  - Intel® Ethernet Server Adapter X520-DA2
  - Intel® Ethernet Server Adapter X520-SR1
  - Intel® Ethernet Server Adapter X520-SR2
  - Intel® Ethernet Server Adapter X520-LR1
  - Intel® Ethernet Server Adapter X520-T2
- Intel® Ethernet Controller I350 Family
  - Intel® Ethernet Controller I350-AM4
  - Intel® Ethernet Controller I350-AM2
  - Intel® Ethernet Controller I350-BT2
- Intel® Ethernet Server Adapter I350 Family
  - Intel® Ethernet Server Adapter I350-T2
  - Intel® Ethernet Server Adapter I350-T4
  - Intel® Ethernet Server Adapter I350-F2
  - Intel® Ethernet Server Adapter I350-F4
- Intel® 82576 Gigabit Ethernet Controller Family
  - Intel® 82576EB Gigabit Ethernet Controller
  - Intel® 82576NS Gigabit Ethernet Controller