I’ve heard that if a guest under ESXi has N virtual CPUs, the hypervisor waits until N logical processors on the host are available simultaneously before scheduling the guest’s work onto the hardware. The advice, therefore, is to think carefully before adding X vCPUs to a guest unless you truly need the extra processing cycles. Supposedly the wait grows in proportion to X, so you want to be sure the gain from the additional vCPUs outweighs the cost of the longer delay.
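To make the claim concrete, here is a toy model of it as stated (strict co-scheduling). It is not a description of how ESXi’s scheduler actually behaves. It assumes, purely hypothetically, that each logical processor on the host is busy independently with the same probability `p_busy` at any instant, and that a guest with N vCPUs can only be dispatched when at least N logical processors are idle at once. Within that model, the chance of dispatch is a binomial tail, and the expected number of attempts is its reciprocal (a geometric distribution, assuming attempts are independent). The function names and the 80% busy figure are illustrative only.

```python
from math import comb


def prob_n_idle(total_lps: int, vcpus: int, p_busy: float) -> float:
    """Probability that at least `vcpus` of `total_lps` logical processors are idle
    at the same instant, assuming each is busy independently with probability p_busy."""
    p_idle = 1.0 - p_busy
    return sum(
        comb(total_lps, k) * p_idle**k * p_busy ** (total_lps - k)
        for k in range(vcpus, total_lps + 1)
    )


def expected_attempts(total_lps: int, vcpus: int, p_busy: float) -> float:
    """Expected number of independent scheduling attempts until enough logical
    processors are idle (geometric mean 1/P). Returns inf if dispatch is impossible."""
    p = prob_n_idle(total_lps, vcpus, p_busy)
    return float("inf") if p == 0 else 1.0 / p


if __name__ == "__main__":
    # Hypothetical host: 24 logical processors, each busy 80% of the time.
    for n in (1, 2, 4, 6, 8):
        print(n, "vCPUs:",
              "P(dispatch) =", round(prob_n_idle(24, n, 0.8), 4),
              "expected attempts =", round(expected_attempts(24, n, 0.8), 2))
```

Under these assumptions the probability of finding N idle logical processors falls as N grows, which is the intuition behind the advice; whether ESXi really schedules this way is exactly what the question is asking.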

In the extreme case, suppose ESXi hosts hA and hB have identical hardware and configurations, and each runs a single guest (gA and gB, respectively). The guests are identical except that gA has 1 virtual CPU and gB has 2. If you run the same (non-parallelizable) workload on both hosts, gA “should” complete the task “faster.”

- Is this processor wait delay real? Can it be confirmed in VMware documentation?

- If it is real (and not already spelled out in the documentation), are there equations for projecting the impact of adding vCPUs to a guest ahead of time? Is there tooling to measure the real-world impact the delay is already having?

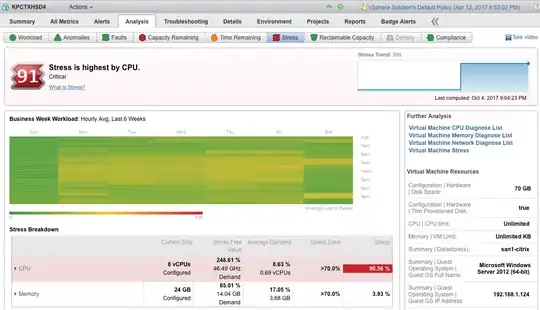

If it’s at all relevant, the real-world problem prompting this question is an MS SQL Server 2014 guest with 4 vCPUs that run at about 60% utilization on average during the day, with regular spikes to 100%. We’re having an internal debate about whether it’s wise to increase the guest to 6 or 8 vCPUs to relieve some performance problems we’re experiencing. The host (I don’t know the model, only the specs) has two six-core 2.6 GHz Intel CPUs with Hyper-Threading, so 24 logical cores per host.
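The host arithmetic above, plus a rough “busy vCPU” figure for the SQL Server guest, can be checked quickly. This is only a sketch: it treats average utilization as a simple product of vCPU count and utilization, which ignores the 100% spikes, and the helper names are hypothetical.

```python
def host_logical_cpus(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical processors the hypervisor sees (hardware threads)."""
    return sockets * cores_per_socket * threads_per_core


def busy_vcpu_equivalent(vcpus: int, avg_utilization: float) -> float:
    """Average number of vCPUs' worth of work, e.g. 4 vCPUs at 60% is about 2.4."""
    return vcpus * avg_utilization


if __name__ == "__main__":
    physical = 2 * 6                       # two sockets, six cores each
    logical = host_logical_cpus(2, 6, 2)   # Hyper-Threading: 2 threads per core
    print("physical cores:", physical, "logical cores:", logical)
    print("average busy vCPUs today:", round(busy_vcpu_equivalent(4, 0.60), 2))
    for proposed in (6, 8):
        # Share of the host's physical cores a single guest of this size would span.
        print(proposed, "vCPUs ->", round(proposed / physical, 2), "of physical cores")
```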