Without going into the specifics of this particular operator's setup (which I'm not familiar with), the answer to the general question is clear.

DNS has a long history of being designed with redundancy in mind (the protocol has built-in facilities for synchronizing zone data between servers, multiple authoritative nameservers are natively supported by simply adding multiple NS records, most registries outright require at least two nameservers when delegating your registered domain name, and so on).
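To make the "multiple NS records" point concrete, here is a toy model, not how any particular resolver is implemented: the zone stays resolvable as long as at least one server in its NS set still answers. The `Nameserver` class, the `reachable` flag and the `first_answering` function are hypothetical names made up for this illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Nameserver:
    """Hypothetical model of one authoritative server listed in a zone's NS set."""
    name: str
    reachable: bool  # stands in for "currently answers queries"


def first_answering(ns_set: list[Nameserver]) -> str | None:
    """Return the name of the first reachable nameserver, or None if all are down.

    Toy model: real resolvers pick among NS targets using measured
    round-trip times plus timeouts and retries, not plain list order.
    """
    for ns in ns_set:
        if ns.reachable:
            return ns.name
    return None  # no listed server answers: the zone cannot be resolved


ns_set = [
    Nameserver("ns1.example.net.", reachable=False),
    Nameserver("ns2.example.org.", reachable=True),
]
print(first_answering(ns_set))
```

Losing `ns1` alone does not take the zone down. Only when every listed server is unreachable does the function return `None`, which is why the servers themselves must not share a common point of failure.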

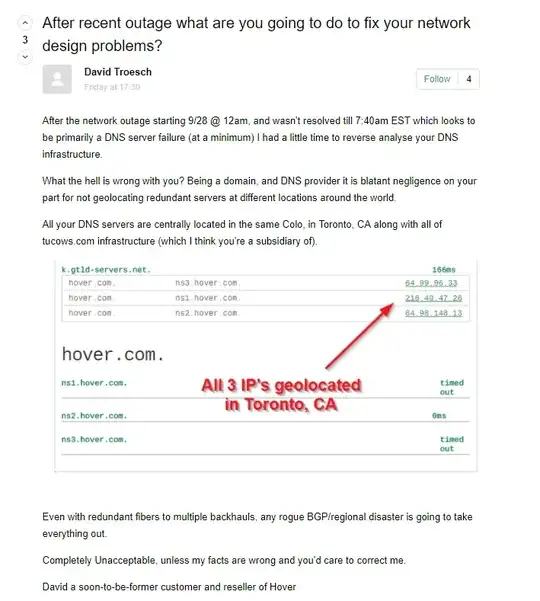

It's also long-established best practice to have diversity among your authoritative nameservers, in terms of both geographical location and network topology.

An example of this is RFC 2182, Selection and Operation of Secondary DNS Servers (also known as BCP 16, since it has Best Current Practice status), a document from 1997 specifically on this subject.

The section of this document on Selecting Secondary Servers (i.e., what the full set of authoritative nameservers should look like) reads:

> 3.1. Selecting Secondary Servers
>
> When selecting secondary servers, attention should be given to the various likely failure modes. Servers should be placed so that it is likely that at least one server will be available to all significant parts of the Internet, for any likely failure.
>
> Consequently, placing all servers at the local site, while easy to arrange, and easy to manage, is not a good policy. Should a single link fail, or there be a site, or perhaps even building, or room, power failure, such a configuration can lead to all servers being disconnected from the Internet.
>
> Secondary servers must be placed at both topologically and geographically dispersed locations on the Internet, to minimise the likelihood of a single failure disabling all of them.
>
> That is, secondary servers should be at geographically distant locations, so it is unlikely that events like power loss, etc, will disrupt all of them simultaneously. They should also be connected to the net via quite diverse paths. This means that the failure of any one link, or of routing within some segment of the network (such as a service provider) will not make all of the servers unreachable.
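One way to apply the quoted guidance mechanically is to look for any failure domain that every nameserver shares, since a failure there would disable all of them at once. The sketch below assumes you can describe each server's placement by site, upstream provider and region; the `ServerPlacement` class, its fields and `shared_failure_domains` are hypothetical, and the AS numbers are from the documentation range.

```python
from __future__ import annotations

from dataclasses import dataclass

# Failure domains to check; which attributes matter is an assumption.
FAILURE_DOMAINS = ("site", "provider", "region")


@dataclass(frozen=True)
class ServerPlacement:
    name: str
    site: str      # building / data centre (hypothetical field)
    provider: str  # upstream network, e.g. an AS number (hypothetical field)
    region: str    # geographic area sharing power/disaster risk (hypothetical field)


def shared_failure_domains(servers: list[ServerPlacement]) -> list[tuple[str, str]]:
    """Return (domain, value) pairs shared by every server.

    Each returned pair is a single failure that would disable all of the
    servers at once, which is what RFC 2182 section 3.1 warns against.
    """
    if not servers:
        raise ValueError("a zone needs at least one authoritative nameserver")
    shared = []
    for domain in FAILURE_DOMAINS:
        values = {getattr(s, domain) for s in servers}
        if len(values) == 1:  # every server has the same value here
            shared.append((domain, next(iter(values))))
    return shared


all_local = [
    ServerPlacement("ns1.example.com.", "hq-room-1", "AS64500", "eu-west"),
    ServerPlacement("ns2.example.com.", "hq-room-1", "AS64500", "eu-west"),
]
dispersed = [
    ServerPlacement("ns1.example.com.", "hq-room-1", "AS64500", "eu-west"),
    ServerPlacement("ns2.example.net.", "colo-b", "AS64511", "us-east"),
]
print(shared_failure_domains(all_local))
print(shared_failure_domains(dispersed))
```

The "all at the local site" setup the RFC describes is flagged on every domain, while the dispersed pair shares none. The check is only as good as the attributes you feed it: it cannot see dependencies you did not model.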

The above are best practices for DNS deployments in general.

Obviously one will have to adjust expectations somewhat to the situation, but for a large-scale deployment operated by a company that has these services as part of its core business, the above really just makes sense.