Here's a great writeup on scaling with heroku (and it applies to traditional deployments).

Given that we want containerized applications to be single process, how do we get:

- slow client protection

- slow response protection

in a Kubernetes/GKE environment that takes full advantage of horizontal pod autoscaling?

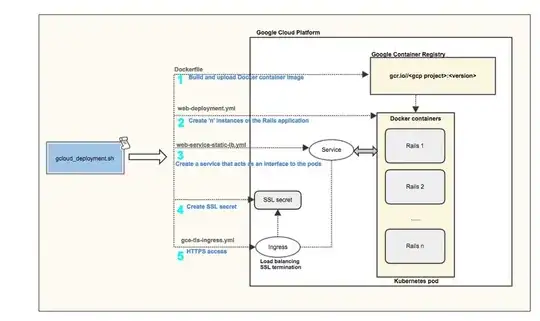

Assume my deployment looks much like the following (credit @nithinmallya4):

I have not yet selected a web server, and by default rackup is serving WEBrick. I was considering just changing this to multi-threaded Puma.

My concern is that the autoscaler works based on CPU, not based on an idea that it is consumed by a current http/s request, so it may not come into play.

- Am I understanding the autoscaler correctly?

- What is the ideal scale up/down architecture?

Our current thoughts:

nginx in a pod sidecar pattern (with a gzip

deflater) behind anIngress.pumain front of rails (in the sameimageas rails-api), assuming that it would better utilize cpu and trigger autoscalecustom metrics for HPA (still need to research this with 1.8)