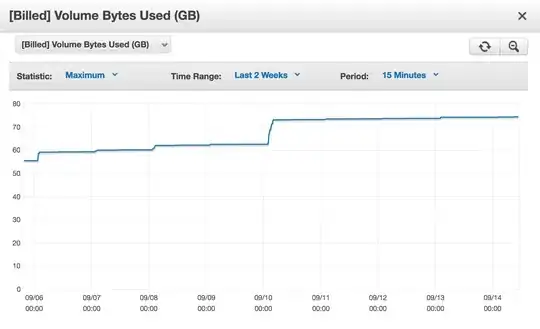

I have an Amazon (AWS) Aurora DB cluster, and every day, its [Billed] Volume Bytes Used is increasing.

I have checked the size of all my tables (in all my databases on that cluster) using the INFORMATION_SCHEMA.TABLES table:

SELECT ROUND(SUM(data_length)/1024/1024/1024) AS data_in_gb, ROUND(SUM(index_length)/1024/1024/1024) AS index_in_gb, ROUND(SUM(data_free)/1024/1024/1024) AS free_in_gb FROM INFORMATION_SCHEMA.TABLES;

+------------+-------------+------------+
| data_in_gb | index_in_gb | free_in_gb |
+------------+-------------+------------+
|         30 |           4 |         19 |
+------------+-------------+------------+

Total: 53GB
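For a per-table view of the same numbers, a query along these lines could be used. This is only a sketch: it assumes the standard INFORMATION_SCHEMA.TABLES columns, and the `LIMIT 20` cutoff is an arbitrary choice for illustration.

```sql
-- Per-table breakdown, largest reported free space first.
-- Uses the same columns as the aggregate query above.
SELECT table_schema,
       table_name,
       ROUND(data_length  / 1024 / 1024 / 1024, 2) AS data_in_gb,
       ROUND(index_length / 1024 / 1024 / 1024, 2) AS index_in_gb,
       ROUND(data_free    / 1024 / 1024 / 1024, 2) AS free_in_gb
FROM INFORMATION_SCHEMA.TABLES
WHERE table_type = 'BASE TABLE'   -- skip views and system views
ORDER BY data_free DESC
LIMIT 20;                         -- arbitrary cutoff, adjust as needed
```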

So why am I being billed for almost 75GB at this time?

I understand that provisioned space can never be freed, in the same way that the ibdata files on a regular MySQL server can never shrink; I'm OK with that. This is documented, and acceptable.

My problem is that every day, the space I'm billed increases. And I'm sure I am NOT using 75GB of space temporarily. If I were to do something like that, I'd understand. It's as if the storage space I am freeing, by deleting rows from my tables, or dropping tables, or even dropping databases, is never re-used.

I have contacted AWS (premium) support multiple times, and have never been able to get a good explanation of why that is.

I've received three suggestions: run OPTIMIZE TABLE on the tables that show a lot of free space (data_free in the INFORMATION_SCHEMA.TABLES table); check the InnoDB history list length, to make sure deleted data isn't still being kept in the rollback segment (ref: MVCC); and restart the instance(s) to make sure the rollback segment is emptied.
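For reference, the first two checks look roughly like this. It is a sketch, not necessarily the exact statements support had in mind: `mydb.mytable` is a placeholder name, and it assumes the cluster exposes the standard INFORMATION_SCHEMA.INNODB_METRICS view.

```sql
-- Rebuild a table that reports a lot of free space.
-- mydb.mytable is a placeholder, not a real table of mine.
OPTIMIZE TABLE mydb.mytable;

-- InnoDB history list length: a large value means old row versions
-- are still waiting to be purged from the rollback segment.
SELECT name, count
FROM INFORMATION_SCHEMA.INNODB_METRICS
WHERE name = 'trx_rseg_history_len';
```

The same figure also appears as "History list length" in the output of SHOW ENGINE INNODB STATUS.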

None of those helped.