Without seeing statistics of the big tables (specifically are they being effectively vacuumed by auto-vacuum), my main suggestion is to limit the amount of history you keep (specifically what goes into the history* tables, as opposed to trend* tables).

Generally speaking Zabbix manages the volume of data collected by turning history (detailed observations) into trends (aggregated observations); the idea is you keep history for a brief period where it might be meaningful to see minute by minute exact data, but for longer term studies aggregated data is adequate. Moreover, it means that the history tables (which are busily having data added) are also smaller, and the trend tables can be larger but with less write activity.

It sounds like you are doing the opposite, keeping no trend data, but all history? Is there a reason, or accident?

Aside, which becomes relevant in a moment: Partitioning is a tool, it will not solve your immediate problem, but working on very large datasets like this it will become your friend. That said, partitioning (mostly) requires discipline in history and trend retention, you have to keep all items a similar length of time so you can just drop their associated partition as it ages off. Back to the main answer...

What I do is look at different items and decide how I use them, and keep history only as long as I really need, and keep trend only as long as well, if at all. For example, I have quite a few sanity checks, that alert if something goes wonky, but generally are items returning 0, "OK" or some such. It's rather pointless to keep those more than a few days. This item-specific retention is at odds with partitioning, though, so you decide.

More relevant is what you poll and how often. I dropped our number of items by a factor of maybe 10 by aggressively filtering out stuff no one was looking at. One of the biggest is interfaces - some devices that have one physical interface may have 6 or 10 virtual ones; sure (someone will say) they have meaning, but is anyone actually looking at data collected from them? Subinterfaces, loopback interfaces, (some) virtual interfaces, etc. Network admins often think "I'll keep everything just in case", but it is rarely useful - go spelunking into the item data, and see where you have large numbers of interfaces you just will never need to know about. Or worst case, you might have to start monitoring again. Whack them from low level discovery.

While you are in there, look at WHAT you are collecting for interfaces. Same idea; people often collect everything SNMP shows because, well, they can. Pretend for a moment you are paying for each data item, and ask yourself if it's worth keeping if you were paying by the item. (In a sense, data storage wise, you are). If you've done monitoring for a few years, ask yourself if you EVER have needed a count of fragment failures (simple example of what sounds like a real and useful item, maybe is to some people). What would you do if you say 5 appear? If it's not actionable, why keep it? If it's the kind of thing you look at reactively, in real time, why keep it from every system historically?

While you are in there, ask why you are polling so fast on some items. Consider packet/byte count issues - sure, it's kind of neat to watch a real-time-like history graph every 60 seconds, but is it actionable? Does it teach you more than one every 180 seconds? Every 300 seconds? You are probably collecting a LOT of such data, very fast - will you use it? I've had network admins say "but I need to react quickly to problems". Then you find them putting in latency and hysteresis so as to avoid false alarms and flapping.

Drop back on what you collect and how often and your history will shrink by a factor of 10 (+/-) without significantly impacting its utility. Then cut back on how long you keep it in detail (vs trends) and it can drop by another factor of 2 or 4.

Long, rambling answer, but basically: if it's not actionable, don't keep it. You can always put it back if you guess wrong.

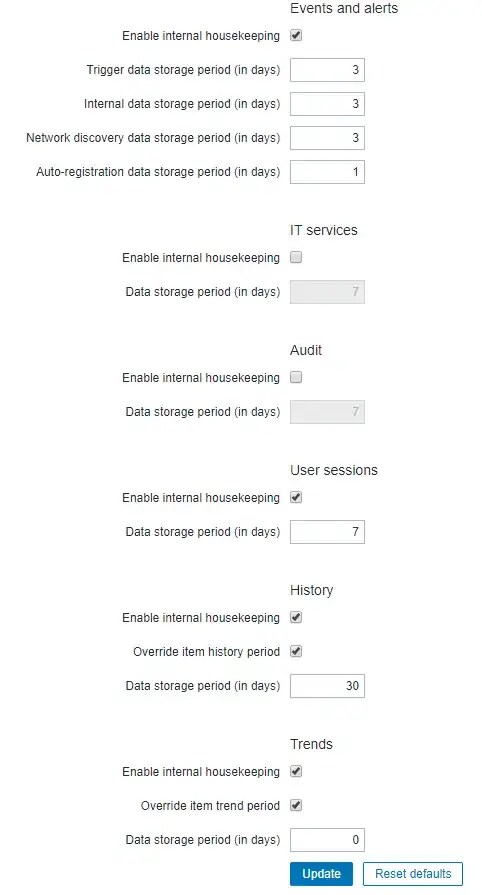

Finally: Be sure auto-vacuum is working effectively, consider setting housekeeping's delete maximum to 0 (delete all at once) but monitor carefully afterwards for blocking (on a good sized system with adequate memory/process/disk speed this can significantly speed up house keeping, but it also risks blocking if it tries to do TOO much at once).

OK, really finally: If you DO decide to do as suggested and eliminate a lot of items, consider whether you can just start over on the data. Cleaning up hundreds of gigs of data in housekeeping is going to be a huge challenge.