I have a problem on a Windows Server 2016 Failover Cluster (WSFC) hosting a SQL Server Failover Cluster Instance (FCI) on Storage Spaces Direct (S2D). On each server, after the initial creation had succeeded, S2D automatically added an otherwise unused RAID volume to the storage pool (even though S2D cannot be created on RAID volumes and absolutely insists on non-RAID disks). Now it's broken, and as far as I could figure out, exactly that is the cause. As a consequence, the virtual disk is offline, taking the whole cluster down with it. It won't come back online because of a missing cluster network resource. The disks in question can be retired but not removed. Virtual disk repair does nothing, and the cluster compatibility test claims an invalid configuration.

This is a new setup, so I could simply delete the virtual disk, the cluster or even the servers and start over. But before we go into production, I need to make sure this never happens again. A system that shoots itself in the virtual knee and grinds to a halt just by needlessly and wrongly adding an unsupported disk is no platform we can deploy. So primarily I need a way to prevent this from happening, rather than a way to repair it now. My guess is that preventing an S2D setup from grabbing more disks than it was created on would do the trick. The cost of potentially more manual interaction during a real disk replacement is negligible compared to the clusterf... we have here. Much as I have browsed the documentation so far, however, I cannot find any way to control that. Unless I'm missing something, none of Set-StoragePool, Set-VirtualDisk and Set-Volume offers a parameter to that effect.

Any help or hint would be greatly appreciated.

What follows is just more detail on the above. We have 2 HPE DL380 Gen9 servers, connected to each other via redundant RDMA-capable 10 Gbit Ethernet links and to the client network via 1 Gbit. Each features a RAID controller HP ??? and a simple HBA controller HP ??? (since S2D absolutely requires, and works only on, directly attached, non-RAID disks). The storage configuration consists of an OS-RAID on the RAID controller, a Files-RAID on the RAID controller, and the set of directly attached disks on the HBA intended for S2D.

I installed Windows Server 2016 Datacenter edition on the OS-RAID of both servers, installed the WSFC feature, ran and passed the cluster compatibility test including the S2D option, created the cluster without storage, added a file share witness (on a separate machine), and enabled S2D, whose storage pool automatically comprised all of the non-RAID disks. On top of that pool I created a virtual disk of the mirror type and formatted it with NTFS, since this is supposed to be the file system of choice for a SQL FCI installation.

I then installed SQL Server 2016 Standard edition as an FCI on that cluster, imported a database and tested it all. Everything was fine. The database was right there and faster than ever. Forced as well as automatic failover was a breeze. Everything looked good.

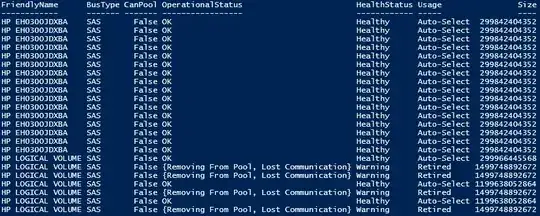

The next day we tried to make use of the remaining Files-RAID. The first thing was to change the RAID level, as we didn't like the pre-configuration. Shortly after deleting the pre-configured RAID volume and building a new one (on each server), we noticed that the cluster was down. From what I could figure out so far, the pre-configured Files-RAID volume had in the meantime been automatically added to the pool, and since we had just deleted it, it was now missing from the pool. While checking, I found that the new Files-RAID, although still being built, was already shown as a physical drive of the pool as well. So the pool now included 2 RAID volumes on each server, one of which didn't even exist any more. These volumes (but not their member disks) are listed by Get-PhysicalDisk along with the actual physical disks on the HBA; I'm not sure whether that's normal. The pool itself is still online and doesn't complain. The virtual disk, however, is not simply degraded for missing disks, but completely offline (and so, in consequence, is the whole cluster).
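For anyone who wants to look at the same thing on their own system, here is a minimal sketch of how the pool members can be listed together with their bus type, which is where RAID volumes should stand out from the HBA disks. The pool name pattern `S2D*` is an assumption (adjust it to the actual pool name), and I'm assuming the RAID volumes report a `BusType` of `RAID`.

```powershell
# Assumption: the S2D pool's friendly name starts with "S2D"; adjust as needed.
$pool = Get-StoragePool -FriendlyName 'S2D*'

# List every physical disk that is a member of the pool.
# BusType should distinguish controller-presented RAID volumes from HBA disks.
$pool | Get-PhysicalDisk |
    Sort-Object BusType, FriendlyName |
    Format-Table FriendlyName, SerialNumber, BusType, MediaType, Usage, OperationalStatus, HealthStatus, Size -AutoSize

# Disks that are not (yet) in any pool, and why they can or cannot be pooled.
Get-PhysicalDisk | Where-Object { -not $_.CanPool } |
    Format-Table FriendlyName, BusType, CanPool, CannotPoolReason -AutoSize
```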

I was able to retire those physical disks (i.e. the ones that are actually RAID volumes), and they are now marked as retired. But they are still in the pool and I cannot remove them; trying to do so fails. A Repair-VirtualDisk should rebuild the virtual disk to a proper state on just the remaining disks (I went by this: https://social.technet.microsoft.com/Forums/windows/en-US/dbbf317b-80d2-4992-b5a9-20b83526a9c2/storage-spaces-remove-physical-disk?forum=winserver8gen), but the job finishes immediately, "successfully" of course, with no effect whatsoever.
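The retire / repair / remove sequence described above looks roughly like the sketch below. This is an illustration of the approach, not a transcript of my session: the pool name pattern `S2D*` and the virtual disk name `SqlData` are placeholders, and selecting the disks by `BusType -eq 'RAID'` is an assumption about how the RAID volumes show up.

```powershell
# Placeholders: pool name pattern and virtual disk name are hypothetical.
$pool      = Get-StoragePool -FriendlyName 'S2D*'
$raidDisks = $pool | Get-PhysicalDisk | Where-Object BusType -eq 'RAID'

# 1. Mark the unwanted pool members as retired so no new data is placed on them.
$raidDisks | Set-PhysicalDisk -Usage Retired

# 2. Ask Storage Spaces to rebuild the virtual disk on the remaining disks,
#    then check whether a repair job is actually running.
Repair-VirtualDisk -FriendlyName 'SqlData'
Get-StorageJob

# 3. Once the repair has completed, remove the retired disks from the pool.
#    (This is the step that fails for me.)
Remove-PhysicalDisk -PhysicalDisks $raidDisks -StoragePoolFriendlyName $pool.FriendlyName
```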

Trying to bring the virtual disk back online fails with a message that a networked cluster resource is unavailable. As far as I understand, this could only refer to the storage pool (which is available), since the missing disks are not cluster resources. The pool shows no errors to fix. The cluster compatibility test reports a configuration not suited for a cluster.

I cannot find any part left that will budge another inch; the whole thing looks deadlocked for good. Any ideas on how to prevent a running WSFC from f...ing itself up that way?

I did not encounter any error message that I found particularly enlightening, and I didn't want to bloat this post even more by including all of them. If anyone wants a specific detail, just let me know.

Thanks a lot for your time, guys!

Karsten