I'm a bit confused about how to set up a SAN with iSCSI and multipath. We are upgrading our network to 10Gbit, so there are 2 10Gbit switches configured with MLAG for normal Ethernet traffic. LAG groups are configured on the appropriate NICs, for Ethernet traffic only.

Now there's a SAN with 2 controllers. Each controller houses 4 x 10Gbit NICs. So my initial plan was to use all 4 NICs on each controller to maximize throughput, as there are multiple servers connecting to the SAN.

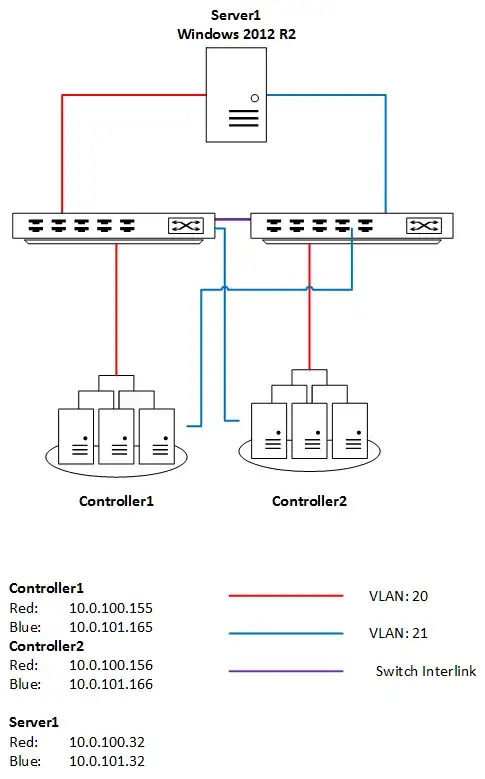

The servers connecting to the SAN each have 2 dedicated 10Gbit NICs for SAN traffic. Each NIC connects to one of the switches. There's no LAG on those interfaces; they are configured with static addresses in the SAN network.

- Is it OK for the 2 controllers of the SAN to be in the same subnet, i.e. to have one main SAN network? Or do I need an independent segment for each controller?

- What about the 4 NICs of each SAN controller? Do I just bundle 2 NICs in a LACP group on each controller? Or is LAG not needed at all? I'm a bit confused about what a reasonable configuration would be. Connect 2 NICs of each controller to one switch? Would I assign each NIC a dedicated address in the SAN network and use all 8 addresses in total for multipathing?

Well, I'm not a storage expert, as one can see. :-)

So I got an update on question number 1. Assuming I'm going to use 2 NICs of each SAN controller (not all 4, to keep it simple for the time being), it would make sense to use two different subnets. It seems that this increases the chance that MPIO is used efficiently.

If all the NICs are on the same subnet, there's no guarantee that MPIO is being used.
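To make the two-subnet idea concrete for myself, here is a rough Python sketch of how I picture the addressing plan. All subnets, addresses and port names below are made up for illustration and are not taken from my actual configuration. It only enumerates which server NIC can reach which controller port when each switch side gets its own subnet; it does not talk to any real initiator or SAN.

```python
import ipaddress
from itertools import product

# Hypothetical addressing plan (assumption): one subnet per switch side.
SUBNET_A = ipaddress.ip_network("10.10.1.0/24")  # ports cabled to switch 1
SUBNET_B = ipaddress.ip_network("10.10.2.0/24")  # ports cabled to switch 2

# Hypothetical server NICs, one per switch.
server_nics = {
    "server-nic1": ipaddress.ip_address("10.10.1.11"),
    "server-nic2": ipaddress.ip_address("10.10.2.11"),
}

# Hypothetical SAN ports: 2 per controller, one in each subnet.
san_ports = {
    "ctrl1-port1": ipaddress.ip_address("10.10.1.101"),
    "ctrl1-port2": ipaddress.ip_address("10.10.2.101"),
    "ctrl2-port1": ipaddress.ip_address("10.10.1.102"),
    "ctrl2-port2": ipaddress.ip_address("10.10.2.102"),
}


def subnet_of(addr):
    """Return the planned subnet that contains addr."""
    for net in (SUBNET_A, SUBNET_B):
        if addr in net:
            return net
    raise ValueError(f"{addr} is not in any planned SAN subnet")


def candidate_paths(initiators, targets):
    """Pair each initiator NIC with the target ports in its own subnet."""
    return [
        (i_name, t_name)
        for (i_name, i_addr), (t_name, t_addr) in product(
            initiators.items(), targets.items()
        )
        if subnet_of(i_addr) == subnet_of(t_addr)
    ]


paths = candidate_paths(server_nics, san_ports)
for initiator, target in paths:
    print(f"{initiator} -> {target}")
```

With this plan, each server NIC ends up with exactly one path to each controller, and no path crosses from one subnet to the other, so the pairing of initiator NIC and controller port is unambiguous.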

I'm still investigating question 2 though. I found multiple articles describing a setup as outlined above, but using just 2 NICs of each SAN controller. I tested that out, but contrary to all the articles I don't get one single iSCSI target; I get two (one for each controller). When I configure MPIO using both targets, my throughput drops from 1800MB/s to around 30MB/s.

Switches: 2 x Mellanox SX1012

Storage: QSAN XS5200 with 4 x SFP+ 10Gbit adapter per controller

Server: Supermicro 2028TP-HC1R-SIOM, 4 x SFP+ Intel X710

The current configuration looks as follows: