I'm provisioning a Galera MySQL cluster under Vagrant using a multi-machine Vagrantfile.

I don't believe the issue lies with Vagrant.

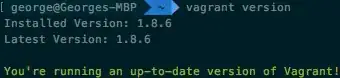

Vagrant Version

VagrantFile

Vagrant.configure(2) do |config|
  config.vm.box = "ubuntu/trusty64"

  config.vm.provider "virtualbox" do |vb|
    vb.memory = "2048"
  end

  config.ssh.forward_agent = true

  if Vagrant.has_plugin?("vagrant-cachier")
    config.cache.scope = :box
    config.cache.enable :apt
  end

  config.vm.define "core0" do |core0|
    core0.vm.network "private_network", ip: "192.168.50.3"
    core0.vm.hostname = "core0"
    core0.vm.provision :hosts, :sync_hosts => true
    core0.vm.provision "shell", inline: <<-SHELL
      sudo python /vagrant/bootstrap.pex --core-nodes core0 core1 core2 --node-zero
    SHELL
  end

  config.vm.define "core1" do |core1|
    core1.vm.network "private_network", ip: "192.168.50.4"
    core1.vm.hostname = "core1"
    core1.vm.provision :hosts, :sync_hosts => true
    core1.vm.provision "shell", inline: <<-SHELL
      sudo python /vagrant/bootstrap.pex --master core0 --core
    SHELL
  end

  config.vm.define "core2" do |core2|
    core2.vm.network "private_network", ip: "192.168.50.5"
    core2.vm.hostname = "core2"
    core2.vm.provision :hosts, :sync_hosts => true
    core2.vm.provision "shell", inline: <<-SHELL
      sudo python /vagrant/bootstrap.pex --master core0 --core
    SHELL
  end
end

Vagrant Plugins

I'm using the vagrant-cachier and vagrant-hosts plugins.

Vagrant creates each VM in turn, and I then provision in two stages so that networking between the boxes is established before clustering is attempted.

vagrant up --provision-with hosts && vagrant provision --provision-with shell

The shell provisioner uses Salt to install and set up MariaDB and Gluster.

MariaDB versions

vagrant@core0:~$ sudo apt-cache policy mariadb-server-core-10.1

mariadb-server-core-10.1:

Installed: 10.1.18+maria-1~trusty

Candidate: 10.1.18+maria-1~trusty

vagrant@core0:~$ sudo apt-cache policy galera-3

galera-3:

Installed: 25.3.18-trusty

Candidate: 25.3.18-trusty

I configure galera.cnf with the cluster address as:

wsrep_cluster_address = gcomm://core2,core0,core1

When core1 and core2 attempt to join core0, they are unable to.

core1 joining cluster

core1 is able to find core0 and retrieve the current cluster state.

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Note] WSREP: gcomm: connecting to group 'TestSystem', peer 'core2:,core0:,core1:'

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Note] WSREP: (a61950db, 'tcp://0.0.0.0:4567') connection established to a61950db tcp://127.0.0.1:4567

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Note] WSREP: (a61950db, 'tcp://0.0.0.0:4567') connection established to a61950db tcp://127.0.1.1:4567

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Warning] WSREP: (a61950db, 'tcp://0.0.0.0:4567') address 'tcp://127.0.1.1:4567' points to own listening address, blacklisting

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Note] WSREP: (a61950db, 'tcp://0.0.0.0:4567') connection established to a5301480 tcp://192.168.50.3:4567

Oct 12 15:15:02 core1 mysqld: 2016-10-12 15:15:02 140403237877696 [Note] WSREP: (a61950db, 'tcp://0.0.0.0:4567') turning message relay requesting on, nonlive peers:

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237877696 [Note] WSREP: declaring a5301480 at tcp://192.168.50.3:4567 stable

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237877696 [Note] WSREP: Node a5301480 state prim

core2 not available

As expected, core2 is not available at this point.

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237877696 [Note] WSREP: discarding pending addr without UUID: tcp://192.168.50.5:4567

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237877696 [Note] WSREP: gcomm: connected

SST failure

core1 prepares its SST request with the address 10.0.2.15, which is the Vagrant NAT address, and the state transfer from core0 then fails.

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237563136 [Note] WSREP: State transfer required:

Oct 12 15:15:03 core1 mysqld: #011Group state: a530f9fd-908d-11e6-a72a-b2e3a6b91029:1113

Oct 12 15:15:03 core1 mysqld: #011Local state: 00000000-0000-0000-0000-000000000000:-1

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237563136 [Note] WSREP: New cluster view: global state: a530f9fd-908d-11e6-a72a-b2e3a6b91029:1113, view# 2: Primary, number of nodes: 2, my index: 1, protocol version 3

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140403237563136 [Warning] WSREP: Gap in state sequence. Need state transfer.

Oct 12 15:15:03 core1 mysqld: 2016-10-12 15:15:03 140402002753280 [Note] WSREP: Running: 'wsrep_sst_xtrabackup-v2 --role 'joiner' --address '10.0.2.15' --datadir '/var/lib/mysql/' --parent '9043' --binlog '/var/log/mariadb_bin/mariadb-bin' '

Oct 12 15:15:03 core1 mysqld: WSREP_SST: [INFO] Logging all stderr of SST/Innobackupex to syslog (20161012 15:15:03.985)

Oct 12 15:15:03 core1 -wsrep-sst-joiner: Streaming with xbstream

Oct 12 15:15:03 core1 -wsrep-sst-joiner: Using socat as streamer

Oct 12 15:15:04 core1 -wsrep-sst-joiner: Evaluating timeout -k 110 100 socat -u TCP-LISTEN:4444,reuseaddr stdio | xbstream -x; RC=( ${PIPESTATUS[@]} )

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Note] WSREP: Prepared SST request: xtrabackup-v2|10.0.2.15:4444/xtrabackup_sst//1

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Note] WSREP: REPL Protocols: 7 (3, 2)

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402075592448 [Note] WSREP: Service thread queue flushed.

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Note] WSREP: Assign initial position for certification: 1113, protocol version: 3

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402075592448 [Note] WSREP: Service thread queue flushed.

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Warning] WSREP: Failed to prepare for incremental state transfer: Local state UUID (00000000-0000-0000-0000-000000000000) does not match group state UUID (a530f9fd-908d-11e6-a72a-b2e3a6b91029): 1 (Operation not permitted)

Oct 12 15:15:04 core1 mysqld: #011 at galera/src/replicator_str.cpp:prepare_for_IST():482. IST will be unavailable.

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402019526400 [Note] WSREP: Member 1.0 (core1) requested state transfer from '*any*'. Selected 0.0 (core0)(SYNCED) as donor.

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402019526400 [Note] WSREP: Shifting PRIMARY -> JOINER (TO: 1113)

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Note] WSREP: Requesting state transfer: success, donor: 0

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402019526400 [Warning] WSREP: 0.0 (core0): State transfer to 1.0 (core1) failed: -32 (Broken pipe)

Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140402019526400 [ERROR] WSREP: gcs/src/gcs_group.cpp:gcs_group_handle_join_msg():736: Will never receive state. Need to abort.
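To confirm which address ends up in the SST request without reading the whole log, something like the following could be used. It is only a sketch: the function name `sst_request_address` is made up for illustration, and the sample line is copied from the core1 excerpt above rather than read from a live syslog.

```shell
# Log line copied verbatim from the core1 syslog excerpt above.
sample_log='Oct 12 15:15:04 core1 mysqld: 2016-10-12 15:15:04 140403237563136 [Note] WSREP: Prepared SST request: xtrabackup-v2|10.0.2.15:4444/xtrabackup_sst//1'

# Hypothetical helper: print the host:port part of every
# "Prepared SST request" line read from stdin.
sst_request_address() {
  sed -n 's/.*Prepared SST request: [^|]*|\([^/]*\)\/.*/\1/p'
}

printf '%s\n' "$sample_log" | sst_request_address   # prints 10.0.2.15:4444
```

On a real node the input would presumably come from the syslog file instead of the sample variable.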

wsrep status on core0

Logging into MySQL on core0 and running

SHOW GLOBAL STATUS LIKE 'wsrep_%'

+------------------------------+--------------------------------------+
| Variable_name                | Value                                |
+------------------------------+--------------------------------------+
...
| wsrep_cluster_state_uuid     | a530f9fd-908d-11e6-a72a-b2e3a6b91029 |
| wsrep_cluster_status         | Primary                              |
| wsrep_gcomm_uuid             | a5301480-908d-11e6-a84e-0b2444c3985f |
| wsrep_incoming_addresses     | 10.0.2.15:3306                       |
| wsrep_local_state            | 4                                    |
| wsrep_local_state_comment    | Synced                               |
| wsrep_local_state_uuid       | a530f9fd-908d-11e6-a72a-b2e3a6b91029 |
...
+------------------------------+--------------------------------------+

So it appears that core0 is advertising its wsrep incoming address as 10.0.2.15:3306, which isn't the address I expected: 192.168.50.3:3306.

ifconfig on core0

This shows the NAT address on eth0 and the private network address on eth1.

vagrant@core0:~$ ifconfig

eth0 Link encap:Ethernet HWaddr 08:00:27:de:04:89

inet addr:10.0.2.15 Bcast:10.0.2.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:fede:489/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:218886 errors:0 dropped:0 overruns:0 frame:0

TX packets:81596 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:205966097 (205.9 MB) TX bytes:6015101 (6.0 MB)

eth1 Link encap:Ethernet HWaddr 08:00:27:bc:f7:ee

inet addr:192.168.50.3 Bcast:192.168.50.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:febc:f7ee/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:261637 errors:0 dropped:0 overruns:0 frame:0

TX packets:244284 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:59467905 (59.4 MB) TX bytes:114065906 (114.0 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:246320 errors:0 dropped:0 overruns:0 frame:0

TX packets:246320 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:64552545 (64.5 MB) TX bytes:64552545 (64.5 MB)

How and why does the address get set to this? Is there a way to make the node use the correct address?
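One direction that might be worth testing (an assumption on my part, not verified on this cluster): the wsrep provider has a `wsrep_node_address` option that overrides the auto-detected node address. The sketch below only renders a config fragment for a given IP. The function name `render_wsrep_overrides`, the choice of the `[mysqld]` group, and the example IP (core0's eth1 address) are illustrative.

```shell
# Hypothetical helper: print a galera.cnf override fragment that pins
# the node address to the IP given as $1 (e.g. the eth1 address).
render_wsrep_overrides() {
  node_ip="$1"
  printf '[mysqld]\n'
  printf 'wsrep_node_address = %s\n' "$node_ip"
}

render_wsrep_overrides 192.168.50.3
```

Each node would need its own private-network IP here, so the value would have to be templated per host by the provisioner.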

updates

how wsrep_incoming_addresses is set

While wsrep_cluster_address has to be specified at node start, wsrep_incoming_addresses is determined internally during initialization. On Linux operating systems, the command used to determine the IP address picks the first available global IP address from the list of interfaces.

ip addr show | grep '^\s*inet' | grep -m1 global | awk ' { print $2 } ' | sed 's/\/.*//'

https://mariadb.atlassian.net/browse/MDEV-5487

my output

vagrant@core0:~$ ip addr show | grep '^\s*inet' | grep -m1 global | awk '

> {print $2 }

> ' | sed 's/\/.*//'

10.0.2.15
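To see why eth0 wins, the same pipeline can be run against canned input. This is a sketch under assumptions: the sample text is a hypothetical `ip addr show` listing reconstructed from the ifconfig output above (not captured from the VM), GNU grep is assumed for `\s`, and `ip_for_iface` is an illustrative helper, not something MariaDB provides.

```shell
# Hypothetical sample, reconstructed from the ifconfig listing above.
sample_ip_addr() {
  cat <<'EOF'
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536
    inet 127.0.0.1/8 scope host lo
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 10.0.2.15/24 brd 10.0.2.255 scope global eth0
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 192.168.50.3/24 brd 192.168.50.255 scope global eth1
EOF
}

# Same selection steps as the command quoted from MDEV-5487, reading stdin:
# the first "inet ... global" line wins, whichever interface it belongs to.
first_global_ip() {
  grep '^\s*inet' | grep -m1 global | awk '{ print $2 }' | sed 's/\/.*//'
}

# Illustrative variant: only consider global addresses on interface $1.
ip_for_iface() {
  grep '^\s*inet' | grep global | grep -m1 " $1\$" | awk '{ print $2 }' | sed 's/\/.*//'
}

sample_ip_addr | first_global_ip      # prints 10.0.2.15
sample_ip_addr | ip_for_iface eth1    # prints 192.168.50.3
```

Because VirtualBox puts the NAT interface first, the first global address is always the NAT one on these boxes, which matches the 10.0.2.15 seen in wsrep_incoming_addresses.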