Due to Hurricane Matthew, our company shut down all servers for two days. One of the servers was an ESXi host with an attached HP StorageWorks MSA60.

When we powered things back up today and logged into the vSphere client, we noticed that none of our guest VMs were available (they're all listed as "inaccessible"). When I look at the hardware status in vSphere, the array controller and all attached drives appear as "Normal", but the drives all show up as "unconfigured disk".

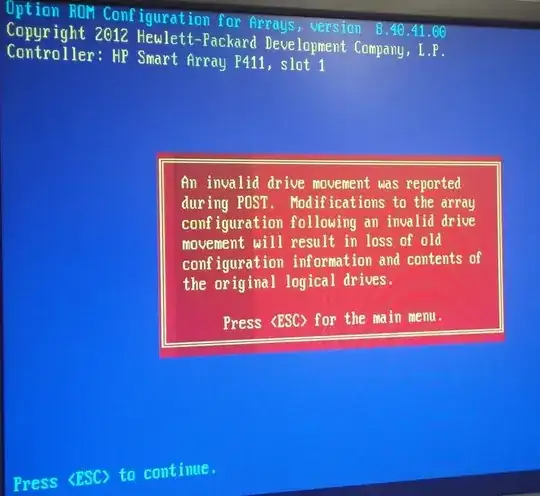

We rebooted the server and tried going into the RAID config utility to see what things look like from there, but we received the following message:

> An invalid drive movement was reported during POST. Modifications to the array configuration following an invalid drive movement will result in loss of old configuration information and contents of the original logical drives

Needless to say, we're very confused by this because nothing was "moved"; nothing changed. We simply powered up the MSA and the server, and have been having this issue ever since.

The MSA is attached via a single SAS cable, and the drives are labeled with stickers, so I know the drives weren't moved or switched around:

---------------------

| 01 | 04 | 07 | 10 |

---------------------

| 02 | 05 | 08 | 11 |

---------------------

| 03 | 06 | 09 | 12 |

---------------------

At the moment, I don't know what make and model the drives are, but they are all 1TB SAS drives.

I have two main questions/concerns:

1. Since we did nothing more than power the devices off and back on, what could've caused this to happen? I of course have the option to rebuild the array and start over, but I'm leery about the possibility of this happening again (especially since I have no idea what caused it).

2. Is there a snowball's chance in hell that I can recover our array and guest VMs, instead of having to rebuild everything and restore our VM backups?