I have an HP ProLiant DL380 G7 with an HP Smart Array P812 with 1G BBWC. The controller is connected to a D2600 storage enclosure with one mini-SAS cable, and the internal backplane is plugged into the controller's internal SAS port. All firmware versions are the latest (including the disks).

There is one RAID 5 array (across 3 x 4TB SATA disks) and three RAID 1 arrays across 1TB SATA disks. Additionally, there are internal 2.5-inch SAS disks connected to the internal port of the controller: 3 x 300GB in RAID 5 and 2 x 300GB in RAID 1. The problem seems to affect both the "internal" disks and the disks in the D2600 enclosure.

I am having some very weird performance issues on this system that I cannot track down.

The server is running ESXi 6 from an internal HP Enterprise USB storage device.

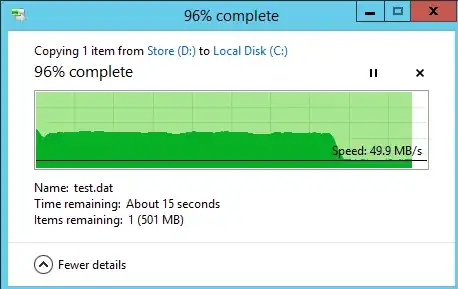

Under low disk load there is no problem. The issues start under sustained load: if I copy a benchmark file from one disk array to another, it initially runs at 250 MB/s for a random amount of time (between 10 and 45 seconds). After this, disk I/O drops considerably and becomes very erratic (see screenshot).

If the I/O load continues, the transfer rate eventually drops to 0 and the array stops responding entirely.

Simultaneously the ESX host logs the following:

Device naa.bla performance has deteriorated. I/O latency increased from average value of 5134 microseconds to 434632 microseconds.
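To put those numbers in perspective, here is a minimal Python sketch that pulls the two latency values out of a warning like the one above and converts them to milliseconds. The helper name `parse_latency_warning` is my own, and the regex assumes the message is worded exactly as quoted here; other ESXi builds may format it differently.

```python
import re

# Pattern for the latency warning as quoted above (assumed wording).
LATENCY_RE = re.compile(
    r"Device (?P<device>\S+) performance has deteriorated\. "
    r"I/O latency increased from average value of (?P<avg>\d+) microseconds "
    r"to (?P<now>\d+) microseconds"
)

def parse_latency_warning(line):
    """Return (device, avg_ms, current_ms, ratio), or None if the line does not match."""
    m = LATENCY_RE.search(line)
    if m is None:
        return None
    avg_us = int(m.group("avg"))
    now_us = int(m.group("now"))
    # Convert microseconds to milliseconds and compute the slowdown factor.
    return m.group("device"), avg_us / 1000, now_us / 1000, now_us / avg_us

line = ("Device naa.bla performance has deteriorated. I/O latency increased "
        "from average value of 5134 microseconds to 434632 microseconds.")
device, avg_ms, now_ms, ratio = parse_latency_warning(line)
print(f"{device}: {avg_ms:.1f} ms -> {now_ms:.1f} ms ({ratio:.0f}x)")
# prints: naa.bla: 5.1 ms -> 434.6 ms (85x)
```

In other words, the average latency goes from about 5 ms to about 435 ms, roughly 85 times slower.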

A Linux VM on the same host shows the following results:

Notable is the 1800 ms latency!

If the array stops responding entirely, the only way to recover is to restart the host. This occurs across all arrays, regardless of whether they are internal or external. I have tried a second D2600 and a different SAS cable, with no change. Disabling the Windows write cache or the disk cache on the drives themselves makes no difference.

I am completely stuck at this stage and tearing my hair out. Any help would be much appreciated!