Let's say we're using ext4 (with dir_index enabled) to store around 3M files (averaging 750 KB each) and we need to decide which folder scheme to use.

In the first solution, we apply a hash function to the file and use a two-level folder layout (1 character for the first level and 2 characters for the second level). So if the hash of filex.for is abcde1234, we'll store it at /path/a/bc/abcde1234-filex.for.

In the second solution, we apply a hash function to the file and use a two-level folder layout (2 characters for the first level and 2 characters for the second level). So if the hash of filex.for is abcde1234, we'll store it at /path/ab/cd/abcde1234-filex.for.
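To make the two layouts concrete, here is a minimal sketch of how the path could be derived. It assumes the folder names are taken from consecutive leading characters of the hash; the function name `sharded_path` and its parameters are hypothetical, not part of nginx or any library.

```python
def sharded_path(root, file_hash, filename, levels):
    """Build a storage path from the leading characters of file_hash.

    levels is a tuple of folder-name widths, e.g. (1, 2) or (2, 2).
    Assumption: each level consumes the next characters of the hash.
    """
    parts = []
    pos = 0
    for width in levels:
        parts.append(file_hash[pos:pos + width])
        pos += width
    return "/".join([root, *parts, f"{file_hash}-{filename}"])


# First solution: 1 character, then 2 characters
print(sharded_path("/path", "abcde1234", "filex.for", (1, 2)))
# /path/a/bc/abcde1234-filex.for

# Second solution: 2 characters, then 2 characters
print(sharded_path("/path", "abcde1234", "filex.for", (2, 2)))
# /path/ab/cd/abcde1234-filex.for
```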

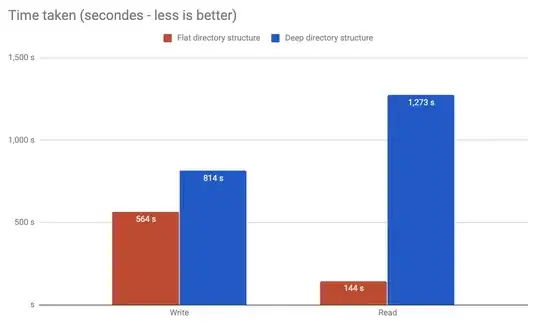

With the first solution we'll have the scheme /path/[16 folders]/[256 folders], with an average of about 732 files per folder (the last-level folder, where the files actually reside).

With the second solution we'll have /path/[256 folders]/[256 folders], with an average of about 45 files per folder.
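The per-folder averages come from the following arithmetic, sketched here under the assumptions that the hash is hexadecimal (16 possible values per character) and that files spread evenly across folders. The helper name `files_per_leaf` is hypothetical.

```python
TOTAL_FILES = 3_000_000
HEX_VALUES = 16  # assumption: hexadecimal hash, 16 possible values per character


def files_per_leaf(total_files, levels):
    """Return (number of last-level folders, average files in each)."""
    leaf_dirs = 1
    for width in levels:
        leaf_dirs *= HEX_VALUES ** width
    return leaf_dirs, total_files / leaf_dirs


for levels in [(1, 2), (2, 2)]:
    dirs, avg = files_per_leaf(TOTAL_FILES, levels)
    print(f"levels={levels}: {dirs} leaf folders, ~{int(avg)} files each")
# levels=(1, 2): 4096 leaf folders, ~732 files each
# levels=(2, 2): 65536 leaf folders, ~45 files each
```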

Considering we're going to write/unlink/read files (but mostly read) in this scheme a lot (basically the nginx caching system), does it matter, performance-wise, which of the two solutions we choose?

Also, what tools could we use to check/test this setup?