The more I look at it, the more I'm inclined to think that there's a problem with the data collection.

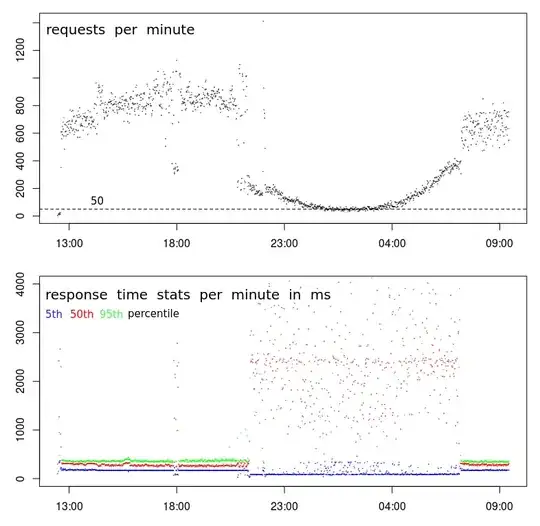

First off, there is something really weird going on with your TPS. While the overall pattern looks normal, there is a very sharp break at about 9pm, and another at about 7am. A normal chart would be much smoother during the transition to and from off-peak hours.

That suggests a change in the traffic profile, and that you possibly have two distinct types of clients:

- One that operates only between 7am(ish) and 9pm(ish), at high volumes, and

- another that probably operates around the clock, at lower volumes.

The second hint is around 18:00. Most of the time before and after, we have the high-volume profile: high request rate and low latency. But at around 18:00 the rate suddenly drops from 800-1000 RPM to less than 400 RPM. What could possibly cause that?
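One way to pin down exactly when such breaks happen is to scan the per-minute request counts for abrupt jumps. This is only a rough sketch: the `find_breaks` helper, the 2x threshold, and the sample numbers are all made up for illustration and are not taken from your data.

```python
def find_breaks(rates, ratio=2.0):
    """Flag abrupt changes in request rate.

    rates: list of (label, requests_per_minute) in time order.
    Returns (label, previous_rate, current_rate) for every interval whose
    rate differs from the previous one by at least `ratio` times.
    The 2x default is an arbitrary illustrative threshold.
    """
    breaks = []
    for (_, prev), (label, cur) in zip(rates, rates[1:]):
        lo, hi = sorted((prev, cur))
        # A change from or to zero counts as a break if anything was seen.
        if hi > 0 and (lo == 0 or hi / lo >= ratio):
            breaks.append((label, prev, cur))
    return breaks


# Illustrative numbers only, shaped like the 18:00 dip described above.
sample = [("17:58", 900), ("17:59", 880), ("18:00", 380),
          ("18:01", 390), ("18:02", 870)]
for label, prev, cur in find_breaks(sample):
    print(f"{label}: {prev} -> {cur} RPM")
```

A smooth transition to off-peak hours would produce no flagged intervals, while a client switching on or off would.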

The third hint is the step-down in the 5th-percentile response times. I actually prefer to look at the minimum response times (though the 5th percentile is possibly better) for two reasons: it approximates the service time (i.e. response time minus queueing), and response times tend to follow a Weibull distribution, which means that the mode (the most common value) sits just above the minimum.

So the step-down in the 5th percentile says to me that there is a sudden break in the series, and the service time has actually dropped even though both the variance and the average response times have greatly increased.
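If you want to reproduce these series yourself, something like the following would do. It is a minimal sketch that assumes you have already parsed the log into `(epoch_seconds, request_time_seconds)` pairs; the function names and the nearest-rank percentile method are my choices, not anything your tooling necessarily uses.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def per_minute_summary(samples):
    """Group samples into one-minute buckets.

    samples: iterable of (epoch_seconds, request_time_seconds) pairs
             (assumed to be parsed from the access log beforehand).
    Returns {minute_start: (requests_per_minute, p5, mean)}.
    """
    buckets = defaultdict(list)
    for ts, rt in samples:
        buckets[int(ts // 60) * 60].append(rt)
    return {
        minute: (len(rts), percentile(rts, 5), sum(rts) / len(rts))
        for minute, rts in sorted(buckets.items())
    }
```

Plotting the 5th percentile and the mean side by side per minute makes it easy to see when the floor drops while the average rises.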

Next steps

At this stage I would do a deep dive into the logs to find out what is different about the 18:00 low-volume samples compared to the high-volume samples before and after it.

I would look for:

- differences in geographic location (in case network latency is affecting the $request_time)

- differences in URL (should be none)

- differences in HTTP method (POST/GET) (should be none)

- repeated requests from the same IP

- and any other differences...
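The comparison above could be sketched roughly as follows, assuming the log lines have already been parsed into dicts. The field names (`ip`, `method`, `url`, `country`) and the `compare_field` helper are hypothetical; adapt them to whatever your log format actually contains.

```python
from collections import Counter


def compare_field(low_window, high_window, field, top=5):
    """Compare how one log field is distributed in two sets of records.

    low_window / high_window: lists of dicts, one per parsed log line
    (for example the 18:00 samples versus the samples before and after).
    Returns up to `top` rows of (value, share_in_low, share_in_high),
    ordered by the largest difference in share.
    """
    low = Counter(r[field] for r in low_window)
    high = Counter(r[field] for r in high_window)
    n_low = max(len(low_window), 1)    # avoid division by zero
    n_high = max(len(high_window), 1)
    rows = [(v, low[v] / n_low, high[v] / n_high)
            for v in set(low) | set(high)]
    rows.sort(key=lambda row: abs(row[1] - row[2]), reverse=True)
    return rows[:top]


# Hypothetical usage, once both windows are loaded:
# for field in ("country", "url", "method", "ip"):
#     print(field, compare_field(low_window, high_window, field))
```

A single IP or URL that dominates one window but barely appears in the other would show up at the top of the list.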

BTW, the 18:00 "event" is enough evidence for me that this has nothing to do with data center congestion/activity. For that to be true, the congestion would have to cause the drop in TPS. That is possible at 18:00, but it is extremely unlikely to cause a sustained, smoothly curving drop in TPS for 10 hours between 9pm and 7am.