I'm having one site that when hit with a spider just goes off the handles. Normally everything seems fine. We have a nagios montior to report back when CPU is over 80%.

When we get the warnings, I begin watching logs via sudo tail -f access_log. Most times, it's a spider.

It seems to get caught in one URL that the spider has packed with an infinite number of query string values.

What I've tried:

I've since put Disallow: *?* in robots.txt.

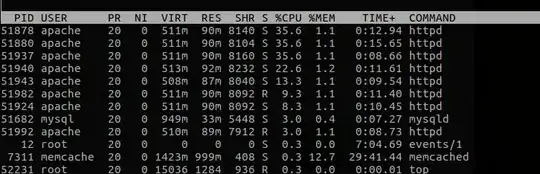

Current top reads:

Question:

Are there other methods that I could use to tell spiders to calm down on our site? On the high memory use httpd processes, can I tell which pages these are calling in order to isolate the troubled spots on this site?

That is, how do I find and isolate the trouble maker?

Errata: We're running Apache 2.2.15 on RHEL 6.8 with memcache.

# apachectl -V

Server version: Apache/2.2.15 (Unix)

Server built: Feb 4 2016 02:44:09

Server loaded: APR 1.3.9, APR-Util 1.3.9

Compiled using: APR 1.3.9, APR-Util 1.3.9

Architecture: 64-bit

Server MPM: Prefork

threaded: no

forked: yes (variable process count)