Disclaimer: The same advice applies to all services pushing more than 10 Gbps, including but not limited to load balancers, caching servers and webservers (HAProxy, Varnish, nginx, tomcat, ...)

What you want to do is wrong, don't do it

Use a CDN instead

CDNs are meant to deliver cacheable static content. Use the right tool for the job (Akamai, MaxCDN, Cloudflare, CloudFront, ...)

Any CDN, even a free one, will do better than whatever you can achieve on your own.

Scale horizontally instead

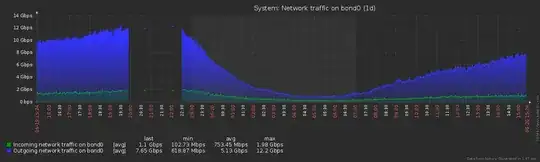

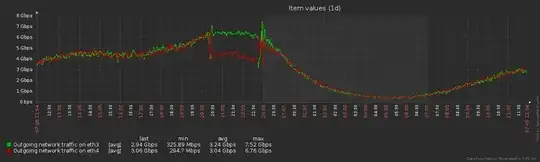

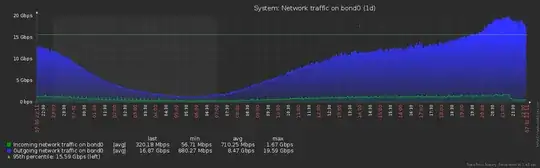

I expect a single server to handle 1-5 Gbps out-of-the-box without much tweaking (note: serving static files only). 8-10 Gbps is usually within reach with advanced tuning.

Nonetheless there are many hard limits to what a single box can take. You should prefer to scale horizontally.

Run a single box, try things, measure, benchmark, optimize... until that box is reliable and dependable and its capabilities are well determined, then put more boxes like it with a global load balancer in front.

There are a few global load balancing options: most CDNs can do that, DNS round-robin, ELB/Google load balancers...

Let's ignore the good practices and do it anyway

Understanding the traffic pattern

WITHOUT REVERSE PROXY

[request ] user ===(rx)==> backend application

[response] user <==(tx)=== [processing...]

There are two things to consider: the bandwidth and the direction (emission or reception).

Small files are 50/50 tx/rx because the HTTP headers and TCP overhead are bigger than the file content.

Big files are 90/10 tx/rx because the request size is negligible compared to the response size.

WITH REVERSE PROXY

[request ] user ===(rx)==> nginx ===(tx)==> backend application

[response] user <==(tx)=== nginx <==(rx)=== [processing...]

The reverse proxy is relaying all messages in both directions. The load is always 50/50 and the total traffic is doubled.
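To put numbers on the two diagrams, here is a minimal sketch that counts the bytes the user-facing box receives and transmits for one request/response exchange. The function names and byte counts are made up for illustration, and TCP/HTTP overhead is assumed to be already included in the sizes you pass in.

```python
def nic_load(request_bytes, response_bytes, proxied):
    """Return (rx, tx) bytes seen by the user-facing box for one exchange."""
    if proxied:
        # The proxy receives the request from the user and the response from
        # the backend, and transmits the request to the backend and the
        # response to the user: everything crosses the box twice.
        rx = request_bytes + response_bytes
        tx = request_bytes + response_bytes
    else:
        # Without a proxy the box only receives requests and sends responses.
        rx = request_bytes
        tx = response_bytes
    return rx, tx


def tx_share(rx, tx):
    """Fraction of the total traffic that is transmission."""
    return tx / (rx + tx)


direct = nic_load(500, 4500, proxied=False)
relayed = nic_load(500, 4500, proxied=True)
print("direct :", direct, tx_share(*direct))
print("proxied:", relayed, tx_share(*relayed))
```

With a 500-byte request and a 4500-byte response, the direct case is 90% tx, while the proxied case is 50/50 and moves twice as many bytes in total.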

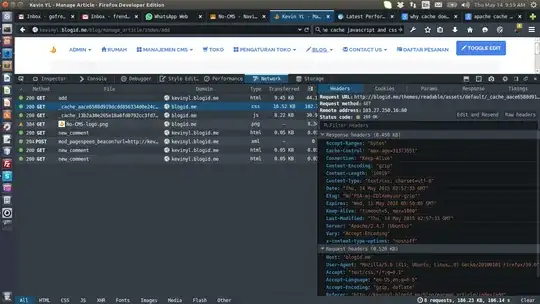

It gets more complex with caching enabled. Requests may be diverted to the hard drive, whose data may be cached in memory.

Note: I'll ignore the caching aspect in this post. We'll focus on getting 10-40 Gbps on the network. Knowing whether the data comes from the cache and optimizing that cache is another topic; the data is pushed over the wire either way.

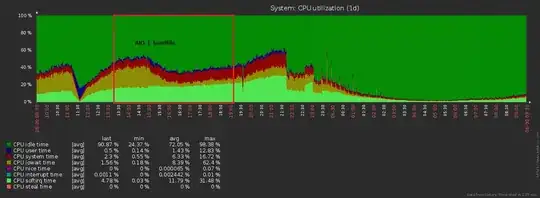

Monocore limitations

Load balancing is monocore (especially TCP balancing). Adding cores doesn't make it faster but it can make it slower.

The same goes for HTTP balancing in simple modes (e.g. IP, URL or cookie based): the reverse proxy reads headers on the fly; it doesn't parse or process HTTP requests in a strict sense.

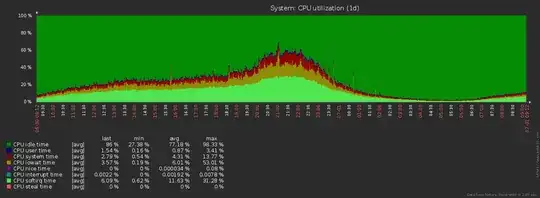

In HTTPS mode, the SSL decryption/encryption is more intensive than everything else required for the proxying. SSL traffic can and should be split over multiple cores.

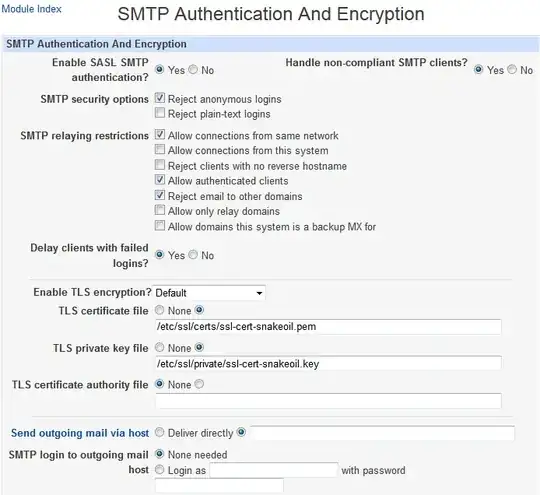

SSL

Given that you do everything over SSL, you'll want to optimize that part.

Encrypting and decrypting 40 Gbps on the fly is quite an achievement.

Take a latest-generation processor with the AES-NI instructions (used for SSL operations).

Tune the algorithm used by the certificates. There are many algorithms. You want the one that is the most efficient on your CPU (do benchmarking) WHILE being supported by clients AND being just secure enough (no unnecessary over-encryption).
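Before benchmarking anything, it's worth confirming that the CPU actually advertises AES-NI. A minimal sketch, assuming an x86 Linux box where `/proc/cpuinfo` has a `flags` line (the helper name `has_aes_ni` is mine):

```python
def has_aes_ni(cpuinfo_text):
    """Return True if the first 'flags' line of /proc/cpuinfo lists 'aes'."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # The line looks like "flags : fpu vme ... aes ...".
            flags = line.split(":", 1)[1].split()
            return "aes" in flags
    return False


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the cpuinfo text (Linux only)."""
    with open(path) as f:
        return f.read()
```

Calling `has_aes_ni(read_cpuinfo())` gives the answer for the current machine. For the benchmarking itself, `openssl speed -evp aes-128-gcm` is one way to compare ciphers on a given CPU.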

IRQ and core pinning

The network card generates an interrupt (IRQ) when there is new data to read, and the CPU is pre-empted to handle the queue immediately. This operation runs in the kernel and/or the device drivers and it's strictly monocore.

It can be the greatest CPU consumer, with billions of packets going out in every direction.

Assign the network card a unique IRQ number and pin it down to a specific core (see linux or BIOS settings).

Pin the reverse proxy to other cores. We don't want these two things to interfere.
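On Linux, both kinds of pinning can be scripted. The sketch below is only an illustration: the IRQ number has to be looked up in `/proc/interrupts`, writing the mask needs root, a running irqbalance daemon may overwrite it, and the plain hex mask assumes fewer than 32 cores. The function names are mine.

```python
import os


def irq_affinity_mask(cores):
    """Hex CPU bitmask in the format read by /proc/irq/<irq>/smp_affinity."""
    mask = 0
    for core in cores:
        mask |= 1 << core  # bit N set means "core N may handle this IRQ"
    return format(mask, "x")


def pin_irq(irq, cores):
    """Pin one IRQ to the given cores (needs root)."""
    with open(f"/proc/irq/{irq}/smp_affinity", "w") as f:
        f.write(irq_affinity_mask(cores))


def pin_process(pid, cores):
    """Restrict a process (e.g. a proxy worker) to the given cores."""
    os.sched_setaffinity(pid, set(cores))
```

For example, `pin_irq(irq, [0])` followed by `pin_process(pid, [2, 3])` keeps the NIC interrupt on core 0 and the proxy on cores 2 and 3, where `irq` and `pid` are whatever your system reports.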

Ethernet Adapter

The network card is doing a lot of the heavy lifting. Not all devices and manufacturers are equal when it comes to performance.

Forget about the integrated adapter on motherboards (doesn't matter if server or consumer motherboard), they just suck.

TCP Offloading

TCP is a very intensive protocol in terms of processing (checksums, ACK, retransmission, reassembling packets, ...) The kernel is handling most of the work but some operations can be offloaded to the network card if it supports it.

We don't want just a relatively fast card, we want one with all the bells and whistles.

Forget about Intel, Mellanox, Dell, HP, whatever. They don't support all of that.

There is only one option on the table: SolarFlare -- The secret weapon of HFT firms and CDNs.

The world is split in two kinds of people: "The ones who know SolarFlare" and "the ones who do not". (the first set being strictly equivalent to "people who do 10 Gbps networking and care about every bit"). But I digress, let's focus :D

Kernel TCP tuning

There are options in sysctl.conf for kernel network buffers. What these settings do or don't do, I genuinely don't know.

net.core.wmem_max

net.core.rmem_max

net.core.wmem_default

net.core.rmem_default

net.ipv4.tcp_mem

net.ipv4.tcp_wmem

net.ipv4.tcp_rmem

Playing with these settings is the definitive sign of over-optimization (i.e. generally useless or counterproductive).

Exceptionally, that might make sense given the extreme requirements.

(Note: 40Gbps on a single box is over-optimization. The reasonable route is to scale horizontally.)
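If you do end up touching these, the usual rule of thumb for sizing a TCP buffer is the bandwidth-delay product: the number of bytes that must be in flight to keep one connection's pipe full. The sketch below computes it and reads the current values from `/proc/sys` (a sysctl name maps to a path by swapping dots for slashes). The helper names are mine, and the example figures are made up.

```python
def sysctl_path(name):
    """Map a sysctl name such as net.ipv4.tcp_rmem to its /proc/sys path."""
    return "/proc/sys/" + name.replace(".", "/")


def read_sysctl(name):
    """Return the current value(s) of a sysctl as a list of strings (Linux)."""
    with open(sysctl_path(name)) as f:
        return f.read().split()


def bdp_bytes(bandwidth_bps, rtt_ms):
    """Bandwidth-delay product in bytes for one connection."""
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bandwidth_bps * rtt_ms // 8000


# One connection filling a 10 Gbps link over a 50 ms round trip.
print(bdp_bytes(10_000_000_000, 50))  # 62500000 bytes, about 62.5 MB
```

Compare the result with `read_sysctl("net.ipv4.tcp_rmem")` and `read_sysctl("net.core.rmem_max")` before changing anything. With thousands of slower clients, each connection needs far less, which is one reason the defaults are often fine.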

Some Physical Limits

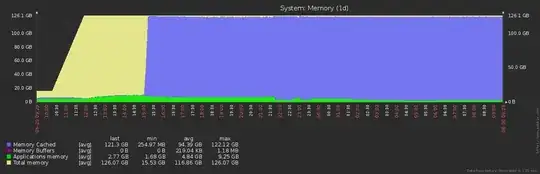

Memory bandwidth

Some numbers about memory bandwidth (mostly in GB/s): http://www.tweaktown.com/articles/6619/crucial-ddr4-memory-performance-overview-early-look-vs-ddr2-ddr3/index.html

Let's say the range is 150-300 Gbps for memory bandwidth (maximum limit in ideal conditions).

All packets have to be in the memory at some point. Just ingesting data at a 40 Gbps line rate is a heavy load on the system.

Will there be any power left to process the data? Well, let's not get our expectations too high on that. Just saying ^^
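A back-of-the-envelope way to see it: every byte crosses the memory bus more than once (the NIC writes it, the CPU reads it, maybe copies it, the NIC reads it back out). The number of passes below is purely an assumption for illustration, not a measurement.

```python
def memory_share(line_rate_gbps, passes, memory_gbps):
    """Fraction of memory bandwidth used if every byte crosses memory `passes` times."""
    return line_rate_gbps * passes / memory_gbps


# 40 Gbps of traffic, assumed 4 memory passes per byte, 150-300 Gbps of memory bandwidth.
for memory_gbps in (150, 300):
    share = memory_share(40, 4, memory_gbps)
    print(f"{memory_gbps} Gbps memory: {share:.0%} of the bus")
```

At the low end of the range, the assumed 4 passes already ask for more than the bus can give; at the high end, they eat roughly half of it.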

PCI-Express bus

PCIe 2.0 is 4 Gbps per lane. PCIe 3.0 is about 8 Gbps per lane (not all of it is available to the PCIe card).

A 40 Gbps NIC with a single Ethernet port is promising more than the PCIe bus can deliver if the slot is anything less than x8 on the v3.0 specification (8 lanes at 8 Gbps is 64 Gbps raw, whereas x4 is only 32 Gbps). On v2.0 it takes a full x16 slot.
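To check a given slot against a NIC's line rate, the per-lane figures can be turned into a small calculation. This sketch uses the published transfer rates and line encodings for PCIe 2.0 and 3.0; it ignores packet-level protocol overhead, so real throughput is somewhat lower. The function name is mine.

```python
# generation -> (transfer rate per lane in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_gbps(generation, lanes):
    """Usable bandwidth of a slot in Gbps, per direction, after line encoding."""
    rate, efficiency = PCIE_GENERATIONS[generation]
    return rate * efficiency * lanes


for generation in (2, 3):
    for lanes in (4, 8, 16):
        print(f"PCIe {generation}.0 x{lanes}: {pcie_gbps(generation, lanes):.1f} Gbps")
```

Compare the printed figure for your slot with the sum of the line rates of all ports on the card.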

Other

We could go over other limits. The point is that hardware has hard limits inherent to the laws of physics.

Software can't do better than the hardware it's running on.

The network backbone

All these packets have to go somewhere eventually, traversing switches and routers. 10 Gbps switches and routers are [almost] a commodity. The 40 Gbps ones are definitely not.

Also, the bandwidth has to be end-to-end so what kind of links do you have up to the user?

Last time I checked with my datacenter guy for a little 10M users side project, he was pretty clear that there would be only 2x 10 Gbps links to the internet at most.

Hard drives

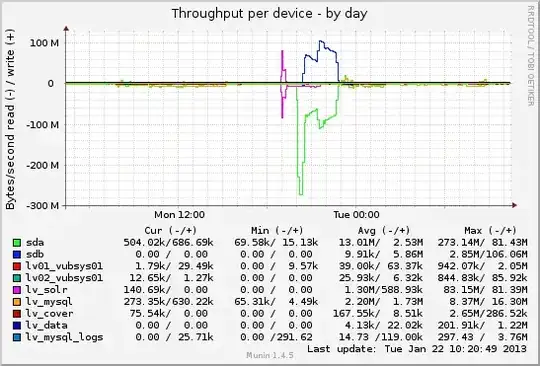

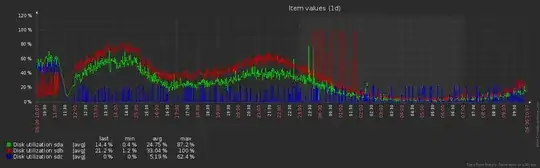

iostat -xtc 3

Metrics are split by read and write. Check for queue (< 1 is good), latency (< 1 ms is good) and transfer speed (the higher the better).

If the disk is slow, the solution is to put more AND bigger SSDs in RAID 10 (note that, within a given product line, SSD bandwidth generally increases with SSD size).
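For a rough idea of how many SSDs that means, here is a minimal sketch. It assumes reads are spread evenly over every drive in the array and that nothing is served from the memory cache, which is the worst case. The per-drive throughput is a placeholder; use the figure you measured with iostat.

```python
import math


def ssds_needed(target_gbps, per_ssd_mb_per_s):
    """Number of drives needed to read at the target network rate."""
    target_mb_per_s = target_gbps * 1000 / 8  # Gbps -> MB/s
    return math.ceil(target_mb_per_s / per_ssd_mb_per_s)


# Hypothetical drives sustaining 500 MB/s each.
print(ssds_needed(10, 500))  # 3
print(ssds_needed(40, 500))  # 10
```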

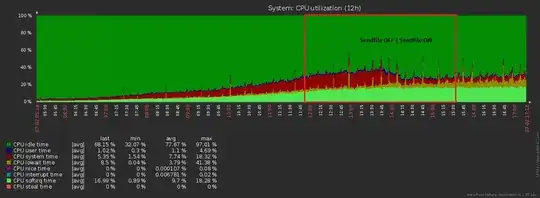

CPU choice

IRQ and other bottlenecks only run on one core so aim for the CPU with the highest single core performance (i.e. highest frequency).

SSL encryption/decryption needs the AES-NI instructions so aim for the latest revision of CPU only.

SSL benefits from multiple cores so aim for many cores.

Long story short: The ideal CPU is the newest one with the highest frequency available and many cores. Just pick the most expensive and that's probably it :D

sendfile()

Sendfile ON

Simply the greatest progress of modern kernels for high performance webservers.
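In nginx this is the `sendfile on;` directive. To show what the system call buys you, here is a small sketch using Python's `socket.sendfile()`, which uses `os.sendfile()` where the platform supports it. The wrapper name `send_file` is mine.

```python
import socket


def send_file(conn, path):
    """Send a whole file over a connected stream socket; return bytes sent."""
    with open(path, "rb") as f:
        # With os.sendfile() underneath, the kernel moves the data from the
        # page cache to the socket directly, with no read()/write() loop
        # copying it through user-space buffers. Where os.sendfile() is not
        # available, socket.sendfile() falls back to plain send().
        return conn.sendfile(f)
```

A server would call `send_file(conn, path)` on each accepted connection after writing the response headers.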

Final Note

1 SolarFlare NIC 40 Gbps (pin IRQ and core)

2 SolarFlare NIC 40 Gbps (pin IRQ and core)

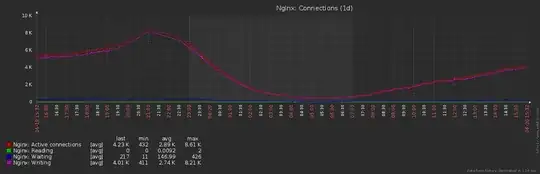

3 nginx master process

4 nginx worker

5 nginx worker

6 nginx worker

7 nginx worker

8 nginx worker

...

One thing pinned down to one CPU. That's the way to go.
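For the nginx workers, the pinning is done with the `worker_cpu_affinity` directive, which takes one bitmask per worker (rightmost bit is the first core). A minimal sketch that generates the directive, assuming zero-based core numbers as Linux reports them (the helper name is mine):

```python
def worker_cpu_affinity(worker_cores, total_cores):
    """Build an nginx worker_cpu_affinity line with one core per worker."""
    masks = []
    for core in worker_cores:
        # One bit per core, zero-padded to the number of cores in the box.
        masks.append(format(1 << core, f"0{total_cores}b"))
    return "worker_cpu_affinity " + " ".join(masks) + ";"


# 8-core box: cores 0-2 left to the NICs and the master, workers on cores 3-7.
print(worker_cpu_affinity([3, 4, 5, 6, 7], 8))
```

Set `worker_processes` to the same number of workers so that each mask is used.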

One NIC leading to the external world. One NIC leading to the internal network. Splitting responsibilities is always nice (though dual 40 Gbps NIC may be overkill).

That's a lot of things to fine-tune, some of which could be the subject of a small book. Have fun benchmarking all of that. Come back to publish the results.