I'm trying to run multi-threaded benchmarks on a set of isolated CPUs. To cut a long story short, I initially tried `isolcpus` and `taskset`, but hit problems. Now I'm experimenting with cpusets (cgroups) via `cset`.

I think the "simple" `cset shield` use case should work nicely. I have 4 cores, so I would like to use cores 1-3 for benchmarking (I've also configured these cores for adaptive-ticks mode) and leave core 0 for everything else.

Following the tutorial here, it should be as simple as:

```
$ sudo cset shield -c 1-3
cset: --> shielding modified with:
cset: "system" cpuset of CPUSPEC(0) with 105 tasks running
cset: "user" cpuset of CPUSPEC(1-3) with 0 tasks running
```
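To double-check where tasks landed without relying on cset's own bookkeeping, the kernel exposes each thread's cpuset in `/proc/<pid>/task/<tid>/cpuset`. The sketch below tallies threads per cpuset. It assumes a kernel built with cpuset support, and it assumes cset's sets appear as `/system` and `/user` in that file; the helper name `count_by_cpuset` is hypothetical and not part of cset.

```shell
#!/bin/sh
# Tally every thread on the system by the cpuset the kernel reports for it.
# Assumption: cset's sets show up as "/system" and "/user" (paths relative
# to the cpuset hierarchy root). Threads that exit mid-scan are skipped.

count_by_cpuset() {
    for f in /proc/[0-9]*/task/[0-9]*/cpuset; do
        cat "$f" 2>/dev/null
    done | sort | uniq -c | sort -rn
}

count_by_cpuset
```

Each output line is a thread count followed by a cpuset path, so after shielding one would hope to see no ordinary threads under `/user`.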

So now we have an isolated "shield" on cores 1-3 (the `user` cpuset), and core 0 runs everything else (the `system` cpuset).

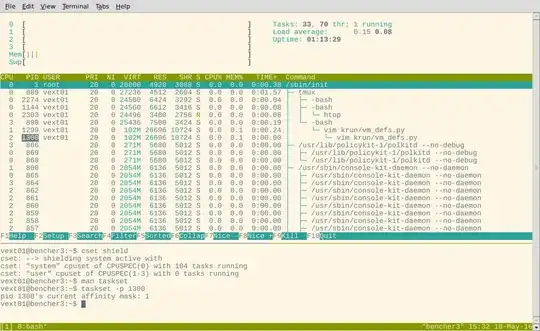

Alright, looks good so far. Now let's look at htop. The processes should all have been migrated onto CPU 0:

Huh? Some of the processes are shown as running on the shielded cores. To rule out an htop bug, I also used taskset to inspect the affinity mask of one of the processes that htop shows in the shield.
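htop's CPU column and `taskset -p` answer different questions. As far as I know, htop shows the CPU a thread *last executed on* (field 39, `processor`, of `/proc/<pid>/stat`), while `taskset -p` reports the set of CPUs the thread is *allowed* to run on (also visible as `Cpus_allowed_list` in `/proc/<pid>/status`). A minimal sketch that prints both for every thread of a process, assuming a Linux `/proc`; the script name `show-threads.sh` and the function `show_threads` are hypothetical:

```shell
#!/bin/sh
# For every thread of a process, print the CPU it last ran on (stat field 39)
# next to its allowed-CPU list (what taskset reports).
# Usage: sh show-threads.sh PID   (defaults to the shell's own PID)

show_threads() {
    pid=$1
    for task in /proc/"$pid"/task/*; do
        tid=${task##*/}
        # Field 2 of stat is "(comm)" and may contain spaces, so strip
        # everything up to the last ") " first; field 39 then becomes $37.
        last_cpu=$(sed 's/.*) //' "$task/stat" 2>/dev/null | awk '{print $37}')
        allowed=$(awk '/^Cpus_allowed_list:/ {print $2}' "$task/status" 2>/dev/null)
        comm=$(cat "$task/comm" 2>/dev/null)
        printf '%s\t%s\tlast_cpu=%s\tallowed=%s\n' "$tid" "$comm" "$last_cpu" "$allowed"
    done
}

show_threads "${1:-$$}"
```

For example, `sh show-threads.sh 864`. Because `processor` records the last CPU used, a thread that has been sleeping since the shield was created may still report its old CPU there, even if its allowed list has changed.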

Maybe those tasks were unmovable? Let's pick an arbitrary process that htop shows running on CPU 3 (which should be in the shield) and check whether cset places it in the system cpuset:

```
$ cset shield -u -v | grep 864
root    864    1 Soth [gmain]
vext01 2412 2274 Soth grep 864
```

Yep, according to cset it's in the `system` cpuset. So htop and cset disagree.
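To get the kernel's own answer rather than cset's, the cpuset of each thread can be read straight from `/proc/<pid>/task/<tid>/cpuset`, which also helps when a PID such as 864 belongs to a multi-threaded process. A minimal sketch, assuming a kernel with cpuset support; the helper `show_cpusets` and the script name `show-cpusets.sh` are hypothetical:

```shell
#!/bin/sh
# For every thread of a process, print TID, thread name and the cpuset the
# kernel says it belongs to. Usage: sh show-cpusets.sh PID (defaults to $$).

show_cpusets() {
    pid=$1
    for task in /proc/"$pid"/task/*; do
        tid=${task##*/}
        comm=$(cat "$task/comm" 2>/dev/null)
        # Path relative to the cpuset hierarchy root (assumed to be e.g.
        # "/system" for cset's sets); empty if cpusets are unsupported.
        cpuset=$(cat "$task/cpuset" 2>/dev/null)
        printf '%s\t%s\t%s\n' "$tid" "$comm" "$cpuset"
    done
}

show_cpusets "${1:-$$}"
```

Running `sh show-cpusets.sh 864` and comparing its output with cset's listing shows whether the two views of cpuset membership agree.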

So what's going on here? Who do I trust: CPU affinities (htop/taskset) or cset?

I suspect that you are not supposed to use cset and affinities together. Perhaps the shield is working fine, and I should ignore the affinity masks and htop output. Either way, I find this confusing. Can someone shed some light?