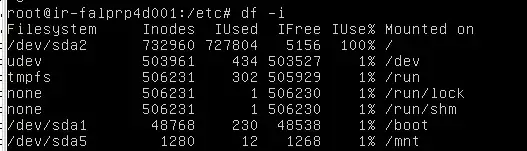

df -i

| Filesystem | Inodes | IUsed | IFree | IUse% | Mounted on |
|---|---|---|---|---|---|
| /dev/sda2 | 732960 | 727804 | 5156 | 100% | / |

Only these two directories have a high inode count; all the others are very low. What can be done to free up inodes?

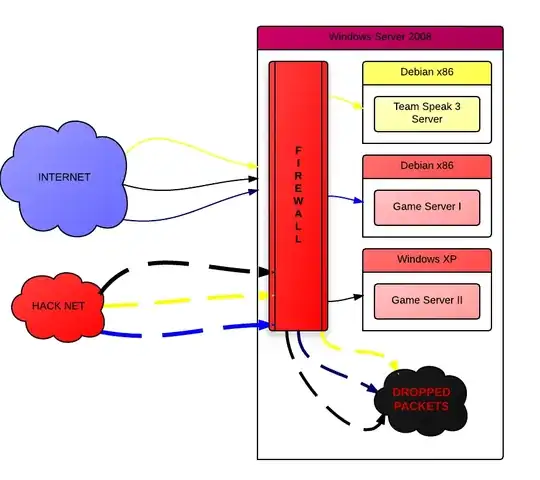

/proc: 10937 inodes

/sys: 22504 inodes
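
For reference, per-directory counts like these can be gathered with a short shell function. This is only a sketch under a few assumptions: the function name `inode_usage` is made up for illustration, it relies on GNU `find` (for `-printf`), and it counts directory entries, so hard-linked files are counted once per name. It uses `-xdev` so that separately mounted filesystems such as `/proc` and `/sys` are not descended into and are not counted against `/`.

```shell
#!/bin/sh
# Hypothetical helper: count directory entries under each top-level
# entry of a starting directory, without leaving its filesystem.
inode_usage() {
    root="${1:-/}"
    # -xdev: do not descend into directories on other filesystems,
    #        so mounts like /proc and /sys contribute a single entry each.
    # %P:    path relative to the starting directory (GNU find only).
    # cut keeps the first path component; uniq -c counts entries per component.
    find "$root" -xdev -printf '%P\n' 2>/dev/null |
        cut -d/ -f1 |
        sort |
        uniq -c |
        sort -rn
}
```

Running `inode_usage / | head -n 10` as root should list the ten top-level directories holding the most entries on the root filesystem; the same function can then be pointed at the largest one (for example `inode_usage /var`) to drill down. The line with an empty name is the starting directory itself.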

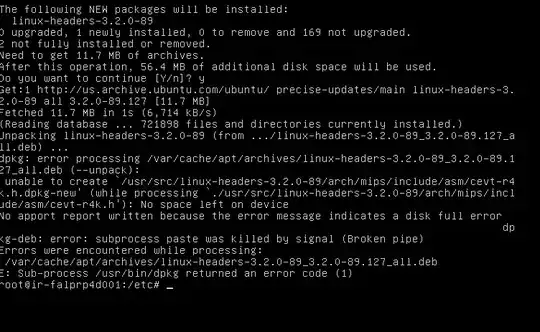

`apt-get -f install` fails, saying there is no space left.

df -i output image

apt-get -f install output error image

inodes search output image -

/var/log is only 26 MB (the largest directory under /var).