I have several boxes running Debian 8, dovecot and btrfs. I'm using btrfs snapshots for short term backup. For this purpose I keep 14 snapshots of the mail subvolume.

Performance is OK until it comes to snapshot removal: as soon as btrfs-cleaner kicks in, everything is almost halted. This goes up to drbd losing connectivity to the secondary node due to timeout. This happens on several boxes so it's unlikely to be a hardware related issue.

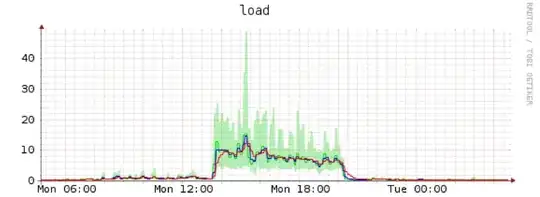

Spike is where snapshot removal takes place:

I cannot believe that this is normal behaviour. So my question is: has anyone experience with this issue, any idea on how to solve or debug it, or as last resort how to avoid it by doing things differently?

Systems are Dell R710, Debian 8, Kernel 3.16, Mount options: rw,noatime,nossd,space_cache

Edit: More system information

Dual R710, 24GB RAM, H700 w/ writecache, 8x1TB 7.2k Sata disks as RAID6, DRBD protocol B, dedicated 1Gb/s link for DRBD

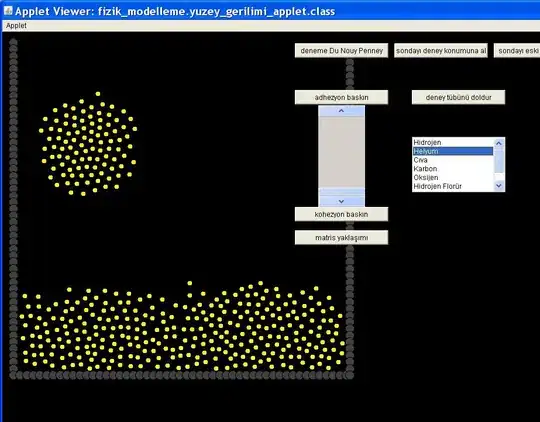

Edit: Removing Snapshot content through rm -rf. Throttled for IO, otherwise it would have run away like the btrfs-cleaner did:

I would conclude that this is way worse io-wise. The only advantage is that I can control the IO load of the userspace rm.

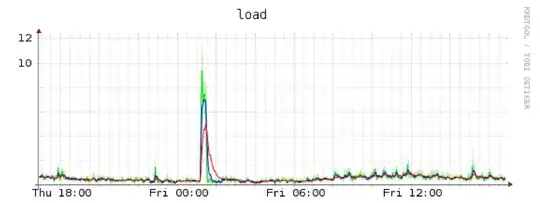

And another edit: Iops massacree