Here is what I've learned doing exactly what you are doing. I suggest using mbuffer. In my environment it only helped on the receiving end; without it, the send would slow down while the receive caught up.

Some examples:

http://everycity.co.uk/alasdair/2010/07/using-mbuffer-to-speed-up-slow-zfs-send-zfs-receive/

Homepage with options and syntax

http://www.maier-komor.de/mbuffer.html

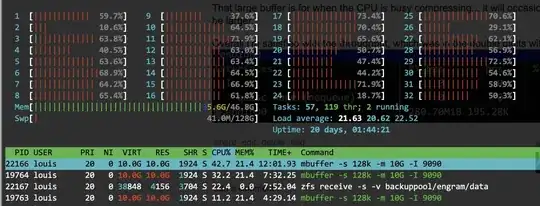

The send command from my replication script:

zfs send -i tank/pool@oldsnap tank/pool@newsnap | ssh -c arcfour remotehostip "mbuffer -s 128k -m 1G | zfs receive -F tank/pool"

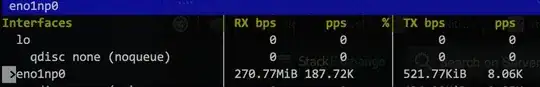

This runs mbuffer on the remote host as a receive buffer so the send runs as fast as possible. I'm on a 20 Mbit line and found that having mbuffer on the sending side as well didn't help. Also, my main ZFS box is using all of its RAM as cache, so giving even 1 GB to mbuffer would require me to reduce some cache sizes.
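For illustration, here is a minimal sketch of how that one-liner could be wrapped in a small script. This is not the actual replication script; the function names `build_send_cmd` and `replicate` and the `DRY_RUN` switch are made up for the example, and the dataset, host, and buffer values are simply the ones from the command above.

```shell
#!/bin/sh
# Sketch only: wraps the incremental send shown above.
# All names below are placeholders; adjust to your own pool and host.
POOL_FS="tank/pool"      # dataset name taken from the example command
REMOTE="remotehostip"    # placeholder host, as in the example command
BLOCK="128k"             # mbuffer block size (-s)
BUF_MEM="1G"             # mbuffer memory on the receiving side (-m)

# Print the full pipeline for an old/new snapshot pair.
build_send_cmd() {
    old="$1"
    new="$2"
    printf '%s\n' "zfs send -i ${POOL_FS}@${old} ${POOL_FS}@${new} | ssh -c arcfour ${REMOTE} \"mbuffer -s ${BLOCK} -m ${BUF_MEM} | zfs receive -F ${POOL_FS}\""
}

# With DRY_RUN=1 (the default here) the pipeline is only printed;
# with DRY_RUN=0 it is handed to sh for execution.
replicate() {
    cmd=$(build_send_cmd "$1" "$2")
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$cmd"
    else
        sh -c "$cmd"
    fi
}
```

Calling `replicate oldsnap newsnap` prints the command so you can check it before running it for real with `DRY_RUN=0 replicate oldsnap newsnap`.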

Also, and this isn't really my area of expertise, I think it's best to just let ssh do the compression. In your example I think you are using bzip and then piping through ssh; if ssh compression is also turned on (`-C`, or `Compression yes` in the config; stock OpenSSH leaves it off unless you enable it), SSH is trying to compress an already compressed stream. I ended up using arcfour as the cipher as it's the least CPU intensive, and that was important for me. You may have better results with another cipher, but I'd definitely suggest letting SSH do the compression (or turning off ssh compression if you really want to use something it doesn't support).
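To make the two choices concrete, here is a rough sketch that prints the transport half of the pipeline for each case. The function name `transport_cmd` and its mode names are invented for this example, and `bzip2` is only an assumption about what the external compressor would be. `ssh -C` and `-o Compression=no` are standard OpenSSH options.

```shell
#!/bin/sh
# Sketch only: pick exactly one place to compress, never both.
#   mode "ssh"   -> ssh compresses the stream itself (-C)
#   mode "bzip2" -> compress outside ssh and switch ssh compression off
transport_cmd() {
    mode="$1"
    remote="$2"
    recv='zfs receive -F tank/pool'   # receive side from the example above
    case "$mode" in
        ssh)
            printf '%s\n' "ssh -C ${remote} \"${recv}\""
            ;;
        bzip2)
            printf '%s\n' "bzip2 -c | ssh -o Compression=no ${remote} \"bzip2 -dc | ${recv}\""
            ;;
        *)
            echo "unknown mode: ${mode}" >&2
            return 1
            ;;
    esac
}
```

The printed string is what would follow `zfs send ... |` on the sending host; which mode is faster depends on CPU and link speed, so it is worth timing both.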

What's really interesting is that using mbuffer when sending and receiving on localhost speeds things up as well:

zfs send tank/pool@snapshot | mbuffer -s 128k -m 4G -o - | zfs receive -F tank2/pool

I found that 4 GB for localhost transfers seems to be the sweet spot for me. It just goes to show that zfs send/receive works best with a steady stream and doesn't really like latency or any other pauses.

Just my experience, hope this helps. It took me a while to figure all this out.