I have: CentOS 6.7

Output of `grub-install -v`:

    grub-install (GNU GRUB 0.97)

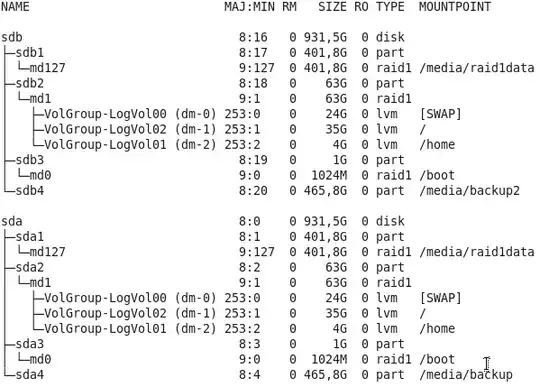

Output of `lsblk`:

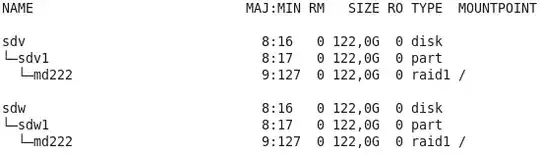

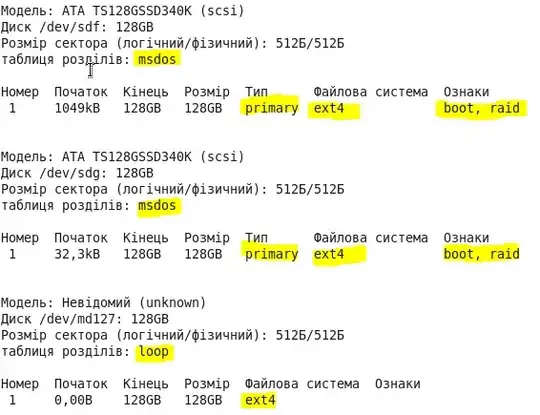

Two new 128 GB SSDs

A live USB stick with Parted_Magic_2015.03.06
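For step 1 (the RAID 1 array on the two new SSDs), a hedged sketch of what could be run from the Parted Magic live USB is below. The device names `/dev/sdc` and `/dev/sdd` are assumptions that do not appear in the question (the real names would come from `lsblk`), and the filesystem label is a hypothetical choice. Because the machine boots with GRUB 0.97, the sketch uses md metadata 1.0 and puts ext4 directly on the array. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch of step 1, run as root from the live USB.
# ASSUMPTIONS: the new SSDs are /dev/sdc and /dev/sdd (hypothetical names;
# check with lsblk first, because these commands ERASE the target disks).
# DRY_RUN=1 (the default) only prints the commands; DRY_RUN=0 runs them.
set -e
NEW_DISKS="/dev/sdc /dev/sdd"

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# MBR (msdos) label with one partition spanning each disk,
# flagged as a Linux RAID member.
for disk in $NEW_DISKS; do
    run parted -s "$disk" mklabel msdos mkpart primary 1MiB 100% set 1 raid on
done

# Metadata 1.0 keeps the md superblock at the END of each member, so GRUB 0.97
# can read either partition as a plain filesystem. The mdadm default (1.2)
# puts it near the start, which GRUB legacy cannot boot from.
run mdadm --create /dev/md222 --level=1 --raid-devices=2 --metadata=1.0 \
    /dev/sdc1 /dev/sdd1

# A plain ext4 filesystem directly on the array (no LVM, which GRUB 0.97
# cannot read). The label "md222root" is a hypothetical choice.
run mkfs.ext4 -L md222root /dev/md222
```

The initial resync of the new mirror runs in the background and can be watched in `/proc/mdstat`.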

`/boot/grub/device.map`:

    # this device map was generated by anaconda
    (hd0) /dev/sda
    (hd1) /dev/sdb
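GRUB 0.97 addresses disks through BIOS-style names such as `(hd0)`, which `device.map` ties to Linux devices. A common way to make both halves of a RAID 1 mirror bootable with GRUB legacy is to map each disk in turn as `(hd0)` in the GRUB shell and run `setup` on it, so whichever disk the BIOS starts from finds its files on `(hd0,0)`. The sketch below assumes the new SSDs are `/dev/sdc` and `/dev/sdd` (hypothetical), that each has one partition belonging to md222 with `/boot/grub` on it, and that it is run inside a chroot of the migrated CentOS system, which provides the `grub` shell.

```shell
#!/bin/sh
# Sketch: install GRUB legacy (0.97) into the MBR of BOTH new SSDs.
# ASSUMPTIONS: /dev/sdc and /dev/sdd are hypothetical names; partition 1 of
# each disk is a member of md222 and contains /boot/grub.

# Print GRUB shell commands. Each disk is temporarily mapped as (hd0),
# because that is the name it gets when the BIOS boots from it.
grub_batch_script() {
    for disk in "$@"; do
        echo "device (hd0) $disk"
        echo "root (hd0,0)"
        echo "setup (hd0)"
    done
    echo "quit"
}

# DRY_RUN=1 (the default) only prints the script; DRY_RUN=0 feeds it to grub.
if [ "${DRY_RUN:-1}" = 1 ]; then
    grub_batch_script /dev/sdc /dev/sdd
else
    grub_batch_script /dev/sdc /dev/sdd | grub --batch
fi
```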

`/boot/grub/grub.conf`:

    default=1
    timeout=5
    splashimage=(hd0,2)/grub/splash.xpm.gz
    hiddenmenu
    title CentOS (4.1.10-1.el6.elrepo.x86_64)
    root (hd0,2)
    kernel /vmlinuz-4.1.10-1.el6.elrepo.x86_64 ro root=/dev/mapper/VolGroup-LogVol02 LANG=uk_UA.UTF-8 rd_NO_LUKS KEYBOARDTYPE=pc KEYTABLE=us rd_LVM_LV=VolGroup/LogVol02 SYSFONT=latarcyrheb-sun16 rhgb crashkernel=128M quiet rd_MD_UUID=88b7c4d8:48557d19:3018c405:b427edf6 rd_LVM_LV=VolGroup/LogVol00 rd_NO_DM
    initrd /initramfs-4.1.10-1.el6.elrepo.x86_64.img
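The entry above reads the kernel from a separate `/boot` partition, `(hd0,2)`, and mounts root from LVM. Since GRUB 0.97 cannot read LVM, a merged layout on md222 would, under the assumption that md222 holds a plain ext4 filesystem that GRUB sees as `(hd0,0)`, need paths under `/boot` and different root options. The sketch below prints such an entry. The UUID arguments are placeholders (real values come from `blkid /dev/md222` and `mdadm --detail /dev/md222`); `root=UUID=`, `rd_MD_UUID=` and `rd_NO_LVM` are CentOS 6 dracut options, the other options are copied from the current entry, and dropping both `rd_LVM_LV` options assumes no LVM volume is needed at boot any more.

```shell
#!/bin/sh
# Sketch: print a grub.conf entry for booting from md222 after /boot has been
# merged into the root filesystem.
# ASSUMPTIONS: md222 holds plain ext4 (no LVM) and is (hd0,0) to GRUB;
# the UUID arguments are placeholders to be replaced with real values.
make_stanza() {
    kver=$1      # kernel version, e.g. 4.1.10-1.el6.elrepo.x86_64
    fs_uuid=$2   # filesystem UUID of /dev/md222 (from blkid)
    md_uuid=$3   # array UUID of /dev/md222, colon-separated mdadm format
    cat <<EOF
title CentOS ($kver) on md222
root (hd0,0)
kernel /boot/vmlinuz-$kver ro root=UUID=$fs_uuid rd_MD_UUID=$md_uuid rd_NO_LVM rd_NO_LUKS rd_NO_DM LANG=uk_UA.UTF-8 KEYBOARDTYPE=pc KEYTABLE=us SYSFONT=latarcyrheb-sun16 rhgb crashkernel=128M quiet
initrd /boot/initramfs-$kver.img
EOF
}

make_stanza 4.1.10-1.el6.elrepo.x86_64 FS-UUID-PLACEHOLDER MD-UUID-PLACEHOLDER
```

With the same layout, `splashimage` would become `(hd0,0)/boot/grub/splash.xpm.gz`, and `default=` has to point at the new entry (GRUB legacy counts entries from 0).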

I want:

1) Create a new mdadm RAID 1 array with a single partition, using the two new, unformatted 128 GB SSDs.

2) Copy md0 (/boot) and VolGroup-LogVol01 (dm-2, /home) into VolGroup-LogVol02 (dm-1).

3) Use a swap file for swap, activated through fstab.

5) Clone the current RAID onto the new one; the result should look something like this:

6) Update the boot configuration files.

7) Reboot the server and boot from the new md222.
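Steps 3 and 6 could look roughly like the sketch below, once the data has been copied onto md222. It assumes it is run as root inside the copied system (for example through a chroot from the live USB, as the first comment shows), that `/swapfile` with a size of 4 GiB is acceptable (both hypothetical choices), and that the UUID passed to `fstab_lines` is replaced with the one `blkid /dev/md222` reports. The SELinux type `swapfile_t` is an assumption about the CentOS 6 policy, and the kernel version is taken from the current `grub.conf`.

```shell
#!/bin/sh
# Sketch of steps 3 and 6. ASSUMPTIONS: run as root INSIDE the copied system,
# e.g. from the live USB after
#   for d in dev proc sys; do mount --bind /$d /mnt/new/$d; done
#   chroot /mnt/new
# /swapfile and its 4 GiB size are hypothetical; FS-UUID-PLACEHOLDER stands
# for the UUID that "blkid /dev/md222" reports.
# DRY_RUN=1 (the default) only prints the commands.
set -e
KVER=4.1.10-1.el6.elrepo.x86_64   # kernel version from the current grub.conf

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Entries for the new /etc/fstab: root by filesystem UUID plus the swap file.
# The old lines for /boot (md0), /home (LogVol01) and any LVM swap go away.
fstab_lines() {
    echo "UUID=$1 / ext4 defaults 1 1"
    echo "/swapfile swap swap defaults 0 0"
}

# Step 3: a 4 GiB swap file, readable only by root. swapfile_t is ASSUMED to
# be the SELinux type the policy expects; if chcon fails in the chroot
# (live kernel without SELinux), repeat it after the first boot.
make_swapfile() {
    run dd if=/dev/zero of=/swapfile bs=1M count=4096
    run chmod 600 /swapfile
    run mkswap /swapfile
    run chcon -t swapfile_t /swapfile
}

# Step 6: register the new array and rebuild the initramfs for the installed
# kernel (inside a chroot, "uname -r" would report the live USB's kernel).
update_boot_files() {
    run sh -c 'mdadm --detail --brief /dev/md222 >> /etc/mdadm.conf'
    run dracut -f "/boot/initramfs-$KVER.img" "$KVER"
}

make_swapfile
update_boot_files
fstab_lines FS-UUID-PLACEHOLDER
```

`ARRAY` lines in `/etc/mdadm.conf` for arrays the new system no longer uses would still have to be removed by hand.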

How can I do all this without damaging any data, and while keeping all file permissions and SELinux settings unchanged?
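On preserving permissions and SELinux labels: SELinux labels are stored in the `security.selinux` extended attribute, so a copy made as root with `rsync -aAXH --numeric-ids` keeps owners, modes, ACLs, hard links and labels. `--numeric-ids` matters when copying from a live USB, whose own `/etc/passwd` would otherwise be used to remap users. The sketch below covers steps 2 and 5 in one pass. The mount points `/mnt/old` and `/mnt/new` are hypothetical, it assumes md222 already holds an empty filesystem, and the old `/boot` array may get a different name than `/dev/md0` on the live system.

```shell
#!/bin/sh
# Sketch of steps 2 and 5, run as root from the live USB while the old
# system is NOT running. ASSUMPTIONS: /mnt/old and /mnt/new are hypothetical
# mount points, md222 already holds an empty ext4 filesystem, and the old
# /boot array may be named differently than /dev/md0 (check /proc/mdstat).
# DRY_RUN=1 (the default) only prints the commands.
set -e

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# -a: owners, groups, permissions, times, symlinks, device files
# -A: ACLs   -X: extended attributes (including security.selinux labels)
# -H: hard links   --numeric-ids: keep raw UIDs/GIDs instead of remapping
#     them through the live USB's own /etc/passwd and /etc/group
copy_tree() {
    run rsync -aAXH --numeric-ids "$1/" "$2/"
}

run mdadm --assemble --scan
run vgchange -ay VolGroup
run mkdir -p /mnt/old /mnt/new

# Old filesystems read-only, stacked the way the old system mounted them.
run mount -o ro /dev/mapper/VolGroup-LogVol02 /mnt/old
run mount -o ro /dev/md0 /mnt/old/boot
run mount -o ro /dev/mapper/VolGroup-LogVol01 /mnt/old/home
run mount /dev/md222 /mnt/new

# Without -x, rsync descends into /mnt/old/boot and /mnt/old/home, so one
# pass merges /boot and /home into the new root filesystem.
copy_tree /mnt/old /mnt/new
```

Labels can be spot-checked afterwards, for example with `getfattr -n security.selinux` on a few files in both trees. If they did not survive, creating `/mnt/new/.autorelabel` makes CentOS relabel the whole filesystem on the next boot, at the cost of resetting any custom labels to the policy defaults.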

I would be very grateful if someone could share their experience and write short step-by-step instructions for making these changes!