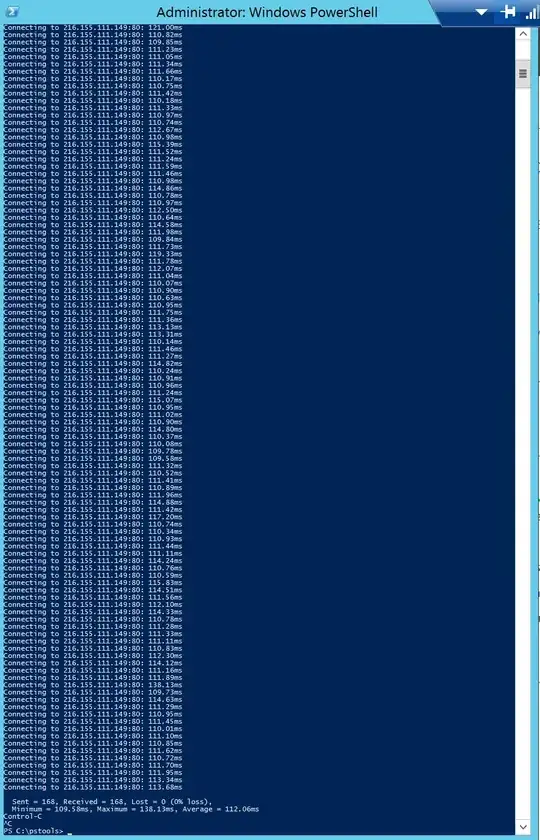

I have an issue with packets being dropped on the way to a third-party data center in Florida, USA. The issue only occurs on Azure Virtual Machines, regardless of which Azure data center the VM is in. I've run the same tests simultaneously from other, non-Azure networks, and there is no packet loss. The Azure Virtual Machines were "vanilla" / out of the box, with no software installed and no other customizations or changes.

I've already spoken to the network admins at the data center. The only packets they see are the ones that don't time out; the packets that time out never reach their firewall. So it sounds like something on the Azure side, especially since the packets consistently drop/time out from multiple Azure data centers/regions. Does anyone know how I might solve this?

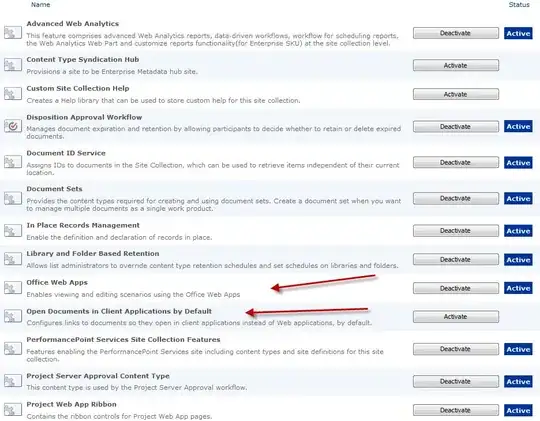

The test I was running was a continuous TCP ping (using tcping.exe) to port 80 (since ICMP is blocked on Azure):

```
tcping -t 216.155.111.149 80
tcping -t 216.155.111.151 80
tcping -t 216.155.111.146 80
```
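To run the same kind of test from several machines at once and get directly comparable loss figures, a minimal Python sketch of a TCP "ping" might look like the following. It is not how tcping.exe is implemented; it simply times a full TCP handshake per probe with `socket.create_connection`. The names `tcp_probe`, `tcp_ping`, and `ProbeSummary` are hypothetical. One assumption worth stating: only silent timeouts are counted as loss, because a refused connection (RST) still proves the SYN reached the far end.

```python
import socket
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> Tuple[str, Optional[float]]:
    """Attempt one TCP handshake (hypothetical helper) and report how it ended.

    Returns (status, rtt_ms). status is "open" (handshake completed),
    "refused" (far end answered with RST), "timeout" (no answer at all),
    or "error" (any other socket failure, e.g. no route to host).
    """
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connect succeeded; close immediately
        return "open", (time.perf_counter() - start) * 1000.0
    except ConnectionRefusedError:
        # An RST still means the SYN got there, so this is not packet loss.
        return "refused", (time.perf_counter() - start) * 1000.0
    except (socket.timeout, TimeoutError):
        # No SYN-ACK and no RST within the timeout: the probe was lost.
        return "timeout", None
    except OSError:
        return "error", None


@dataclass
class ProbeSummary:
    """Running totals for a series of probes (hypothetical helper)."""
    counts: Dict[str, int] = field(
        default_factory=lambda: {"open": 0, "refused": 0, "timeout": 0, "error": 0}
    )
    rtts_ms: List[float] = field(default_factory=list)

    @property
    def sent(self) -> int:
        return sum(self.counts.values())

    @property
    def loss_pct(self) -> float:
        # Only silent timeouts count as loss.
        return 100.0 * self.counts["timeout"] / self.sent if self.sent else 0.0


def tcp_ping(host: str, port: int, count: int = 10,
             interval: float = 1.0, timeout: float = 2.0) -> ProbeSummary:
    """Send `count` probes, `interval` seconds apart, and summarize them."""
    summary = ProbeSummary()
    for i in range(count):
        status, rtt = tcp_probe(host, port, timeout)
        summary.counts[status] += 1
        if rtt is not None:
            summary.rtts_ms.append(rtt)
        suffix = f" {rtt:.1f} ms" if rtt is not None else ""
        print(f"{host}:{port} probe {i + 1}: {status}{suffix}")
        if i < count - 1:
            time.sleep(interval)
    return summary
```

For example, calling `tcp_ping("216.155.111.149", 80, count=100)` at the same time on an Azure VM and on a non-Azure machine would give two `loss_pct` values that can be compared side by side.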

Further evidence that the problem is not at the third-party data center: I can run the same continuous TCP ping from my home and work computers and drop no packets. I also set up a VPN tunnel from the Azure VM to a VM at a non-Azure data center, and no packets are dropped. The only time packets are dropped is when the traffic goes out to the internet/WAN directly from Azure.

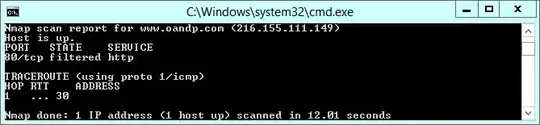

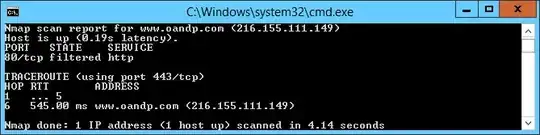

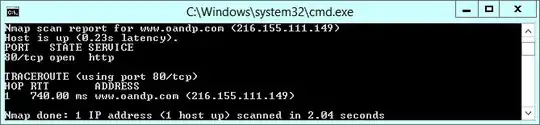

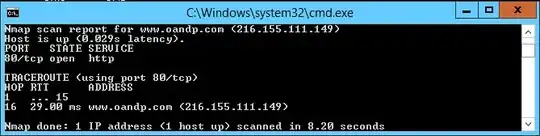

I know the next step would be some traceroute tests, but since Azure blocks ICMP, I had to use nmap to run a TCP traceroute; pasted below are the screenshots from those tests.

```
nmap -sS -p 80 -Pn --traceroute 216.155.111.149
```
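Comparing traceroutes across several Azure regions can be easier with text than with screenshots. If the same scan is rerun with nmap's `-oX <file>` option to save XML output, a small Python sketch like the one below could pull out the hop list. It assumes nmap's XML layout for `--traceroute` results: a `<trace>` element containing `<hop>` elements with `ttl`, `ipaddr`, and `rtt` attributes. The function name `parse_trace_hops` is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# (ttl, ip address, round-trip time in ms or None if not reported)
Hop = Tuple[int, str, Optional[float]]


def parse_trace_hops(xml_text: str) -> List[Hop]:
    """Return the hops of the first <trace> element, ordered by TTL.

    Assumes nmap's XML output structure:
    <nmaprun><host>...<trace><hop ttl=".." ipaddr=".." rtt=".."/></trace></host></nmaprun>
    Returns an empty list if no traceroute data is present.
    """
    root = ET.fromstring(xml_text)
    trace = root.find(".//trace")
    if trace is None:
        return []
    hops: List[Hop] = []
    for hop in trace.findall("hop"):
        rtt = hop.get("rtt")
        hops.append((int(hop.get("ttl")), hop.get("ipaddr", "?"),
                     float(rtt) if rtt else None))
    # Sort on TTL only, so a missing rtt (None) is never compared.
    return sorted(hops, key=lambda h: h[0])
```

Printing the result for each region makes it straightforward to see which hops the Azure traces share and where each trace stops responding.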