I log all events on a system to a JSON file via syslog-ng:

destination d_json { file("/var/log/all_syslog_in_json.log" perm(0666) template("{\"@timestamp\": \"$ISODATE\", \"facility\": \"$FACILITY\", \"priority\": \"$PRIORITY\", \"level\": \"$LEVEL\", \"tag\": \"$TAG\", \"host\": \"$HOST\", \"program\": \"$PROGRAM\", \"message\": \"$MSG\"}\n")); };

log { source(s_src); destination(d_json); };
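A side note on the template above: it builds the JSON by hand, so it is worth checking what happens when a macro value itself contains a double quote. The following is a minimal Python sketch, not syslog-ng itself. It assumes that macro values are substituted verbatim (no JSON escaping), and it uses `string.Template` only because it happens to share the `$NAME` syntax. The field values and the helper names `render` and `is_valid_json` are made up for illustration.

```python
import json
from string import Template

# Same layout as the syslog-ng template above, expressed as a Python Template.
TEMPLATE = Template(
    '{"@timestamp": "$ISODATE", "facility": "$FACILITY", "priority": "$PRIORITY", '
    '"level": "$LEVEL", "tag": "$TAG", "host": "$HOST", "program": "$PROGRAM", '
    '"message": "$MSG"}\n'
)


def render(msg):
    """Fill the template with illustrative values and the given message.

    Assumption: values are inserted verbatim, with no JSON escaping.
    """
    return TEMPLATE.substitute(
        ISODATE="2015-10-21T20:14:05+02:00",
        FACILITY="auth",
        PRIORITY="info",
        LEVEL="info",
        TAG="26",
        HOST="eu2",
        PROGRAM="sshd",
        MSG=msg,
    )


def is_valid_json(line):
    """Return True if the line parses as JSON, False otherwise."""
    try:
        json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


ok_line = render("Disconnected from 10.8.100.112")
bad_line = render('user said "hello"')  # hypothetical message with quotes

print(is_valid_json(ok_line))
print(is_valid_json(bad_line))
```

Under that assumption, a line whose message contains an unescaped double quote would no longer parse with a JSON codec, which may matter further down the chain.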

This file is monitored by Logstash (2.0 beta), which forwards the content to Elasticsearch (2.0 RC1):

input {
  file {
    path => "/var/log/all_syslog_in_json.log"
    start_position => "beginning"
    codec => json
    sincedb_path => "/etc/logstash/db_for_watched_files.db"
    type => "syslog"
  }
}

output {
  elasticsearch {
    hosts => ["elk.example.com"]
    index => "logs"
  }
}

I then visualize the results in Kibana.

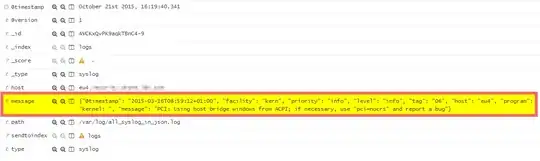

This setup works fine, except that Kibana does not expand the message part.

Is it possible to tweak any element of the processing chain to enable the expansion of messages, so that their components are at the same level as path or type?

EDIT: As requested, here are a few lines from /var/log/all_syslog_in_json.log:

{"@timestamp": "2015-10-21T20:14:05+02:00", "facility": "auth", "priority": "info", "level": "info", "tag": "26", "host": "eu2", "program": "sshd", "message": "Disconnected from 10.8.100.112"}

{"@timestamp": "2015-10-21T20:14:05+02:00", "facility": "authpriv", "priority": "info", "level": "info", "tag": "56", "host": "eu2", "program": "sshd", "message": "pam_unix(sshd:session): session closed for user nagios"}

{"@timestamp": "2015-10-21T20:14:05+02:00", "facility": "authpriv", "priority": "info", "level": "info", "tag": "56", "host": "eu2", "program": "systemd", "message": "pam_unix(systemd-user:session): session closed for user nagios"}
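Note that in these lines the message value is a plain string, not nested JSON, so any expansion has to come from parsing its text. One possible reading of "expanding the message" is sketched below in Python, purely to illustrate the desired result and not how Logstash or Kibana work internally. The two regular expressions and the field names `src_ip`, `pam_service`, `session_action` and `user` are hypothetical and only cover the sample lines above.

```python
import json
import re

# Two of the sample lines quoted above, verbatim.
SAMPLE_LINES = [
    '{"@timestamp": "2015-10-21T20:14:05+02:00", "facility": "auth", '
    '"priority": "info", "level": "info", "tag": "26", "host": "eu2", '
    '"program": "sshd", "message": "Disconnected from 10.8.100.112"}',
    '{"@timestamp": "2015-10-21T20:14:05+02:00", "facility": "authpriv", '
    '"priority": "info", "level": "info", "tag": "56", "host": "eu2", '
    '"program": "sshd", '
    '"message": "pam_unix(sshd:session): session closed for user nagios"}',
]

# Hypothetical patterns: one per message shape seen in the samples.
PATTERNS = [
    re.compile(r"^Disconnected from (?P<src_ip>\d{1,3}(?:\.\d{1,3}){3})$"),
    re.compile(
        r"^pam_unix\((?P<pam_service>[^:]+):session\): "
        r"session (?P<session_action>opened|closed) for user (?P<user>\S+)$"
    ),
]


def expand_message(line):
    """Decode one JSON log line and lift parts of "message" to top-level keys."""
    event = json.loads(line)
    for pattern in PATTERNS:
        match = pattern.match(event["message"])
        if match:
            # Named groups become fields next to "host", "program", etc.
            event.update(match.groupdict())
            break
    return event


events = [expand_message(line) for line in SAMPLE_LINES]
for event in events:
    print(json.dumps(event, indent=2))
```

The original message field is kept as is; the extracted parts are only added alongside it, which is the "same level as path or type" layout asked about above.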