See attached picture of Fusion Reactor, showing pages that just keep on running. Times have gone up into the millions and I've left them to see if they'd complete but that was when there were just 2 or 3.

Now I'm getting dozens of pages that just never finish. And it's different queries, I can't see any huge pattern except it only seems to apply to 3 of my 7 databases.

top shows coldfusion CPU usage around 70-120%, and digging deeper into the Fusion Reactor details pages shows all the time building up is spent solely on Mysql queries.

show processlist returns nothing unusual, execpt 10 - 20 connections in sleep state.

During this time many pages do complete, but as the the number of pages hanging builds up and they never seem to finish the server eventually just returns white pages.

The only short term solution seems to be restarting Coldfusion, which is far from ideal.

A Node.js script was recently added that runs every 5 minutes and checks for batch csv files to process, I wondered if that was causing a problem with stealing all the MySQL connections so I've disabled that (the script has no connection.end() method in it) but that's just a quick guess.

No idea where to start, can anyone help?

The worst part is the pages NEVER time out, if they did it wouldn't be so bad, but after a while nothing gets served.

I'm running a CentOS LAMP stack with Coldfusion and NodeJS as my primary scripting languages

UPDATE BEFORE ACTUALLY POSTING

During the time it took to write this post, which I started after disabling the Node script and restarting Coldfusion, the problem seems to have gone away.

But I'd still like some help identifying exactly why the pages woudlnt' time out and confirming that the Node script needs something like connection.end()

Also it might only happen under load, so I'm not 100% sure it has gone away

UPDATE

Still having issues, I've just copied one of the queries that is currently up to 70 seconds in Fusion Reactor, and run it manually in the database and it completed in a few milliseconds. The queries themselves don't seem to be a problem.

ANOTHER UPDATE

Stack trace of one of the pages still going. Server hasn't stopped serving pages in a while, all Node scripts currently disabled

MORE UPDATES

I had a few more of these today - they actually finished and I spotted this error in FusionReactor:

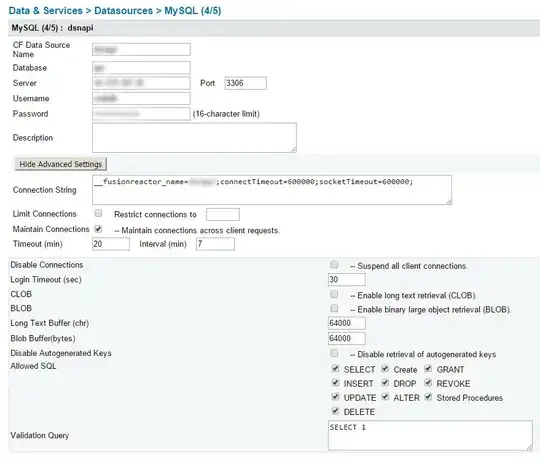

Error Executing Database Query. The last packet successfully received from the server was 7,200,045 milliseconds ago. The last packet sent successfully to the server was 7,200,041 milliseconds ago. is longer than the server configured value of 'wait_timeout'. You should consider either expiring and/or testing connection validity before use in your application, increasing the server configured values for client timeouts, or using the Connector/J connection property 'autoReconnect=true' to avoid this problem.

EVEN MORE UPDATES

Digging around the code, I tried looking for "2 h", "120" and "7200" as I felt the 7200000ms timeout was too much of a coincidence.

I found this code:

// 3 occurrences of this

createObject( "java", "coldfusion.tagext.lang.SettingTag" ).setRequestTimeout( javaCast( "double", 7200 ) );

// 1 occurrence of this

<cfsetting requestTimeOut="7200">

The 4 pages that reference those lines of code are run very rarely, have never shown up in the logs with the 2h+ time outs and are in a password protected area so can't be scraped (they were for file uploads and CSV processing, now moved to nodejs).

Is it possible that these settings could somehow be set by one page but exist in the server, and affect other requests?