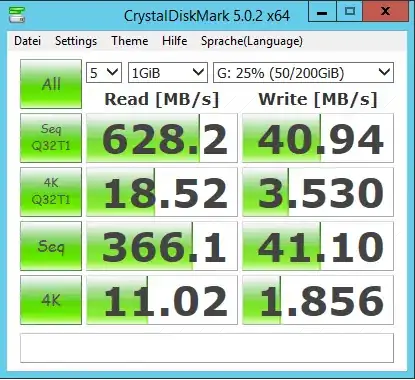

I am having a HP Proliant DL 160 Gen9 Server with a HP H240 Host Bus Adapter. 6x 1 TB Samsung SSD's configured in a raid 5 directly using the internal storage of the machine. After installing a VM on it using VMware (6.0) I did a benchmark with the following result:

After some research I came to the following conclusion:

A controller without cache has trouble computing the RAID 5 parity for each stripe, and I pay for that in write performance. But 630 MB/s read and 40 MB/s write seem rather poor. In any case, I found others with the same problem.
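To put rough numbers on that write penalty, here is a minimal Python sketch of the textbook RAID 5 model. It assumes that every write smaller than a full stripe triggers a read-modify-write (4 back-end I/Os), while a full-stripe write needs no reads; real controllers, and SSDs in particular, deviate from this simple model. A write-back cache helps partly because it lets the controller gather writes into full stripes before committing them. The function names are hypothetical, and the per-disk figures in the example are placeholders, not measurements of the Samsung SSDs.

```python
def raid5_random_write_iops(disks: int, iops_per_disk: float) -> float:
    """Rough random-write IOPS of a RAID 5 array under read-modify-write.

    Each small write that does not cover a full stripe costs 4 back-end I/Os:
    read old data, read old parity, write new data, write new parity.
    """
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return disks * iops_per_disk / 4


def raid5_full_stripe_write_mbps(disks: int, mbps_per_disk: float) -> float:
    """Upper bound for sequential full-stripe writes.

    Parity is computed from the new data alone (no reads), and one disk's
    worth of bandwidth goes to parity, leaving disks - 1 for user data.
    """
    if disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (disks - 1) * mbps_per_disk


if __name__ == "__main__":
    # Hypothetical per-SSD figures for illustration, not measured values.
    print(raid5_random_write_iops(6, 10_000))    # 15000.0
    print(raid5_full_stripe_write_mbps(6, 400))  # 2000
```

The gap between the two results is the point: the same six disks can look very different depending on whether writes reach them as full stripes or as read-modify-write cycles.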

Since I can't change the controller today, is there a way to test whether the controller is the bottleneck? Or do I really have to try a better one and see the result? What are my options? I am fairly new to server hardware and installation, since at my previous company this was handled by an outsourced hosting provider.
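One rough way to check whether the parity path is the limit is to run the same sequential write test on the RAID 5 array and on a volume without parity behind the same controller (for example a single SSD), then compare the numbers. Below is a minimal Python sketch of such a test, assuming Python is available in the VM; it is not a substitute for a dedicated benchmark tool, and the function name and parameters are hypothetical. It writes incompressible data to a temporary file and calls `fsync` before stopping the clock, so the OS page cache alone cannot make the result look faster than the storage underneath.

```python
import os
import tempfile
import time


def sequential_write_mbps(directory: str, total_bytes: int,
                          chunk_bytes: int = 4 * 1024 * 1024) -> float:
    """Write total_bytes of random data into a temporary file in `directory`
    and return the average throughput in MB/s (10**6 bytes per second).

    The temporary file is deleted automatically when the function returns.
    """
    if total_bytes <= 0 or chunk_bytes <= 0:
        raise ValueError("sizes must be positive")
    buf = os.urandom(chunk_bytes)  # random data, so nothing in the path can compress it
    written = 0
    with tempfile.NamedTemporaryFile(dir=directory, suffix=".bin") as f:
        start = time.perf_counter()
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(buf[:n])
            written += n
        f.flush()             # Python buffer -> OS
        os.fsync(f.fileno())  # OS page cache -> storage stack
        elapsed = time.perf_counter() - start
    return written / max(elapsed, 1e-9) / 1e6
```

For example, `sequential_write_mbps(r"D:\bench", 16 * 1024**3)` writes 16 GiB into `D:\bench`, a placeholder for a directory on the volume under test. If a single disk on the same controller writes much faster than the six-disk array, that points at the RAID 5 write path rather than at the SSDs.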

EDIT UPDATE

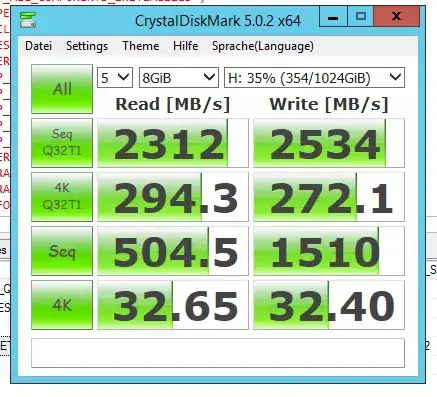

Here is the performance with the write cache enabled. Read performance had already gone up before I made this change. I'm not sure what happened; I had only been playing around with the BIOS settings of the Windows machine.

Today I will update the firmware to the latest version; let's see what difference it makes.

Here is a screenshot of a benchmark with the new P440 controller and its 4 GB cache enabled. (Enabling HP SSD Smart Path did not improve performance, by the way.) With the cache, the results are much better. Of course, I tested with files larger than 4 GB to make sure I was measuring the disks and not the cache.
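The "file larger than the cache" check can also be made visible. A minimal Python sketch, assuming a write-back controller cache of known size (4 GB on this P440): it writes a temporary file a given factor larger than the cache, calls `fsync` after every chunk, and records the throughput of each chunk. If the cache absorbs the first part of the write, early chunks should be faster and later ones should settle at what the SSDs can sustain. Whether `fsync` returns as soon as the data sits in the controller cache depends on how the OS and controller handle flush requests, so treat the curve as indicative only. The function name and parameters are hypothetical.

```python
import os
import tempfile
import time


def write_profile(directory: str, cache_bytes: int, factor: float = 2.0,
                  chunk_bytes: int = 64 * 1024 * 1024) -> list[float]:
    """Write a temporary file `factor` times the cache size into `directory`
    and return the throughput of each chunk in MB/s (10**6 bytes per second).
    """
    if factor <= 1:
        raise ValueError("factor must be > 1 so the file outgrows the cache")
    total = int(cache_bytes * factor)
    buf = os.urandom(chunk_bytes)  # incompressible payload
    rates: list[float] = []
    written = 0
    # The temporary file is deleted automatically when the block exits.
    with tempfile.NamedTemporaryFile(dir=directory, suffix=".bin") as f:
        while written < total:
            n = min(chunk_bytes, total - written)
            start = time.perf_counter()
            f.write(buf[:n])
            f.flush()             # Python buffer -> OS
            os.fsync(f.fileno())  # OS -> storage stack (controller)
            elapsed = time.perf_counter() - start
            rates.append(n / max(elapsed, 1e-9) / 1e6)
            written += n
    return rates
```

For example, `write_profile(r"D:\bench", cache_bytes=4 * 1024**3)` (with `D:\bench` standing in for a directory on the array) writes 8 GiB in 64 MiB chunks; printing the returned list shows whether, and roughly where, throughput drops once the cache is full.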