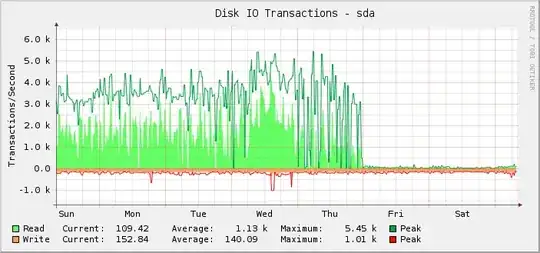

I have a Debian jessie server with two Intel DC S3610 SSDs in it, in RAID-10. It's reasonably busy for IO and for the last several weeks I've been graphing the IOPS:

As you can see, most of the time it was happily doing about 1k average read ops peaking at about 5.5k, until at midnight UTC on Friday that abruptly appears to stop and read ops drop down to almost nothing.

I've actually only noticed this retroactively because the thing is, the server is still performing as it should. That is, I believe it is the monitoring that is broken, not the amount of IOPS that the setup can do. If the actual IOPS had dropped to the level displayed, I would know because everything else would very noticeably break.

On further investigation the kilobytes read/written graphs are also broken at the same point. The request latency graphs are okay though.

In an attempt to rule out the particular graphing solution in use here (cacti and SNMP) I had a look at iostat. Its output matches what is being displayed on the graphs.

As far as I am aware iostat gets its info from /proc/diskstats. According to https://www.kernel.org/doc/Documentation/iostats.txt there will be the major, minor, device name and then a set of fields, of which the first one is the number of reads completed. So:

$ for i in {1..10}; do awk '/sda / { print $4 }' /proc/diskstats; sleep 1; done

3752035479

3752035484

3752035484

3752035486

3752035486

3752035519

3752035594

3752035631

3752036016

3752036374

It's just not credible that such a low number of reads were completed in that 10 second span.

But if /proc/diskstats is lying to me then what could the issue be and how can I hope to fix it?

Also of interest is the fact that whatever changed, changed at exactly midnight, which is rather a coincidence.

The server has quite a lot of block devices. 187 of them are LVM LVs, and another 18 are the usual partitions and md devices.

I've been regularly adding more LVs so it is possible that on Thursday I reached some sort of limit, though I did not add any anywhere near midnight so it is still strange that whatever has gone wrong has done so at midnight.

I know that /proc/diskstats can overflow, but when it does the numbers are typically erroneously huge.

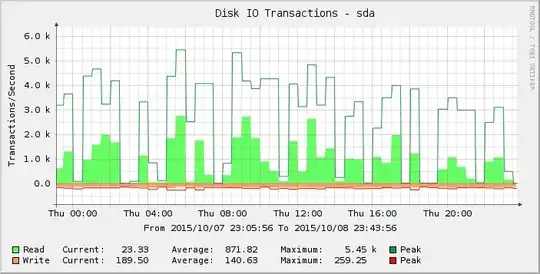

Looking at the graph a bit harder we can see that it seems more spiky on Thursday than previously in the week (and weeks) before it. Zooming in on the results just for that period we see:

Those gaps of zero or close to zero reads are abnormal and I do not believe they reflect reality. Perhaps the number of requests has been exceeding some threshold as I added more load such that it started to manifest on Thursday and by Friday most of the readings are now zero?

Does anyone have any ideas as to what is going on here?

Kernel version 3.16.7-ckt11-1+deb8u3.