After upgrading BIND to 9.8.2rc1-RedHat-9.8.2-0.37.rc1.el6_7.2 on a few caching nameservers, I've noticed that they are sending lots of outgoing NS queries, even though incoming traffic volume and patterns have not changed.

As a result, the servers are consuming much more CPU and network bandwidth, which has led to performance and capacity issues.

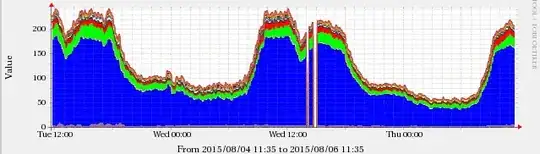

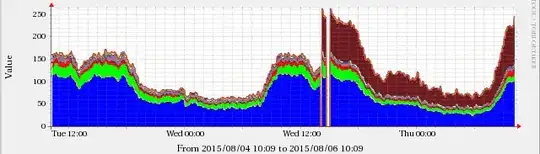

This did not happen with the previously installed version 9.8.2rc1-RedHat-9.8.2-0.30.rc1.el6_6.1 or with 9.8.2-0.30.rc1.el6_6.3 (the last version on CentOS 6.6), and the change in the graphs coincides with the time of the upgrade.

In the graphs above, the brown band corresponds to NS queries. The breaks are due to the server restarts after upgrading BIND.

A tcpdump shows thousands of queries per second asking for NS records for every queried hostname. This is odd, as I expected to see an NS query for the domain (example.com), not for the host (www.example.com).

```
16:19:42.299996 IP xxx.xxx.xxx.xxx.xxxxx > 198.143.63.105.53: 45429% [1au] NS? e2svi.x.incapdns.net. (49)
16:19:42.341638 IP xxx.xxx.xxx.xxx.xxxxx > 198.143.61.5.53: 53265% [1au] NS? e2svi.x.incapdns.net. (49)
16:19:42.348086 IP xxx.xxx.xxx.xxx.xxxxx > 173.245.59.125.53: 38336% [1au] NS? www.e-monsite.com. (46)
16:19:42.348503 IP xxx.xxx.xxx.xxx.xxxxx > 205.251.195.166.53: 25752% [1au] NS? moneytapp-api-us-1554073412.us-east-1.elb.amazonaws.com. (84)
16:19:42.367043 IP xxx.xxx.xxx.xxx.xxxxx > 205.251.194.120.53: 24002% [1au] NS? LB-lomadee-adservernew-678401945.sa-east-1.elb.amazonaws.com. (89)
16:19:42.386563 IP xxx.xxx.xxx.xxx.xxxxx > 205.251.194.227.53: 40756% [1au] NS? ttd-euwest-match-adsrvr-org-139334178.eu-west-1.elb.amazonaws.com. (94)
```
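To put a number on how many NS queries target full hostnames rather than zone apexes, a small parser over a saved tcpdump text capture can help. This is a minimal sketch, assuming tcpdump's default one-packet-per-line text output as in the lines above (query type followed by `?`, then the name and the packet length in parentheses). `ns_queries` and `label_count` are hypothetical helper names, and the label count is only a rough hint, not a reliable way to locate the zone cut.

```python
import re
from collections import Counter

# Matches the query part of a tcpdump line such as
# "... 45429% [1au] NS? e2svi.x.incapdns.net. (49)"
# and captures the query type and the queried name.
QUERY_RE = re.compile(r"\s(?P<qtype>[A-Z0-9]+)\? (?P<qname>\S+) \(\d+\)$")


def ns_queries(lines):
    """Count NS queries per queried name (lowercased) in tcpdump text output."""
    counts = Counter()
    for line in lines:
        m = QUERY_RE.search(line.strip())
        if m and m.group("qtype") == "NS":
            counts[m.group("qname").lower()] += 1
    return counts


def label_count(name):
    """Number of labels in a name, ignoring the trailing root dot."""
    return len(name.rstrip(".").split("."))


# Three lines copied from the capture above.
sample = [
    "16:19:42.299996 IP xxx.xxx.xxx.xxx.xxxxx > 198.143.63.105.53: 45429% [1au] NS? e2svi.x.incapdns.net. (49)",
    "16:19:42.341638 IP xxx.xxx.xxx.xxx.xxxxx > 198.143.61.5.53: 53265% [1au] NS? e2svi.x.incapdns.net. (49)",
    "16:19:42.348086 IP xxx.xxx.xxx.xxx.xxxxx > 173.245.59.125.53: 38336% [1au] NS? www.e-monsite.com. (46)",
]

for name, n in ns_queries(sample).most_common():
    print(f"{n:3d}  {label_count(name)} labels  {name}")
```

Run over a full capture, names with many labels (for example the `*.elb.amazonaws.com` hosts) showing up as NS targets would match what the question describes.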

A tcpdump of a single client request shows:

```
## client query
17:30:05.862522 IP <client> > <my_server>.53: 1616+ A? cid-29e117ccda70ff3b.users.storage.live.com. (61)

## recursive resolution (OK)
17:30:05.866190 IP <my_server> > 134.170.107.24.53: 64819% [1au] A? cid-29e117ccda70ff3b.users.storage.live.com. (72)
17:30:05.975450 IP 134.170.107.24.53 > <my_server>: 64819*- 1/0/1 A 134.170.111.24 (88)

## garbage NS queries
17:30:05.984892 IP <my_server> > 134.170.107.96.53: 7145% [1au] NS? cid-29e117ccda70ff3b.users.storage.live.com. (72)
17:30:06.105388 IP 134.170.107.96.53 > <my_server>: 7145- 0/1/1 (158)
17:30:06.105727 IP <my_server> > 134.170.107.72.53: 36798% [1au] NS? cid-29e117ccda70ff3b.users.storage.live.com. (72)
17:30:06.215747 IP 134.170.107.72.53 > <my_server>: 36798- 0/1/1 (158)
17:30:06.218575 IP <my_server> > 134.170.107.48.53: 55216% [1au] NS? cid-29e117ccda70ff3b.users.storage.live.com. (72)
17:30:06.323909 IP 134.170.107.48.53 > <my_server>: 55216- 0/1/1 (158)
17:30:06.324969 IP <my_server> > 134.170.107.24.53: 53057% [1au] NS? cid-29e117ccda70ff3b.users.storage.live.com. (72)
17:30:06.436166 IP 134.170.107.24.53 > <my_server>: 53057- 0/1/1 (158)

## response to client (OK)
17:30:06.438420 IP <my_server>.53 > <client>: 1616 1/1/4 A 134.170.111.24 (188)
```
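The timestamps in this trace suggest the NS lookups may also be delaying the reply, not just adding traffic: the A answer arrives at 17:30:05.975450, but the client is only answered at 17:30:06.438420, after four NS round trips. A minimal sketch of that arithmetic, using only the timestamps copied from the trace (the dictionary keys are illustrative names, not anything BIND or tcpdump defines):

```python
from datetime import datetime

# Timestamps copied from the client trace (tcpdump default HH:MM:SS.ffffff).
TRACE = {
    "client_query": "17:30:05.862522",
    "upstream_answer": "17:30:05.975450",  # A record received from 134.170.107.24
    "client_response": "17:30:06.438420",
}
NS_QUERIES_SENT = 4  # NS? queries sent between the upstream answer and the client response


def ts(value):
    """Parse a tcpdump time-of-day stamp; the date is irrelevant for same-day deltas."""
    return datetime.strptime(value, "%H:%M:%S.%f")


def delays(trace):
    """Return total client-visible latency and the part spent after the A answer arrived."""
    t0 = ts(trace["client_query"])
    t1 = ts(trace["upstream_answer"])
    t2 = ts(trace["client_response"])
    return {
        "total": (t2 - t0).total_seconds(),
        "after_answer": (t2 - t1).total_seconds(),
    }


d = delays(TRACE)
print(f"total latency seen by client: {d['total']:.6f} s")
print(f"spent after the A answer arrived: {d['after_answer']:.6f} s "
      f"across {NS_QUERIES_SENT} NS queries")
```

For this one request, about 0.463 s of the roughly 0.576 s the client waited elapsed after the A record had already been received.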

I thought this could be a cache population issue, but the NS traffic did not subside even after the server had been running for a week.
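One way to test the cache-population theory over a longer window is to bucket NS queries by hour and see whether the count trends down. This is a minimal sketch, assuming the capture was printed with `tcpdump -tttt` (which prefixes each line with the date) and saved as text; `ns_per_hour` is a hypothetical helper, not part of any tool.

```python
import re
from collections import Counter
from datetime import datetime

# Assumes `tcpdump -tttt` output, e.g.
# "2015-08-13 16:19:42.299996 IP ... 45429% [1au] NS? e2svi.x.incapdns.net. (49)"
LINE_RE = re.compile(
    r"^(?P<stamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+) IP "
    r".*\s(?P<qtype>[A-Z0-9]+)\? \S+ \(\d+\)$"
)


def ns_per_hour(lines):
    """Map each hour (datetime truncated to the hour) to the number of NS queries seen."""
    buckets = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m or m.group("qtype") != "NS":
            continue  # skip responses, non-NS queries and unparsable lines
        when = datetime.strptime(m.group("stamp"), "%Y-%m-%d %H:%M:%S.%f")
        buckets[when.replace(minute=0, second=0, microsecond=0)] += 1
    return buckets


def report(buckets):
    """Format the hourly counts in chronological order."""
    return [f"{hour:%Y-%m-%d %H:00}  {n}" for hour, n in sorted(buckets.items())]
```

For example, `report(ns_per_hour(open("capture.txt")))` on a hypothetical saved capture. A roughly flat hourly count across days would be consistent with the behaviour described here, while a steady decline would point back to cache warm-up.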

Some details:

- The issue did not occur on CentOS 6.6 x86_64, fully patched.
- The servers are running CentOS 6.7 x86_64 (fully patched as of 2015-08-13).
- BIND is running in a chroot'ed environment with extra arguments (partially redacted): `ROOTDIR=/var/named/chroot ; OPTIONS="-4 -n4 -S 8096"`
- The `named.conf` contents are below.

What is going on here? Is there a way to change the configuration to avoid this behaviour?

```
acl xfer {
    (snip)
};

acl bogusnets {
    0.0.0.0/8; 1.0.0.0/8; 2.0.0.0/8; 192.0.2.0/24; 224.0.0.0/3;
};

acl clients {
    (snip)
};

acl privatenets {
    127.0.0.0/24; 10.0.0.0/8; 172.16.0.0/12; 192.168.0.0/16;
};

acl ops {
    (snip)
};

acl monitoring {
    (snip)
};

include "/etc/named.root.key";

key rndckey {
    algorithm hmac-md5;
    secret (snip);
};

key "monitor" {
    algorithm hmac-md5;
    secret (snip);
};

controls {
    inet 127.0.0.1 allow { localhost; } keys { rndckey; };
    inet (snip) allow { monitoring; } keys { monitor; };
};

logging {
    channel default_syslog { syslog local6; };
    category lame-servers { null; };
    channel update_debug {
        file "/var/log/named-update-debug.log";
        severity debug 3;
        print-category yes;
        print-severity yes;
        print-time yes;
    };
    channel security_info {
        file "/var/log/named-auth.info";
        severity info;
        print-category yes;
        print-severity yes;
        print-time yes;
    };
    channel querylog {
        file "/var/log/named-querylog" versions 3 size 10m;
        severity info;
        print-category yes;
        print-time yes;
    };
    category queries { querylog; };
    category update { update_debug; };
    category security { security_info; };
    category query-errors { security_info; };
};

options {
    directory "/var/named";
    pid-file "/var/run/named/named.pid";
    statistics-file "/var/named/named.stats";
    dump-file "/var/named/named_dump.db";
    zone-statistics yes;
    version "Not disclosed";
    listen-on-v6 { any; };
    allow-query { clients; privatenets; };
    recursion yes; // default
    allow-recursion { clients; privatenets; };
    allow-query-cache { clients; privatenets; };
    recursive-clients 10000;
    resolver-query-timeout 5;
    dnssec-validation no;
    querylog no;
    allow-transfer { xfer; };
    transfer-format many-answers;
    max-transfer-time-in 10;
    notify yes; // default
    blackhole { bogusnets; };
    response-policy {
        zone "rpz";
        zone "netrpz";
    };
};

include "/etc/named.rfc1912.zones";
include "/etc/named.zones";

statistics-channels {
    inet (snip) port 8053 allow { ops; };
    inet 127.0.0.1 port 8053 allow { 127.0.0.1; };
};

zone "rpz" { type slave; file "slaves/rpz"; masters { (snip) }; };
zone "netrpz" { type slave; file "slaves/netrpz"; masters { (snip) }; };
```