I am running a LAMP application on a single server, happily serving about 1M page impressions (PI) per month. I am now looking into a potential partnership where my app might serve around 50-80M requests per month.
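To put those monthly figures into per-second terms, here is a rough back-of-envelope sketch. It assumes a 30-day month and evenly spread traffic for the average. The `peak_factor` of 5 is purely a guess of mine to illustrate bursts, not a measured value, and the function names are only for illustration.

```python
# Back-of-envelope conversion from requests per month to requests per second.
# Assumption: a 30-day month; real months vary slightly.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds


def average_rps(requests_per_month: float) -> float:
    """Average requests per second if traffic were spread perfectly evenly."""
    return requests_per_month / SECONDS_PER_MONTH


def peak_rps(requests_per_month: float, peak_factor: float = 5.0) -> float:
    """Estimated peak rate. peak_factor is a hypothetical burst multiplier."""
    return average_rps(requests_per_month) * peak_factor


if __name__ == "__main__":
    for monthly in (50_000_000, 80_000_000):
        print(
            f"{monthly:,} req/month: "
            f"avg {average_rps(monthly):.1f} req/s, "
            f"assumed peak {peak_rps(monthly):.1f} req/s"
        )
```

So the average works out to somewhere around 19 to 31 requests per second, with peaks depending on how bursty the partner's traffic turns out to be.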

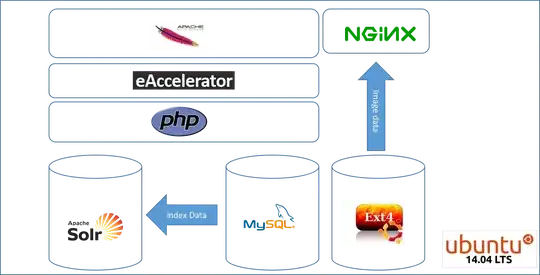

This is how my architecture looks like:

So images are served from static.domain.com, while the application is served by www.domain.com. The API, where 90% of the traffic comes from, is under a separate HTTPS domain, api.domain.com, but it queries the same MySQL and Solr stack.

Would one root server with 128GB RAM, SSDs in software RAID 1, and an Intel® Xeon® E5-1650 v3 Hexa-Core Haswell be able to handle that load? Most requests will go against Solr and just deliver a JSON feed, potentially never hitting the disk.

Is 128GB of RAM overkill, or will one server not even be able to handle that load? I could also go with 2 servers and load balance between them. The question is how to do that within this architecture.

Thank you for any hints on this.