This is my first post on Stack Exchange after years of roaming around.

I'm having difficulty deciding on a rack setup that meets our company's needs for scalability, manageability, and future-proofing. I want to purchase two server/network racks, with all of the networking equipment in one rack and all of the server equipment in the other, so that we have room for future growth within this office space. What are the options for connecting the server rack to the network rack? Do I use a patch panel in each rack to wire the racks together directly? Or do I put a switch in the server rack and connect its uplink ports back to the network rack? All of the terminated connections will come to patch panels in the network rack.

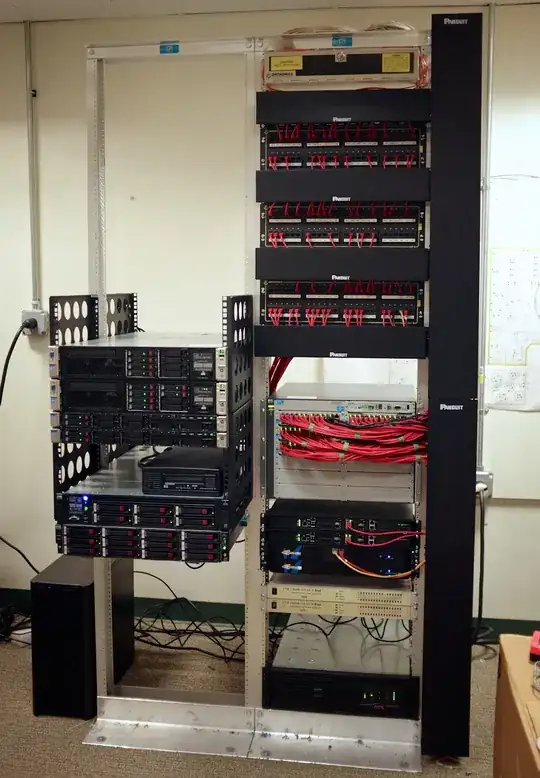

Here is the equipment that will need to be racked:

- 4x 48-port 1U switches
- 1x 48-port 1U PoE switch
- Nx patch panels
- 1x 2U server
- 1x 2U NAS
- 2x 1U Mac Mini brackets
- 1x 1U KVM
- 1x 1U server
- 1x 1U firewall
- 1x 3U shelf
- 2x UPS (let's say 6U)
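
For a rough sense of how much space this list takes, here is a quick tally in Python. It is only a sketch under a few assumptions that are not settled above: each patch panel is taken to be 1U, the "6U" for the UPS units is treated as their combined height, and cable-management bars and blanking panels are not counted. The names `EQUIPMENT`, `used_units`, and `free_units` are just illustrative.

```python
# Rack-unit (U) tally for the equipment listed above.
# Assumptions (not confirmed in the post): each patch panel is 1U,
# and "6U" is the combined height of both UPS units.
EQUIPMENT = [
    # (description, quantity, height in U per item)
    ("48-port switch", 4, 1),
    ("48-port PoE switch", 1, 1),
    ("2U server", 1, 2),
    ("2U NAS", 1, 2),
    ("Mac Mini bracket", 2, 1),
    ("KVM", 1, 1),
    ("1U server", 1, 1),
    ("Firewall", 1, 1),
    ("Shelf", 1, 3),
    ("UPS (both units combined)", 1, 6),
]

RACK_HEIGHT_U = 42  # height of each planned rack
RACK_COUNT = 2      # network rack + server rack


def used_units(patch_panels: int, panel_height_u: int = 1) -> int:
    """Total U occupied by the listed gear plus `patch_panels` panels."""
    fixed = sum(qty * height for _, qty, height in EQUIPMENT)
    return fixed + patch_panels * panel_height_u


def free_units(patch_panels: int) -> int:
    """U left over across both racks for a given patch panel count."""
    return RACK_HEIGHT_U * RACK_COUNT - used_units(patch_panels)


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(f"{n} patch panels: {used_units(n)}U used, {free_units(n)}U free")
```

Under those assumptions the fixed equipment comes to 23U before patch panels, so two 42U racks would leave a lot of headroom whichever way the racks end up connected.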

I want to get two 42U racks to plan for future growth in this office space. From what I've read, some people put a patch panel in the server rack and another in the network rack and connect the two together. That doesn't seem like it would scale well if we ever decided to add a third rack. Other people say to put a switch in the server rack and uplink it to the network rack. That would scale better, but in the meantime the unused ports on that switch would go to waste. We are getting the office wired, and unfortunately we will not have a separation between PoE and non-PoE lines at the patch panel level (i.e. there won't be one panel devoted to just PoE connections). We want to keep cable management as clean as possible. Any thoughts?

EDIT: I ended up going with the following layout: two racks side by side, with the networking equipment in one rack and the servers in the other. I connected the two racks with a patch panel in each rack. This lets me use only the ports I need, since I couldn't afford to buy another switch to put in the server rack.